The Nutanix Cloud Bible - Classic Edition

Welcome to the classic edition of The Nutanix Cloud Bible. The purpose of The Nutanix Cloud Bible is to provide in-depth technical information about the Nutanix platform architecture.

The Basics: Compute, Storage and Networking

Learn the basics of Web-scale principles and core architectural concepts.

web·scale - /web ‘ skãl/ - noun - computing architecture

a new architectural approach to infrastructure and computing.

Nutanix leverages “Web-scale” principles throughout our software stack. Web-scale doesn’t mean you need to be as big as Google, Facebook, Amazon, or Microsoft in order to leverage them. Web-scale principles are applicable and beneficial at any scale, whether 3-nodes or thousands of nodes.

There are a few key constructs used when talking about “Web-scale” infrastructure:

- Hyper-convergence

- Software defined intelligence

- Distributed autonomous systems

- Incremental and linear scale out

Other related items:

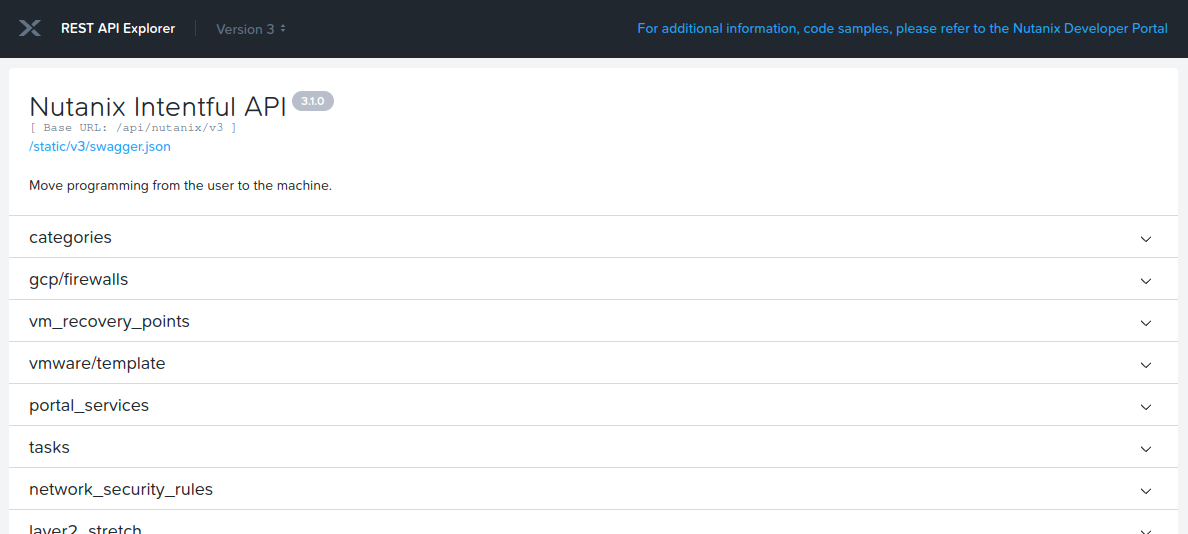

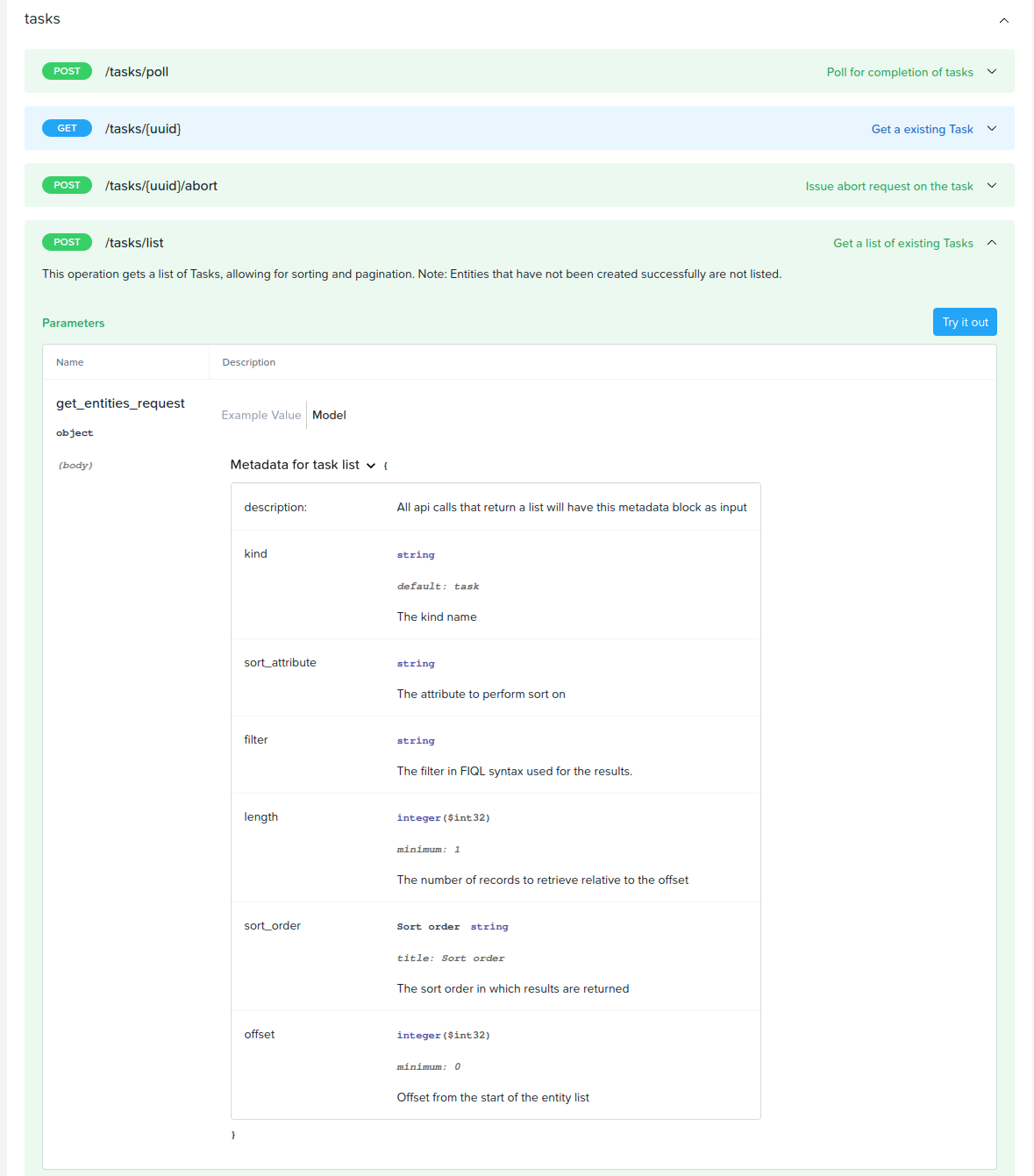

- API-based automation and rich analytics

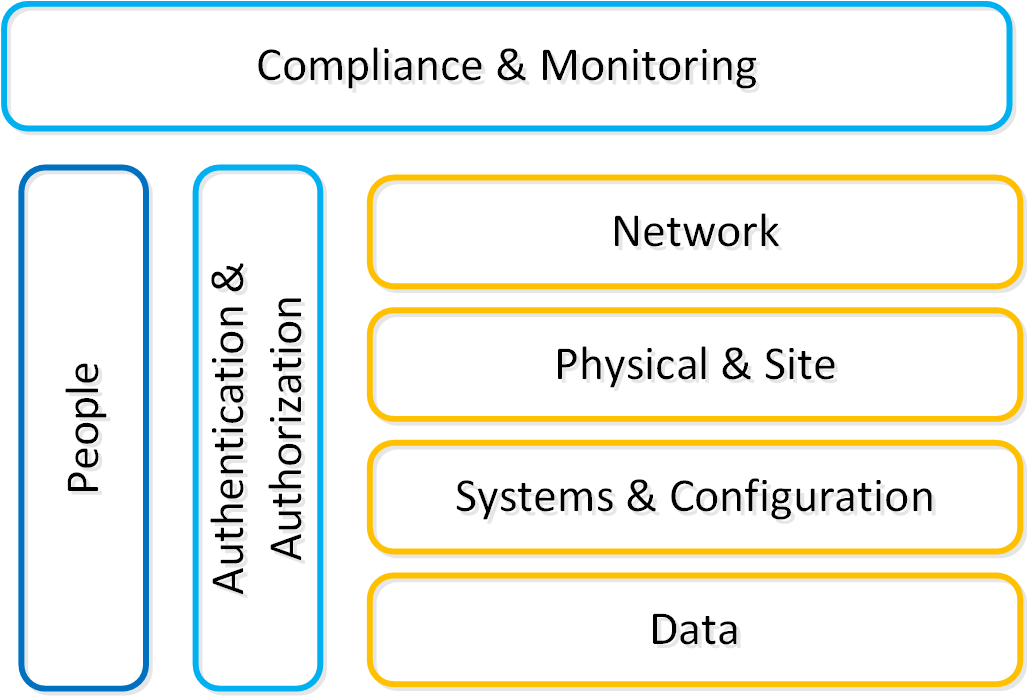

- Security as a core tenet

- Self-healing

This book will cover these basics as well as the core architectural concepts.

When Nutanix was conceived it was focused on one goal:

Make infrastructure computing invisible, anywhere.

This simplicity was to be achieved by focusing on three core areas:

- Enable choice and portability (HCI/Cloud/Hypervisor)

- Simplify the “stack” through convergence, abstraction and intelligent software (AOS)

- Provide an intuitive user interface (UI) through focus on user experience (UX) and design (Prism)

HCI/Cloud/Hypervisor: “The Choice”

Though we started with a single hardware platform (NX) supporting a single hypervisor (ESXi), we’ve always known we were more than a single hypervisor/platform/cloud company. This was one of the reasons we chose to build our own UI from scratch rather than run as a plug-in in vCenter, and to run as a VM rather than natively in the kernel (there are many more reasons for that choice). Why, you may ask? Choice.

No single hypervisor, platform, or cloud will fit all customers’ needs. By supporting multiple under the same platform we give the customer choice and leverage. By giving them the ability to move between them, we give them flexibility. All of this is delivered with the same experience since it’s all part of the Nutanix platform.

We now have support for over 12 different hardware platforms (direct/OEM/third-party), multiple hypervisors (AHV, ESXi, Hyper-V, etc.), and expanding integrations with all of the major cloud vendors (AWS, Azure, GCP). This allows customers to choose what is best for them, and to use that choice as leverage in negotiations between vendors.

NOTE: Platform is one key word that is used throughout the section and in general. We’re not trying to build one-off products, we’re building a platform.

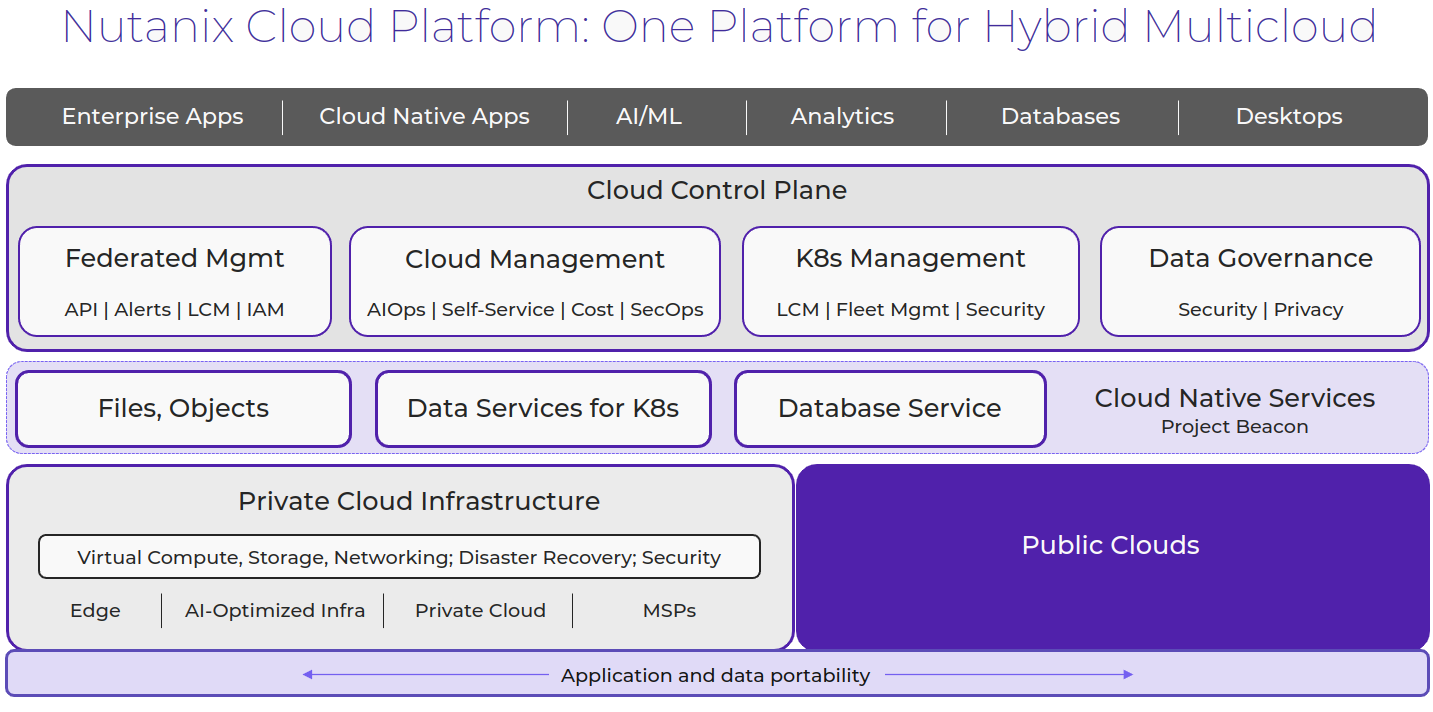

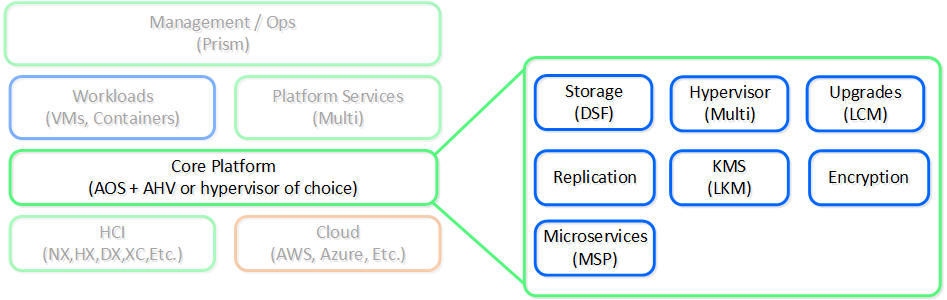

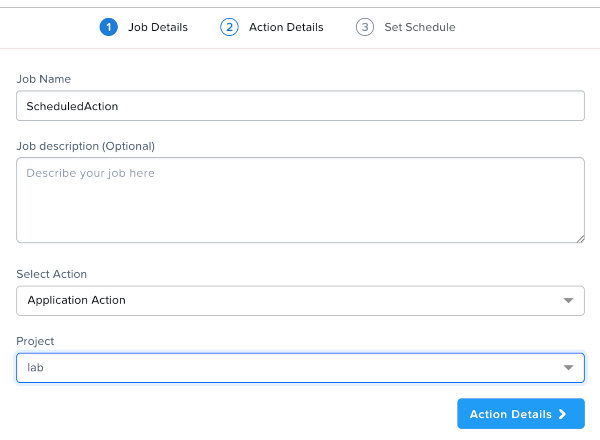

The following shows a high-level architecture of the Nutanix platform:

Nutanix Platform - Architecture

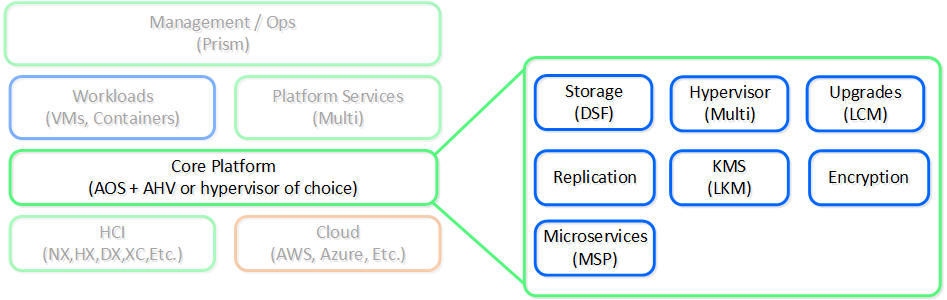

AOS + AHV/Hypervisor: “The Runtime”

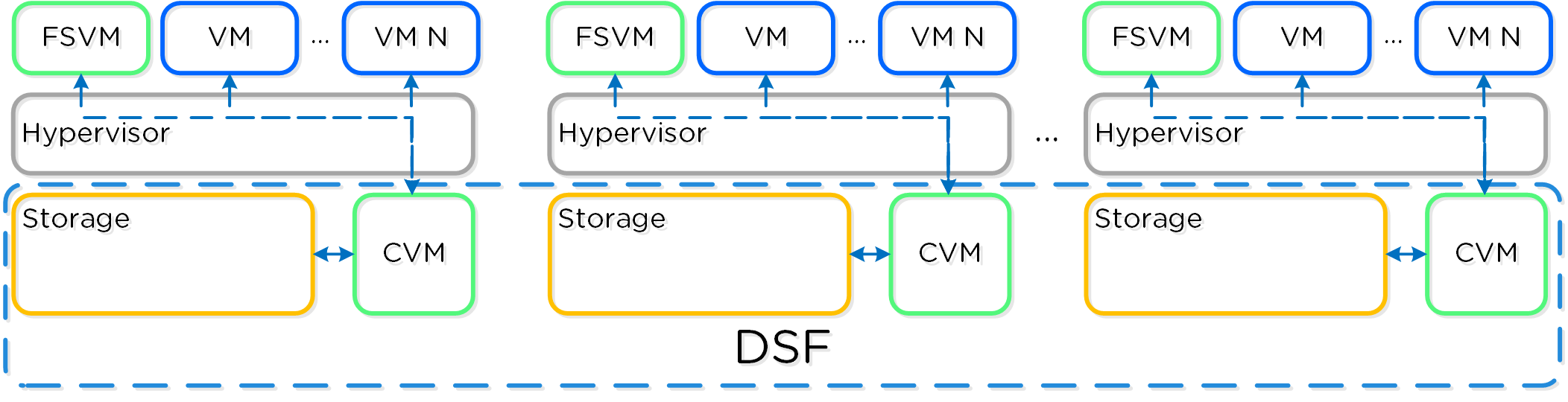

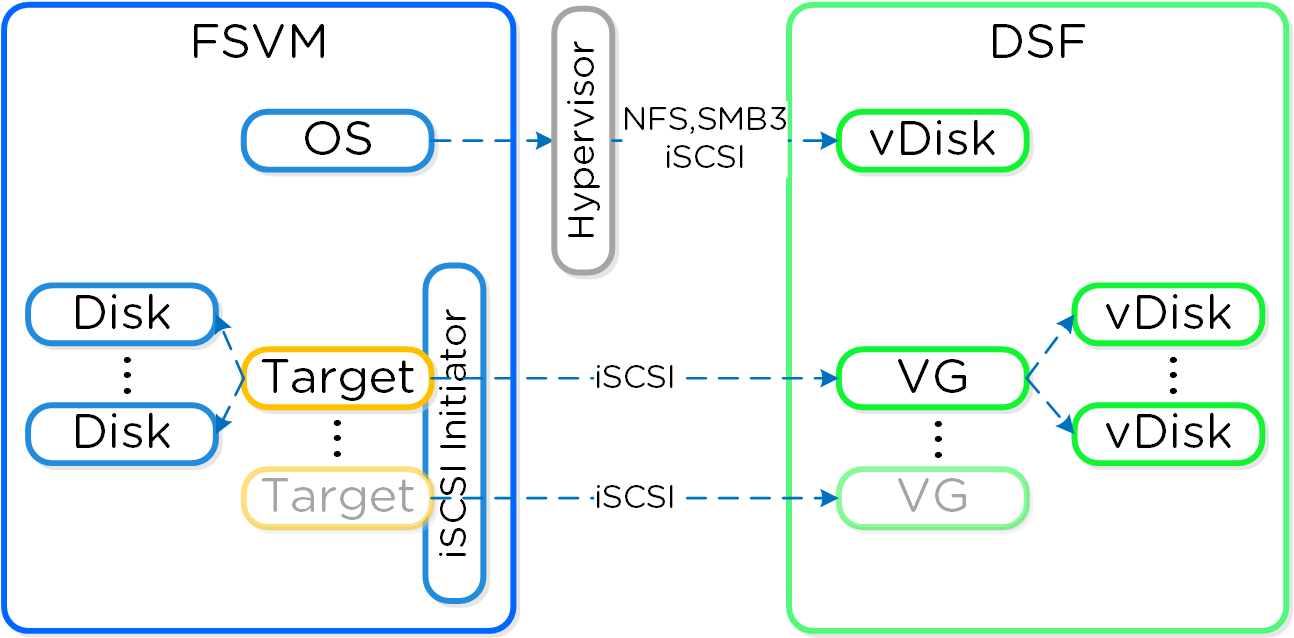

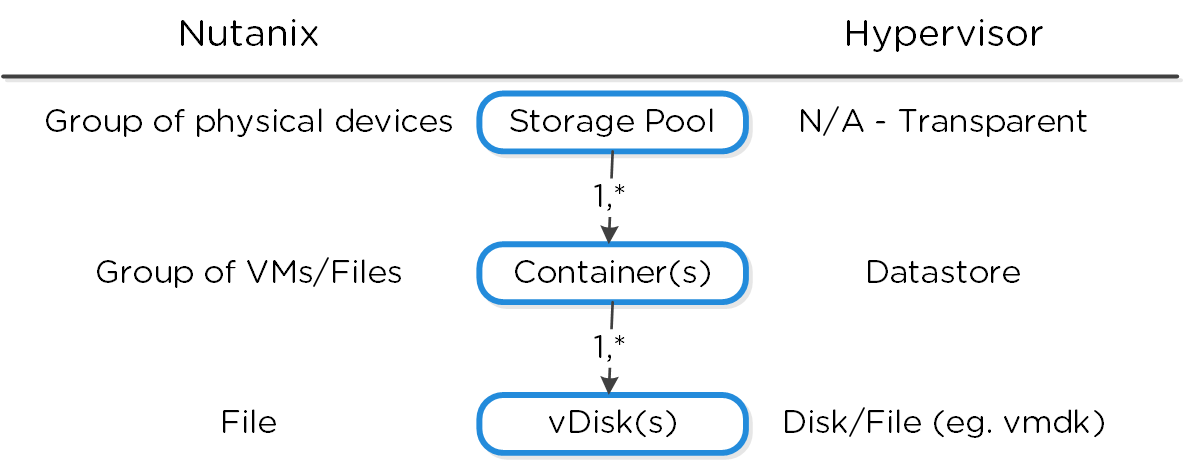

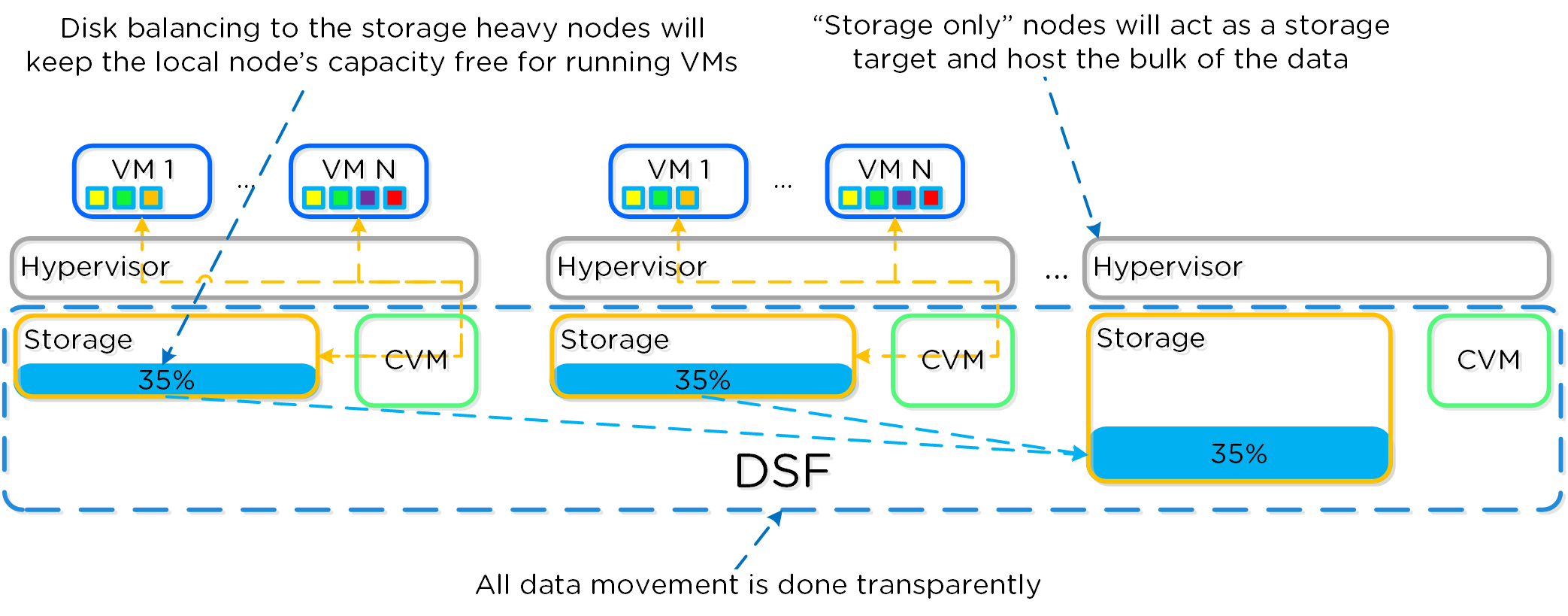

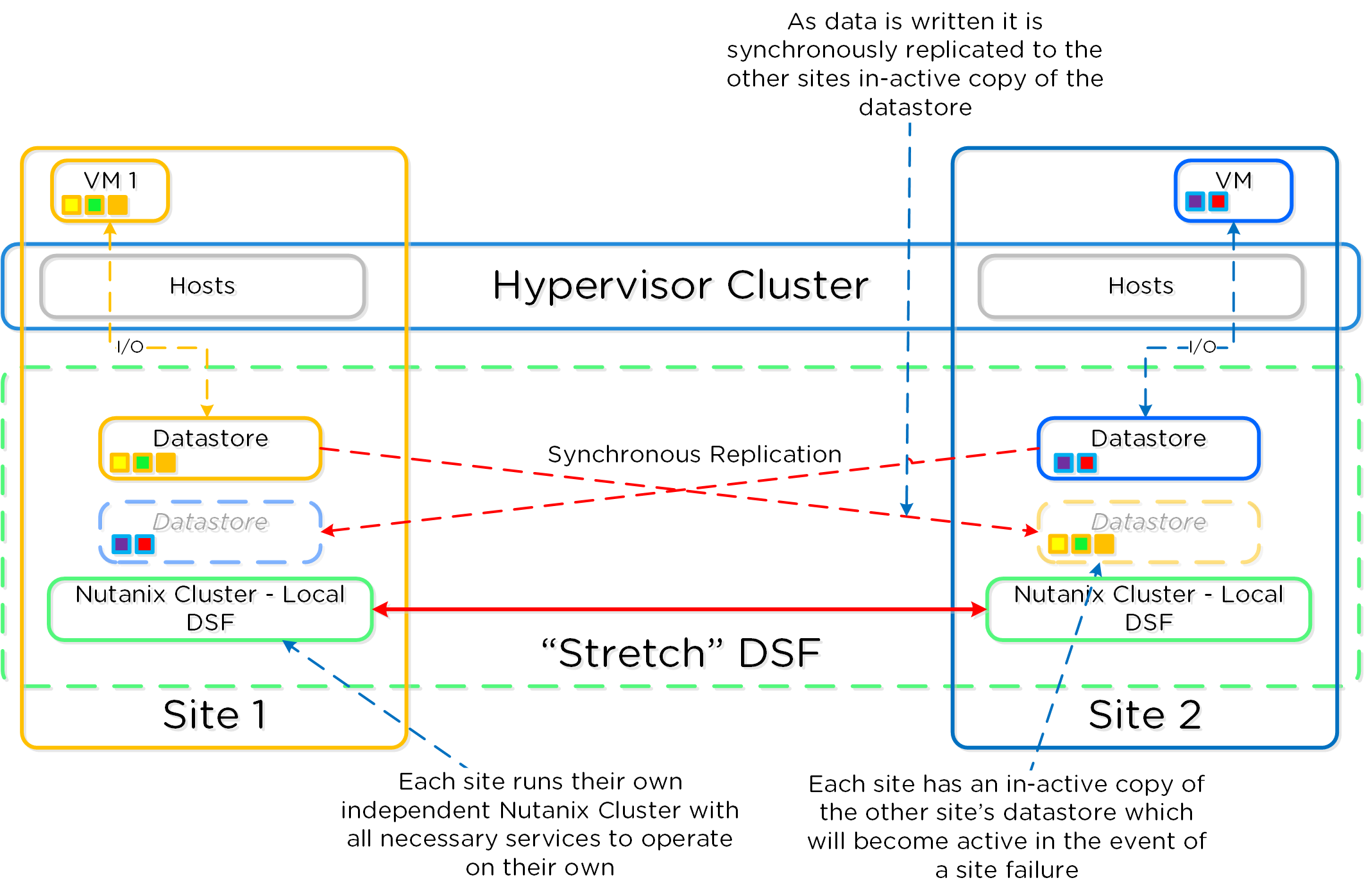

We started this journey by simplifying storage with a feature called the Distributed Storage Fabric (DSF), then known as the Nutanix Distributed Filesystem (NDFS), which combined local storage resources with intelligent software to provide “centralized storage”-like capabilities.

Over the years, we’ve added a great deal of features and capabilities. To simplify things these have been broken down into two core areas:

- Core Services

  - Foundational services

- Platform Services

  - Services building upon core services providing additional capabilities/services

The core provides the foundational services and components that facilitate the running of workloads (VMs/Containers) and other higher-level Nutanix services. In the beginning this was just the DSF product; however, we continue to expand the platform’s capabilities to help simplify and abstract the stack.

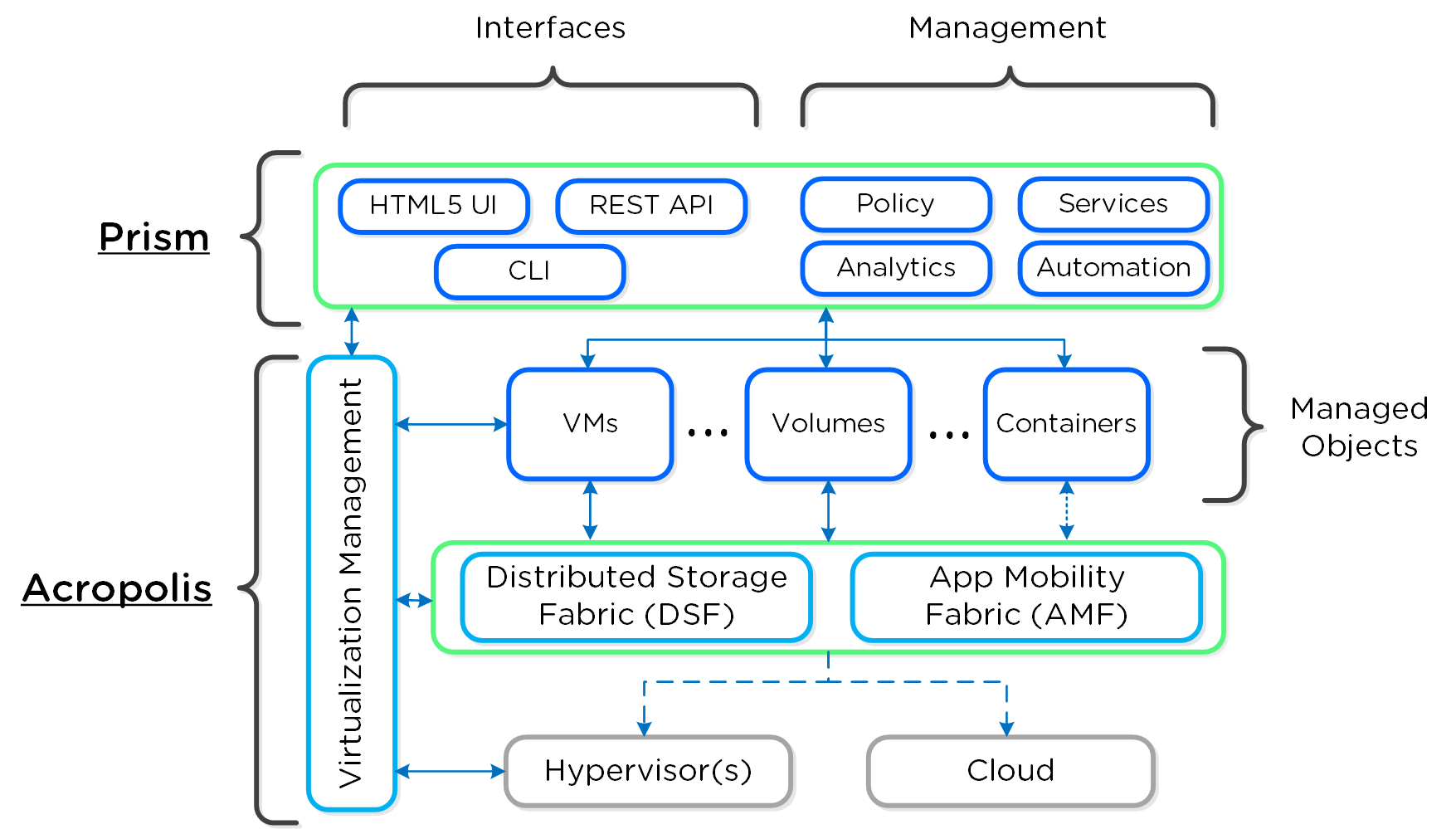

The following shows a high-level view of the AOS core platform:

Nutanix Platform - AOS Core

Over the years this has expanded into things like abstracting virtualization (we believe this should be something transparent and part of the system) by introducing our own hypervisor (AHV), simplifying upgrades, and providing other essential services like security and encryption.

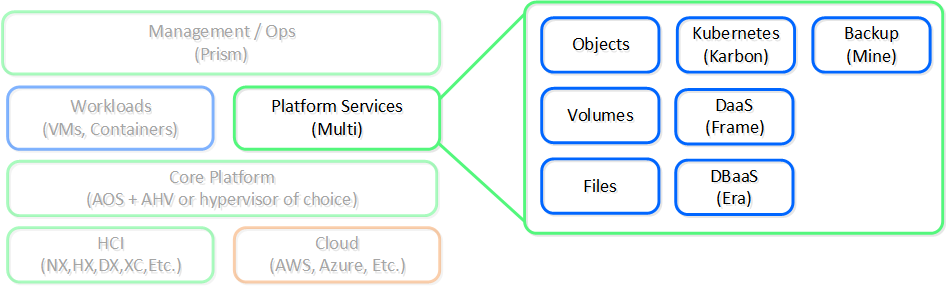

With these capabilities we solved for a lot of the infrastructure level issues, but we didn’t stop there. People still needed additional services like file shares, object storage, or containers.

Rather than requiring customers to use other vendors and products for some services, we figured out which ones we should partner on and which ones we should build ourselves. For backup we partnered with vendors like Veeam and Hycu; for others, like file and object services, we built them as services into the platform.

The following shows a high-level view of the Nutanix platform services:

Nutanix Platform - Services

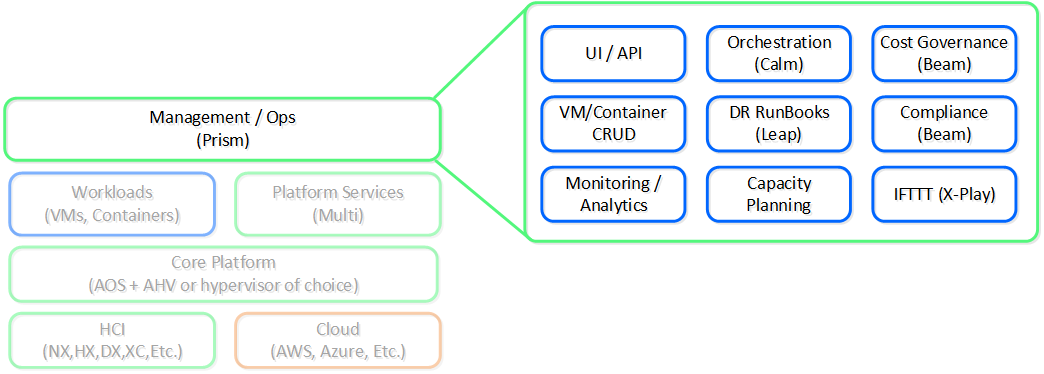

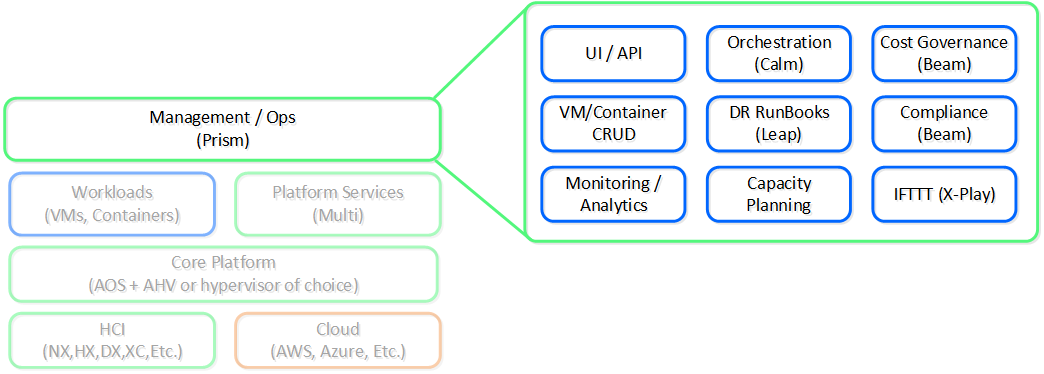

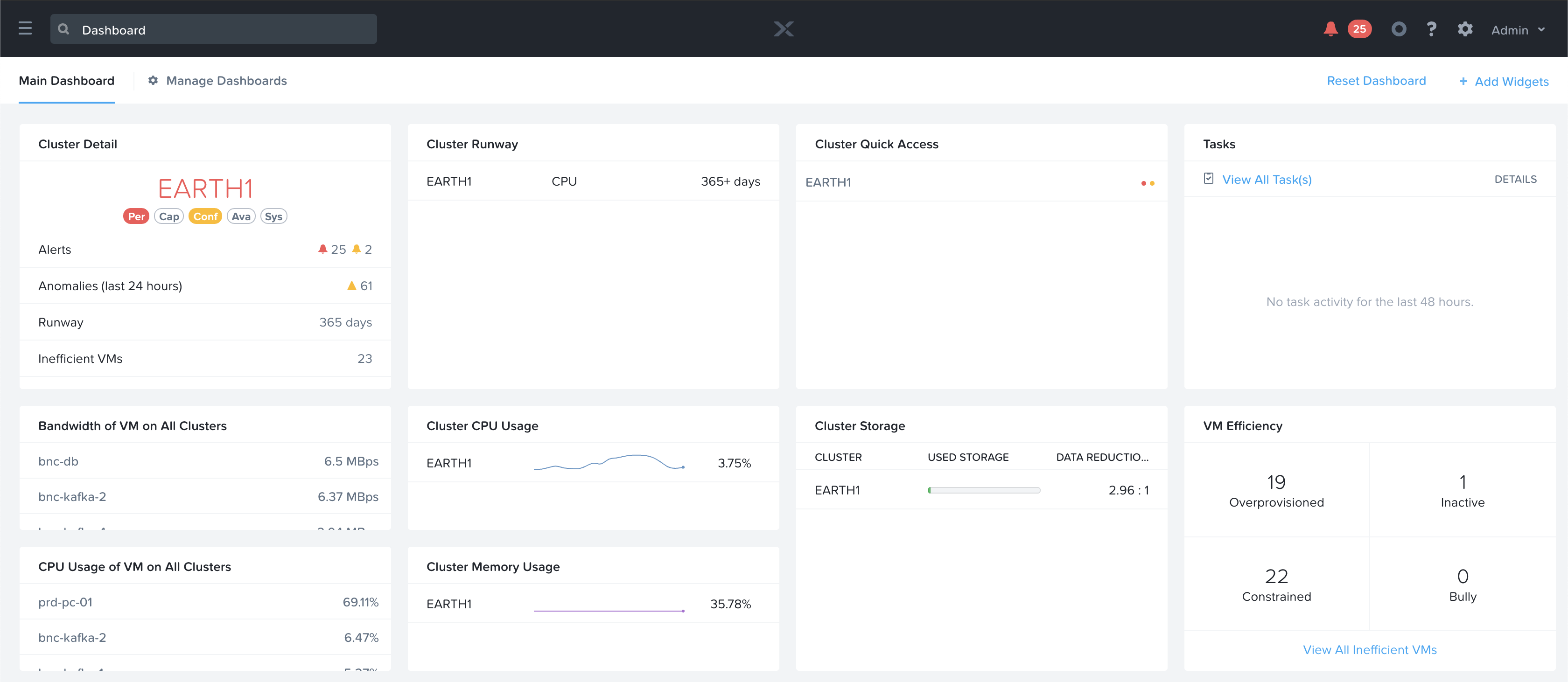

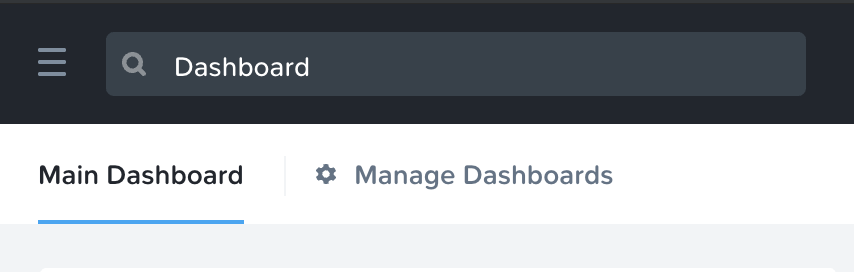

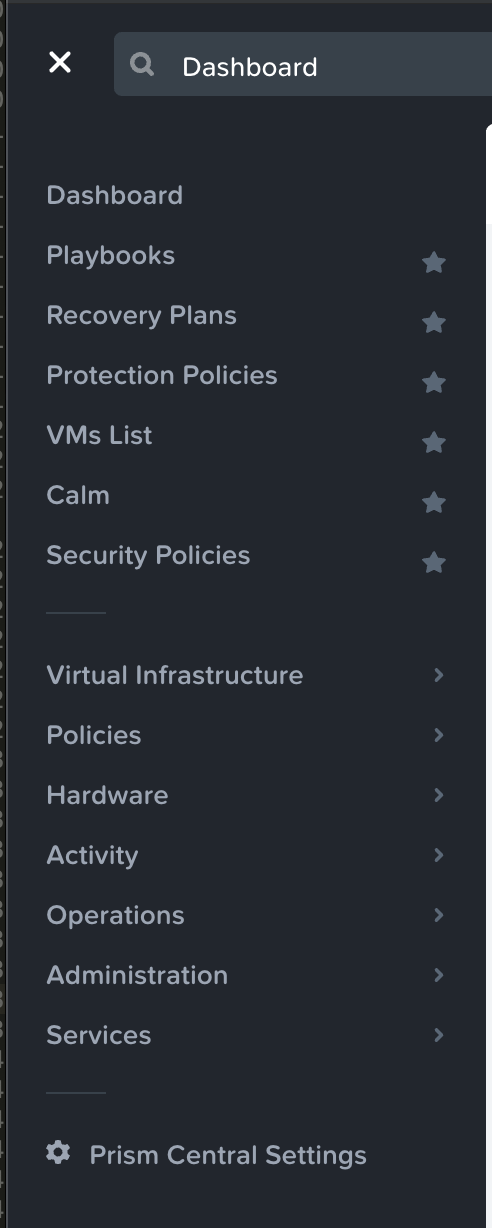

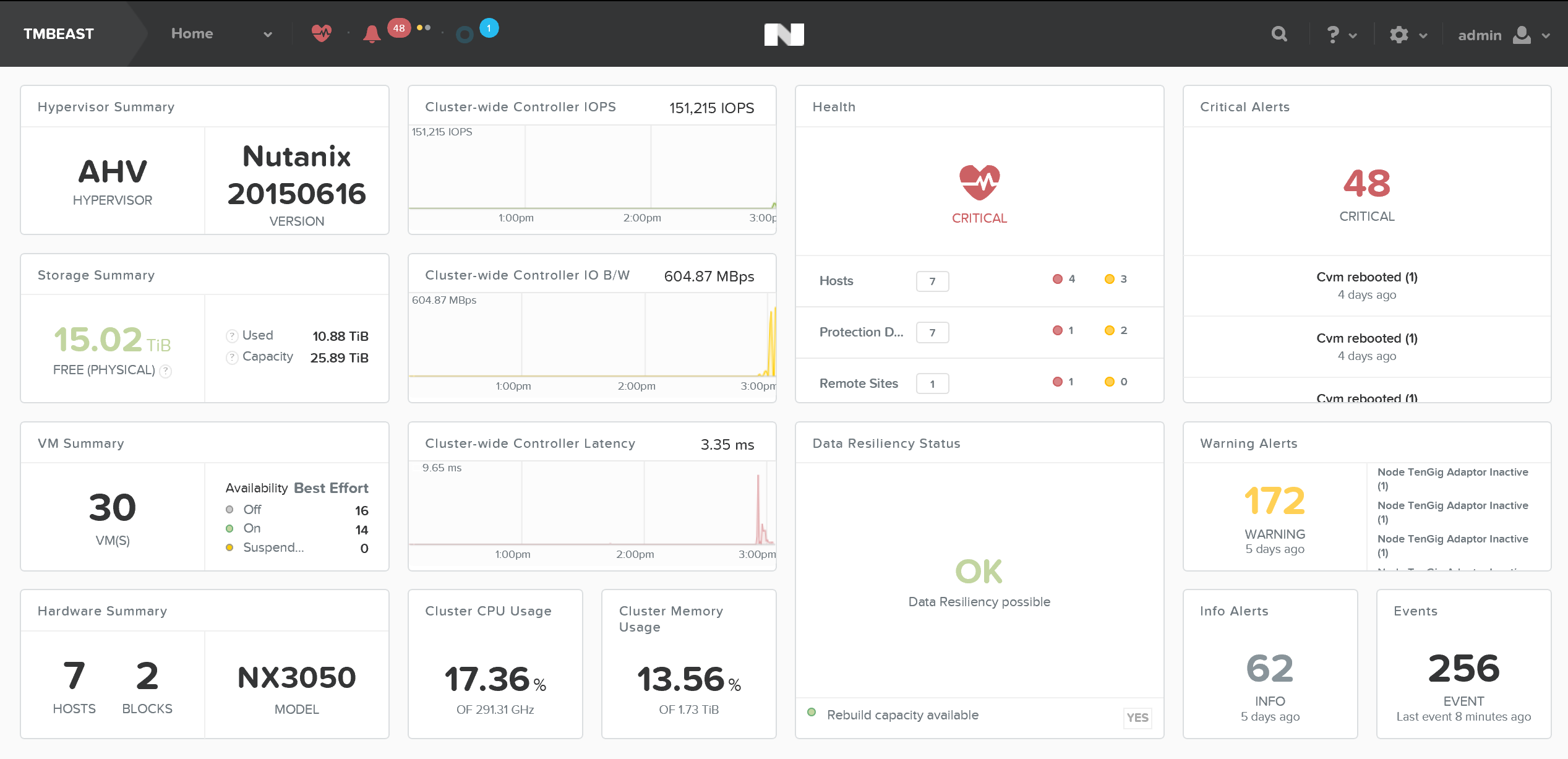

Prism: “The Interface”

Nutanix Platform - Prism

Simply put, we apply some of the design principles fostered by companies like Apple, with a focus on simplicity, consistency and intuitiveness. Since the beginning we’ve invested significant time and effort in the Nutanix product’s “front-end”. Rather than being an afterthought, the UI/UX and design teams have always been pushing the boundaries. Case in point: we were one of the first enterprise software companies (besides the SaaS players) to have the management UI written in HTML5.

Another core item here is the focus on providing a single interface for the platform and keeping the experience consistent throughout that. Our goal is to converge UIs like we’ve converged infrastructure. We want Prism to be a single interface allowing you to manage and consume the Nutanix platform, whether that is managing virtualization in your datacenter, Desktops-as-a-Service in the cloud, or providing spend visibility.

This is important as we continue to expand the platform through feature / service creation and acquisition. Rather than bolting the new capabilities on, we’d rather spend the time to natively integrate them into the platform. It is a slower process, but in the long run it keeps the experience consistent and reduces risk.

Nutanix: The Platform

To summarize, our vision is simple: “One platform to run applications and data anywhere.”

Nutanix Platform - Architecture

This has been our goal from close to the beginning. As a testament to this, below is an image created circa 2014 to talk about the Nutanix platform architecture. As you can see, not much has changed; we just continue expanding and working towards this goal.

Nutanix Platform - Circa 2014

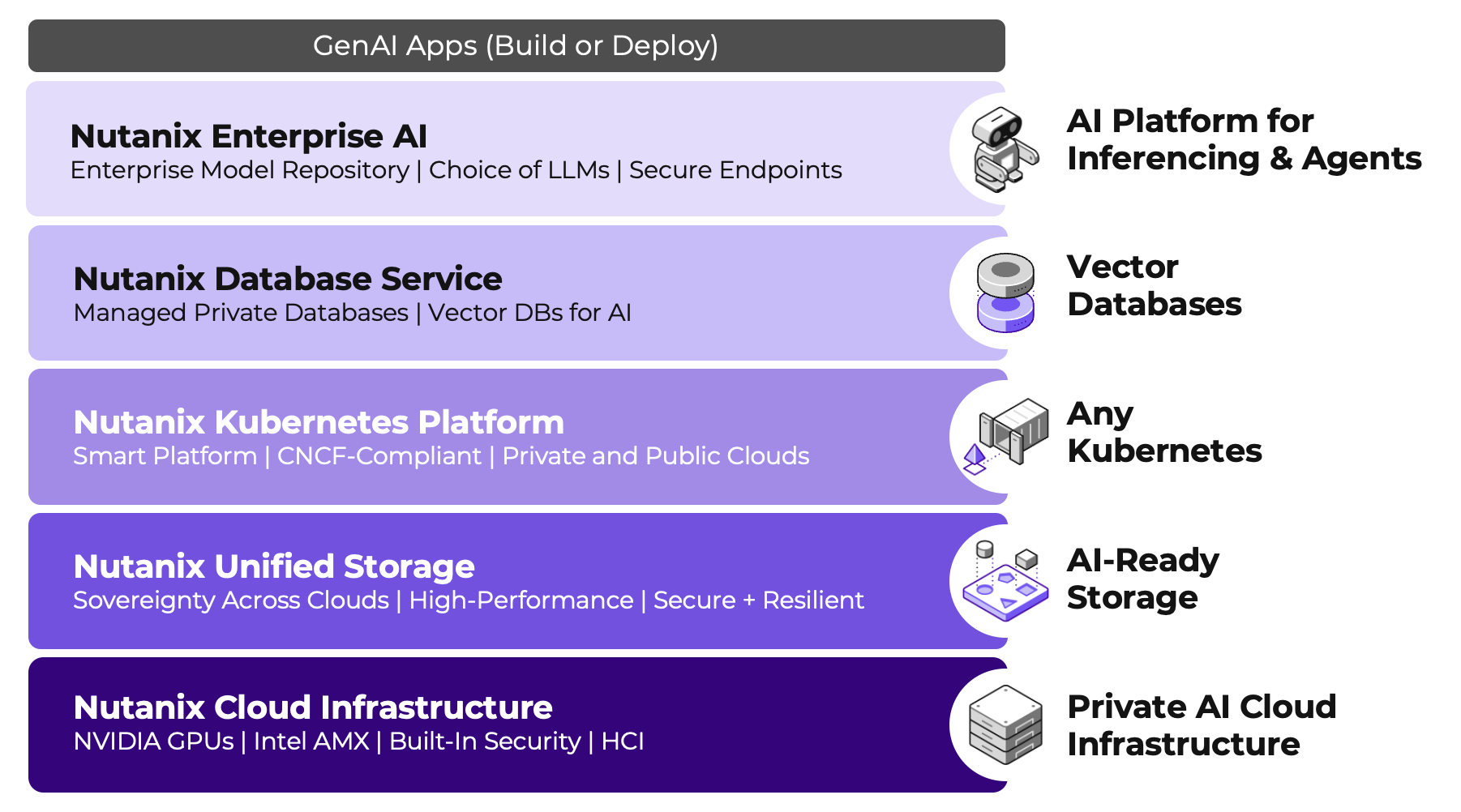

Products

Nutanix pioneered hyperconverged infrastructure (HCI) to break down legacy silos by merging compute, storage, and networking into a single, easy-to-use datacenter platform. Now, as the cloud has emerged as a critical component of IT infrastructure, Nutanix is breaking down silos again—this time between on-premises, cloud, and the edge—to deliver a hybrid multicloud platform.

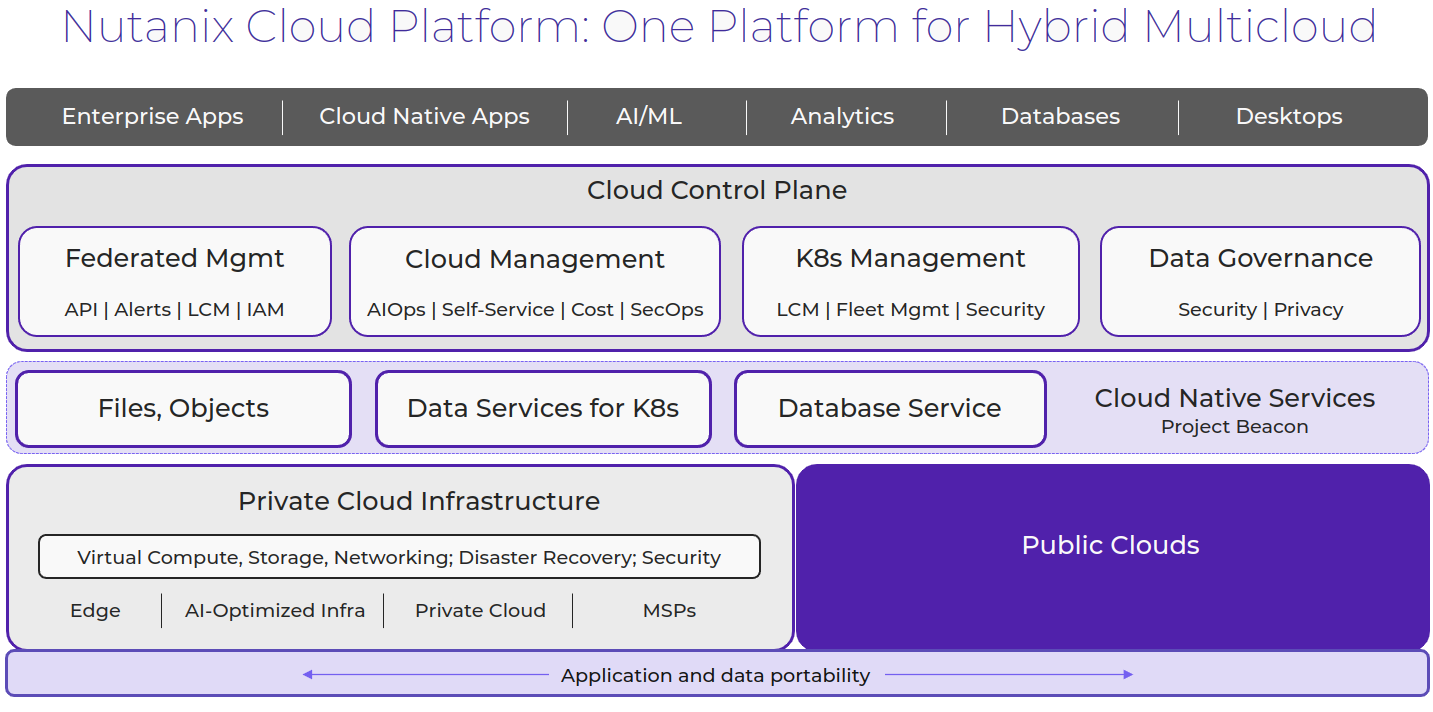

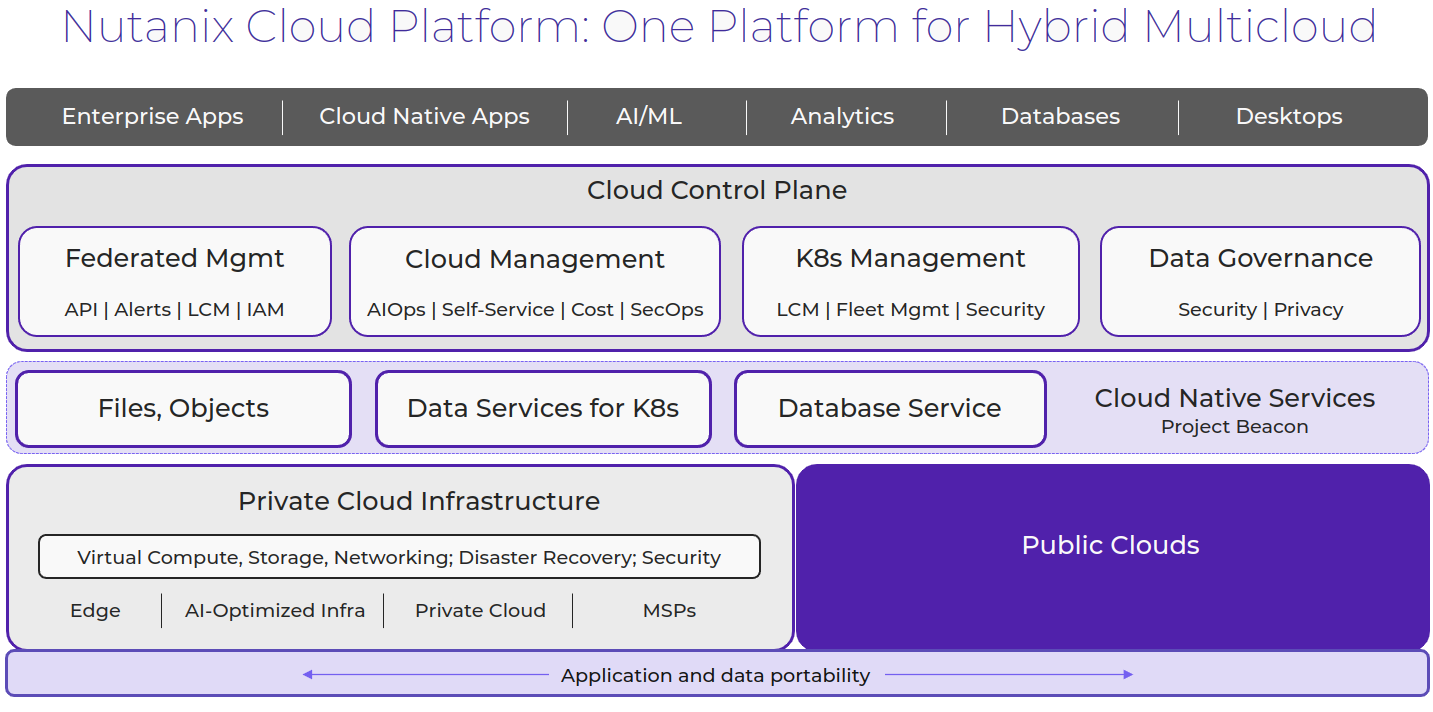

Nutanix Cloud Platform

The Nutanix Cloud Platform is a secure, resilient, and self-healing platform for building your hybrid multicloud infrastructure to support all kinds of workloads and use cases across public and private clouds, multiple hypervisors and containers, with varied compute, storage, and network requirements.

Nutanix Cloud Platform

The building blocks of Nutanix Cloud Platform are Nutanix Cloud Infrastructure and Nutanix Cloud Manager. These are the products that fall under each of them to form a complete solution.

Nutanix Cloud Infrastructure (NCI)

- AOS Scale-Out Storage

  - This is the core of Nutanix Cloud Platform and provides a distributed, performant, and resilient storage platform that scales linearly.

- AHV Hypervisor

  - Native enterprise class virtualization, management and monitoring capabilities are provided by AHV. The Nutanix Cloud Platform also supports ESXi and Hyper-V.

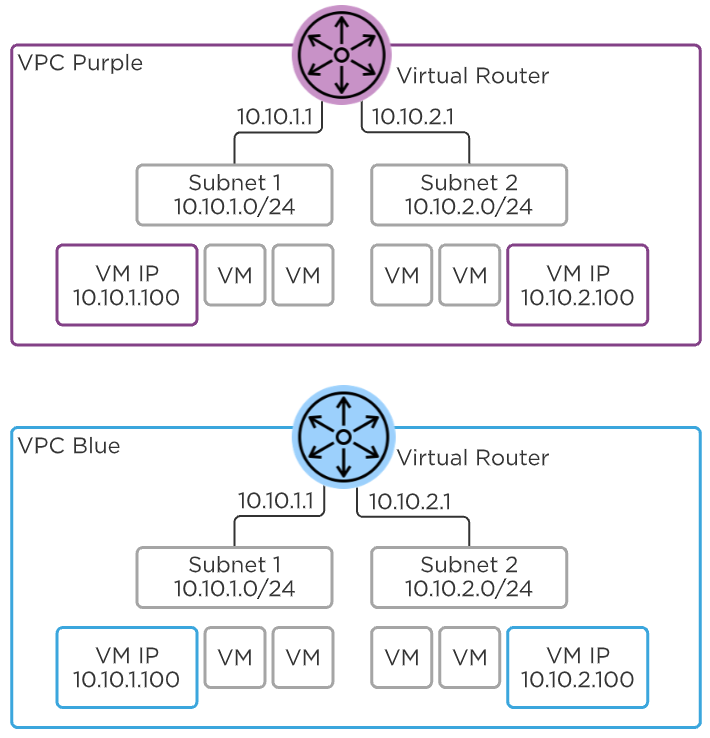

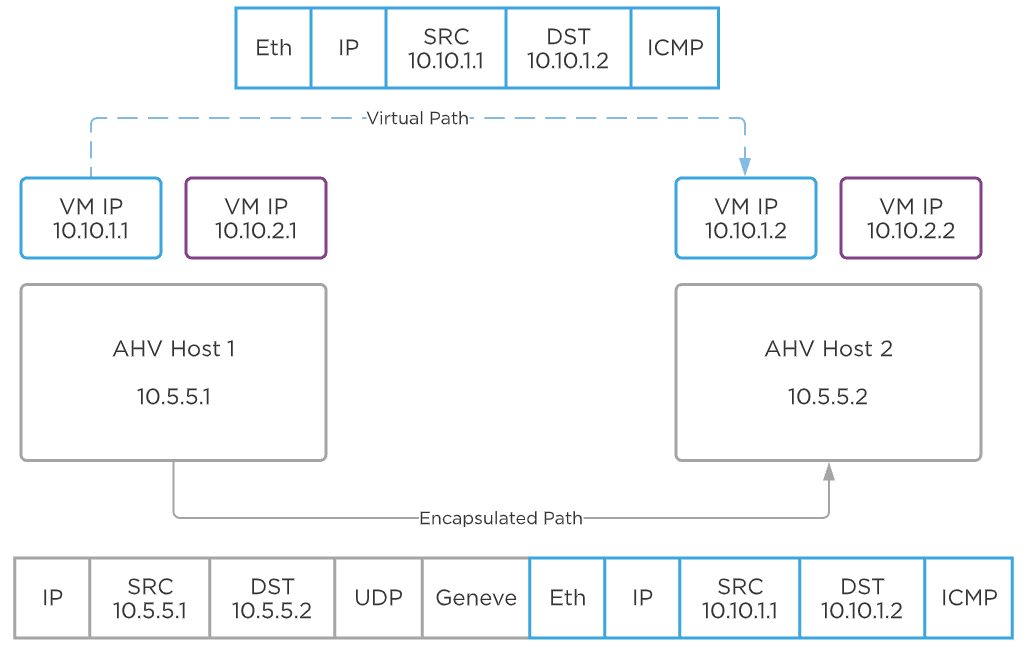

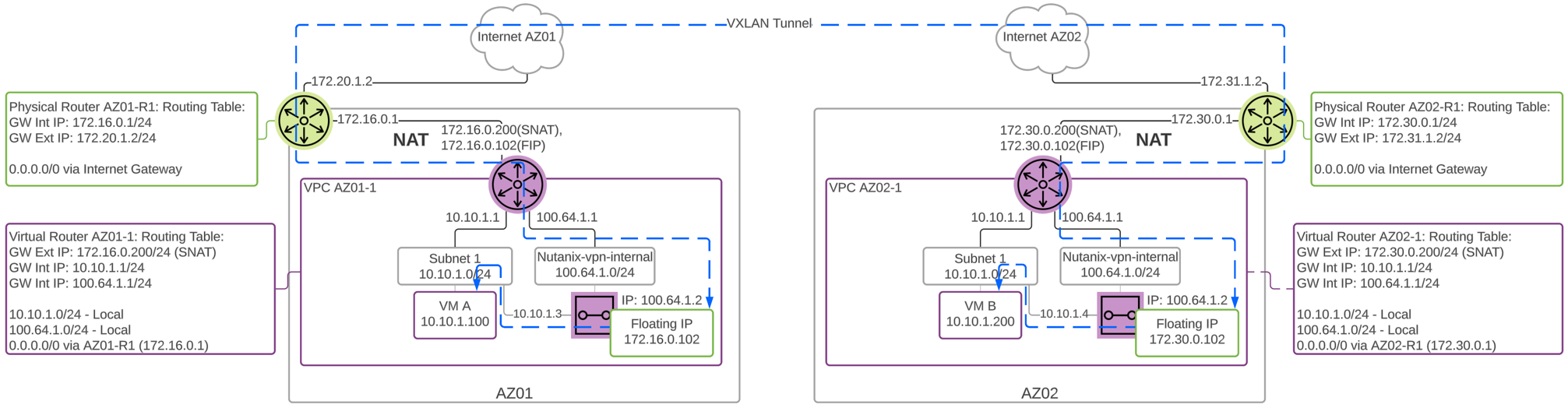

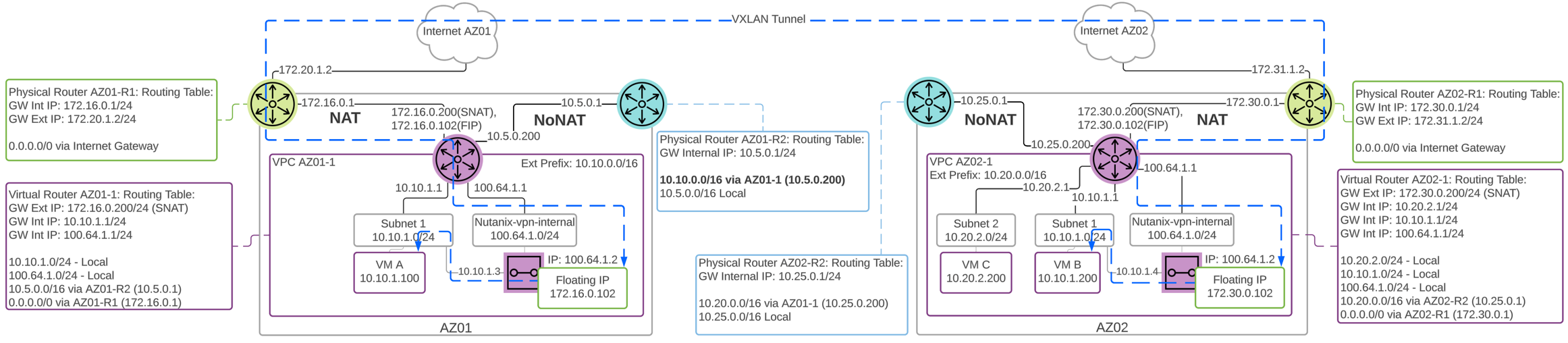

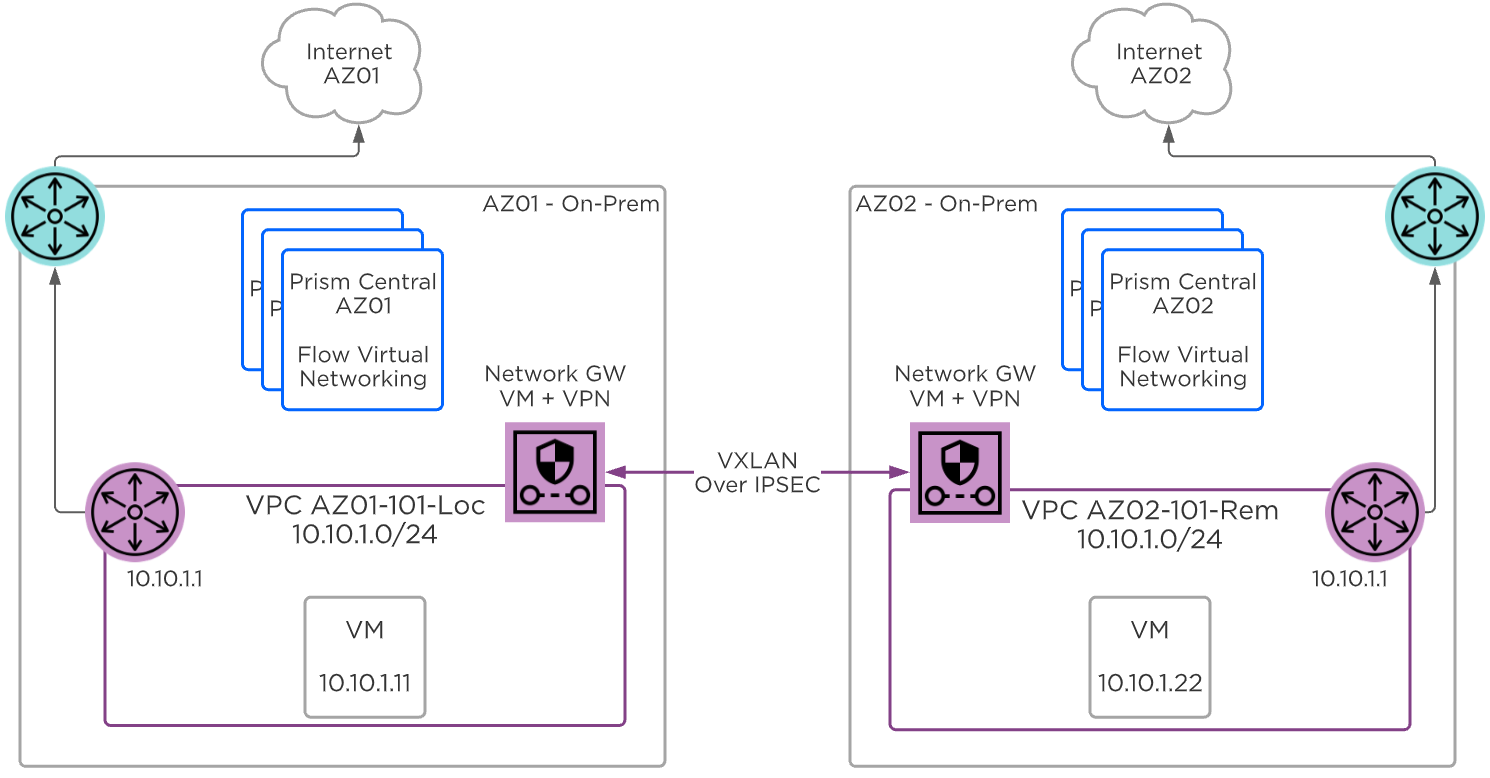

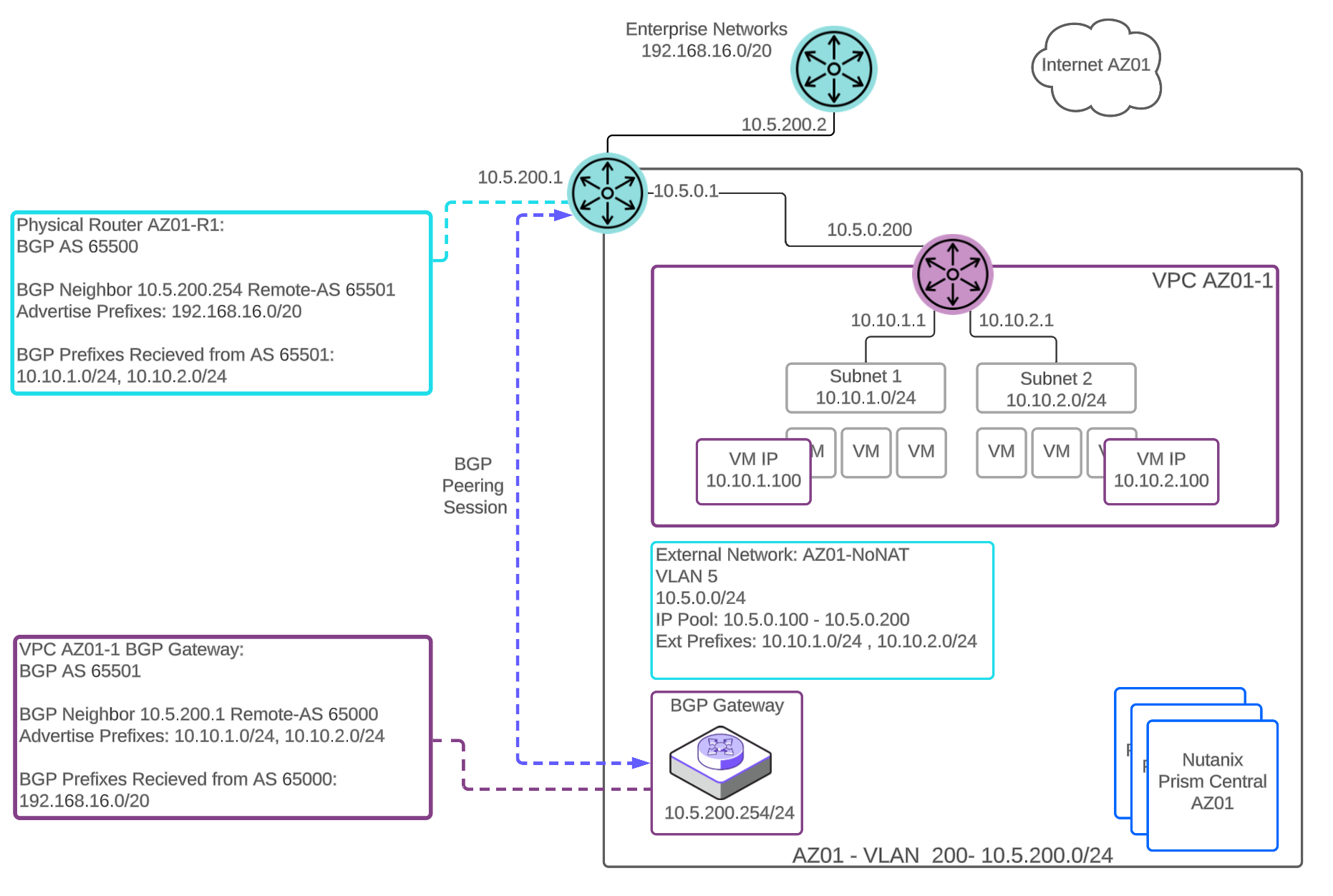

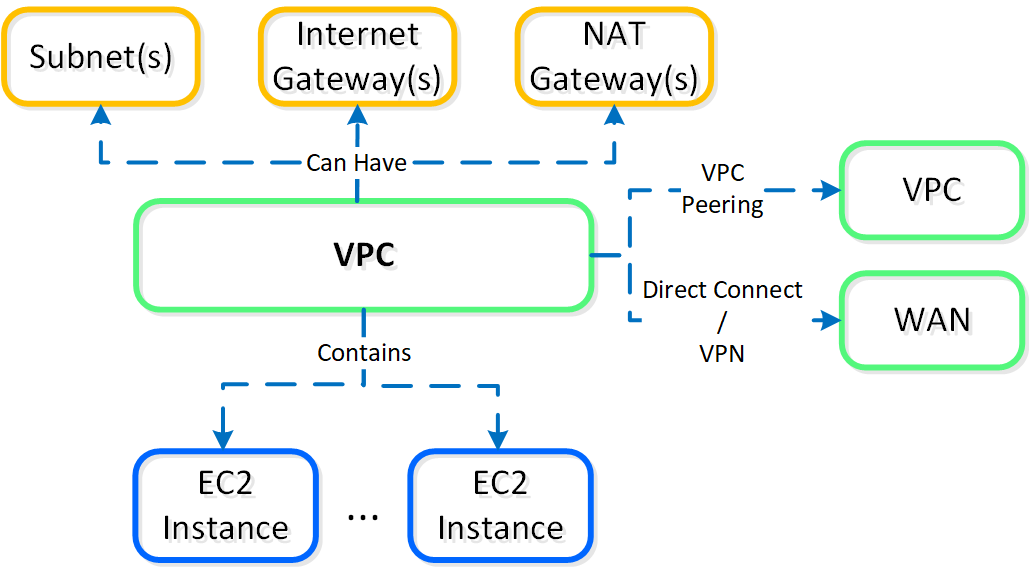

- Virtual Networking

  - AHV comes with standard VLAN-backed virtual networking. You can also enable Flow Virtual Networking to provide virtual private cloud (VPC) and other advanced networking constructs in AHV for enhanced isolation, automation, and multi-tenancy.

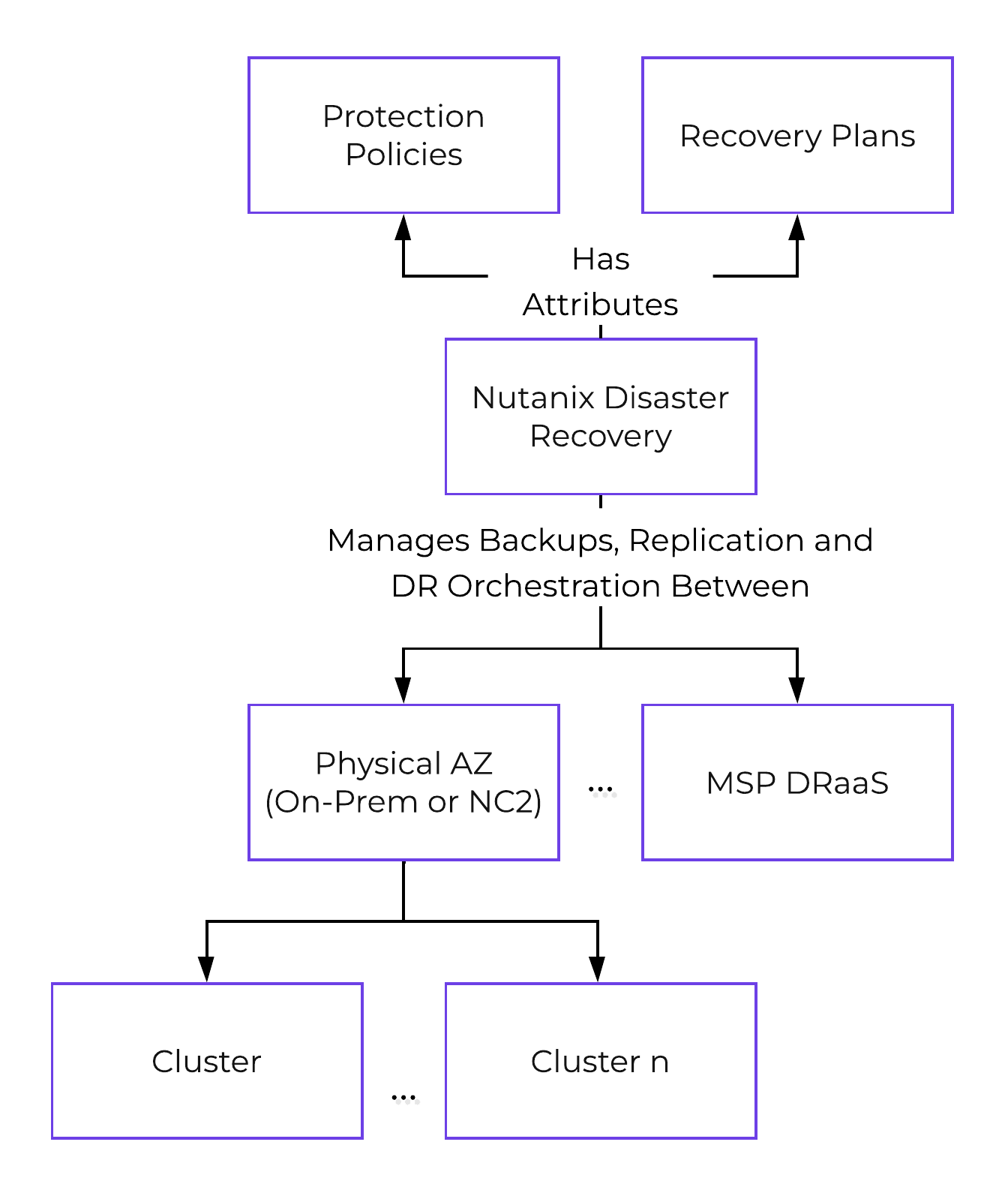

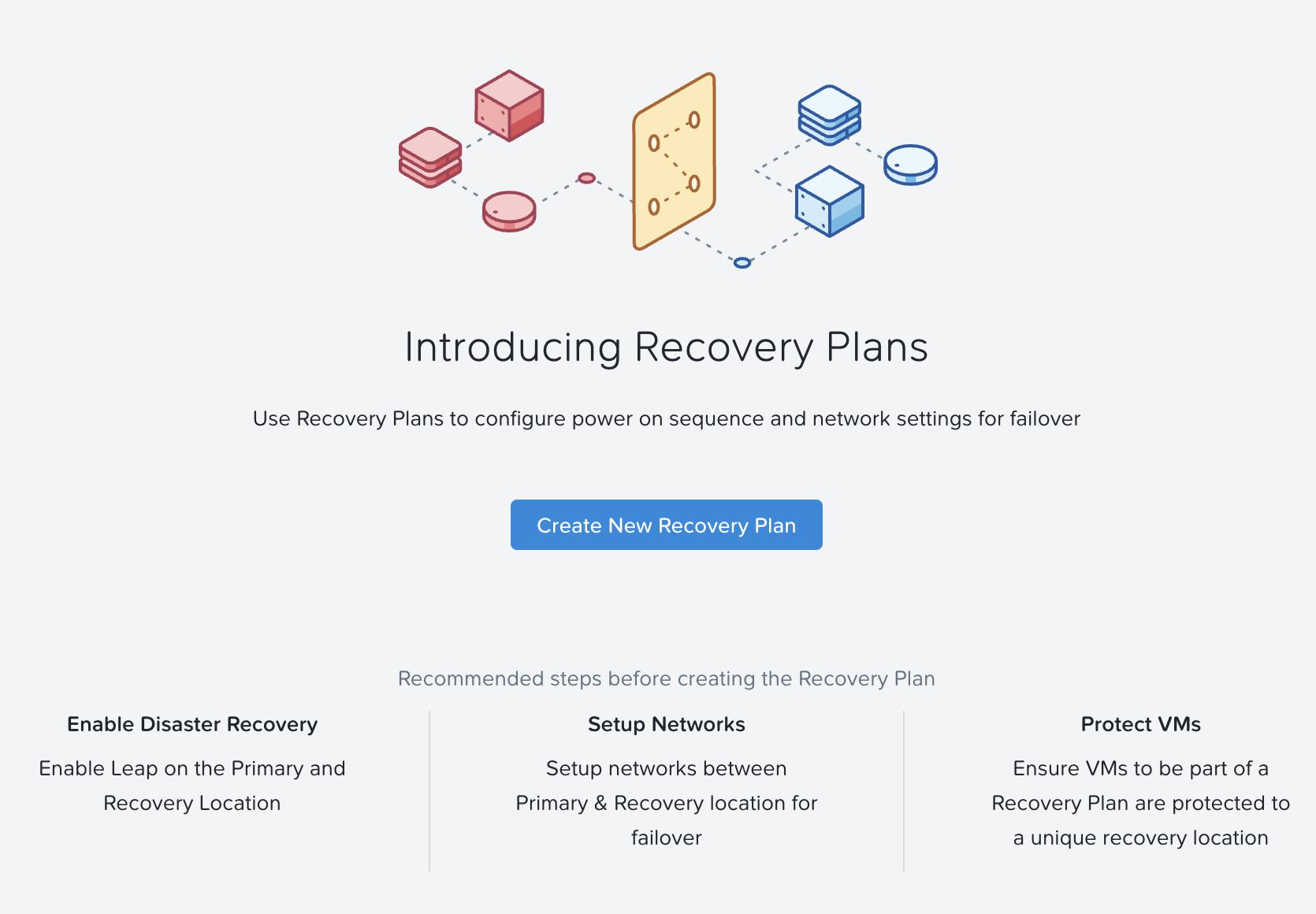

- Disaster Recovery

  - Integrated disaster recovery that is simple to deploy and easy to manage, providing flexible RPO and RTO options on-prem and in the cloud.

- Container Services

  - Nutanix Cloud Platform provides compute and data for container services like OpenShift and provides an enterprise Kubernetes management solution (Nutanix Kubernetes Engine) to deliver and manage an end-to-end, production-ready Kubernetes environment.

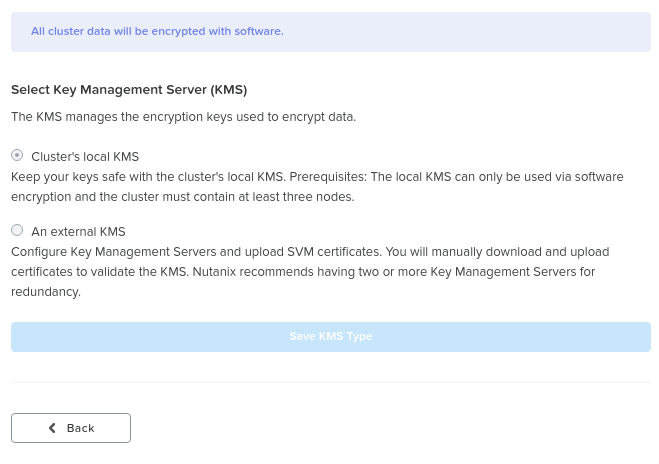

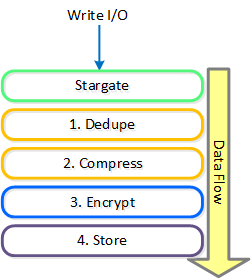

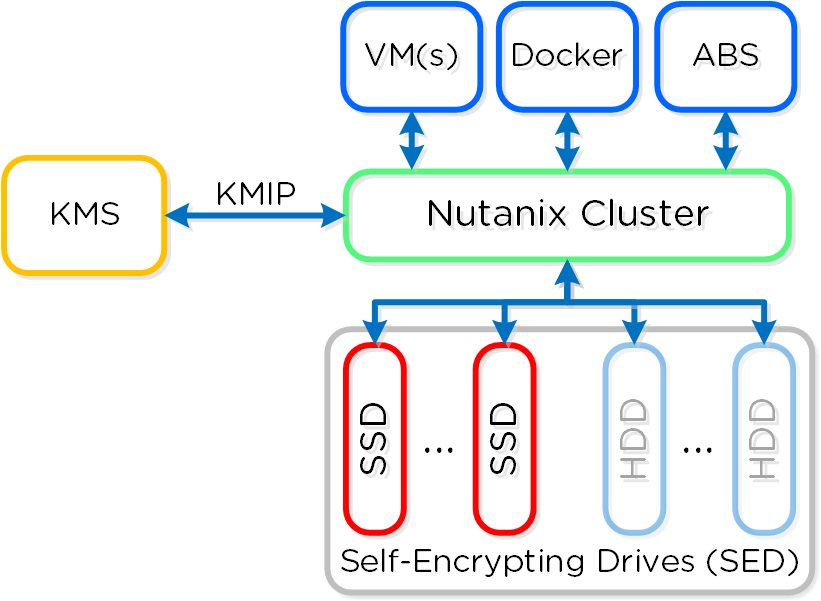

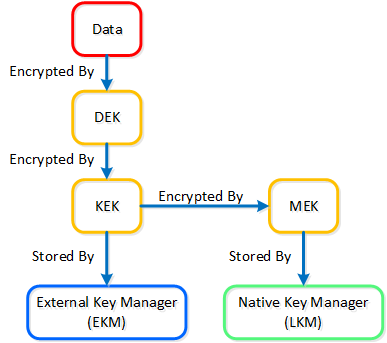

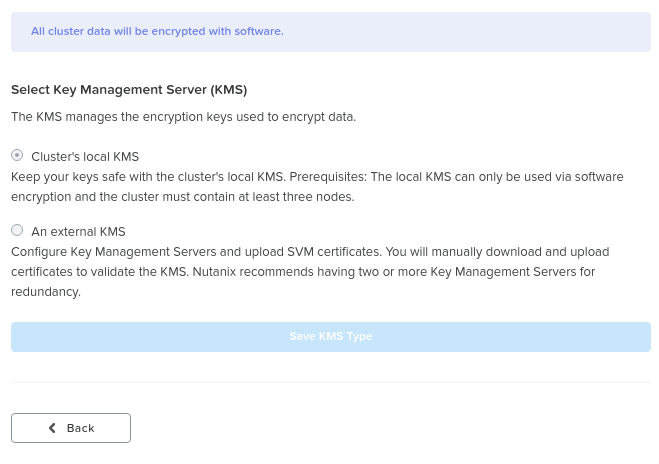

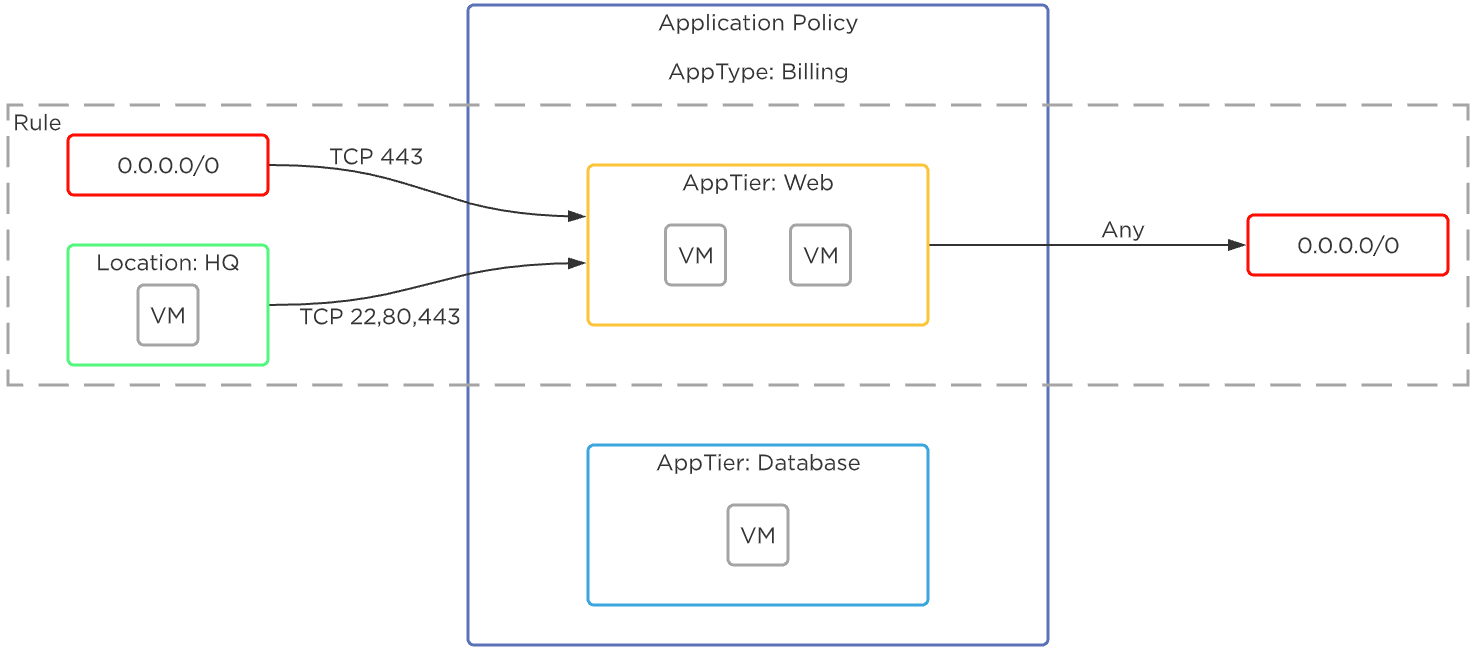

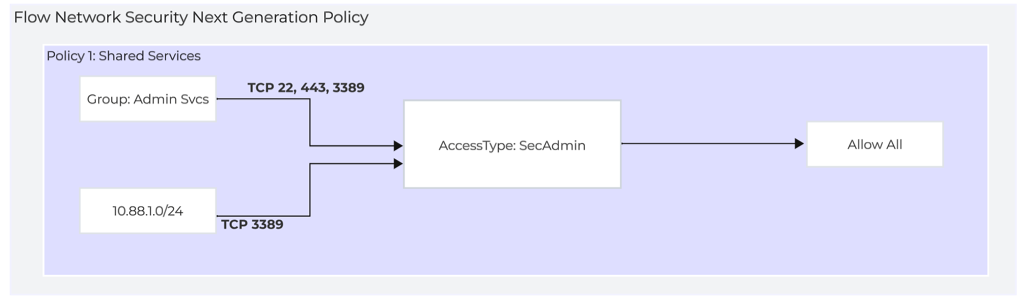

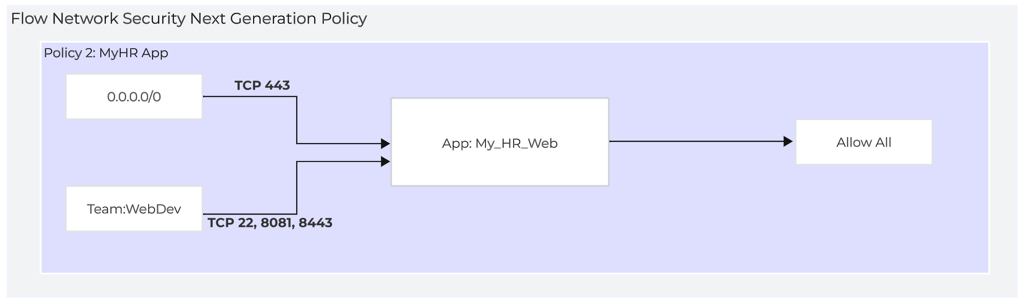

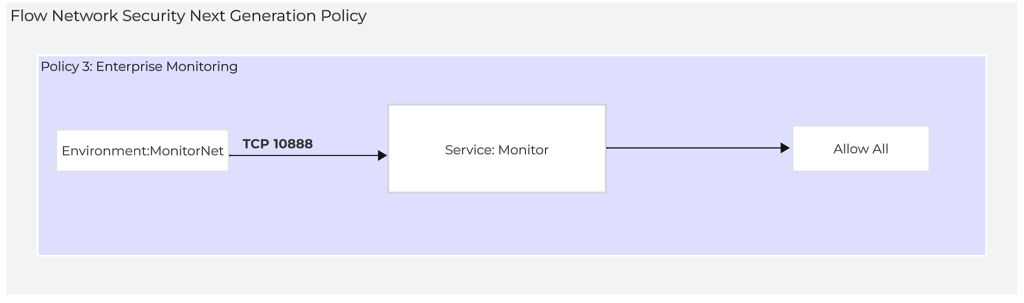

- Data and Network Security

  - Comprehensive security for data using encryption with a built-in local key manager and software-based firewalls for network and applications with Flow Network Security.

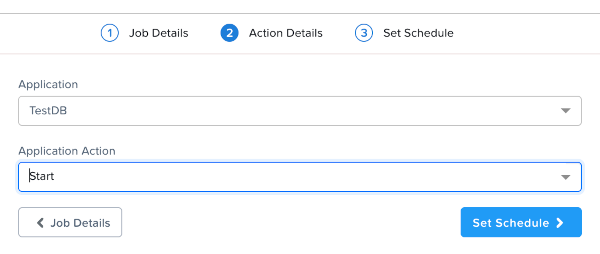

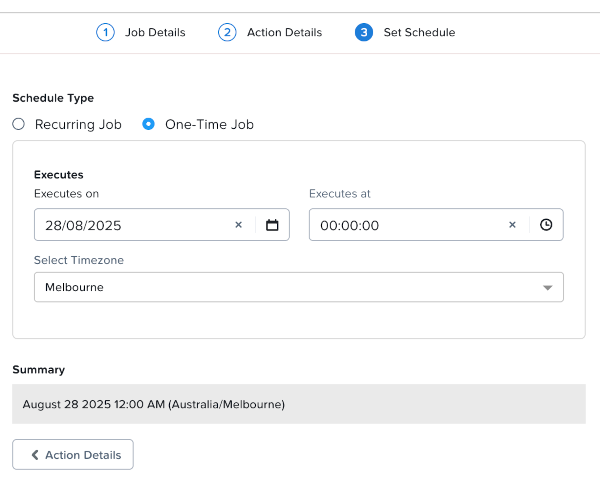

Nutanix Cloud Manager (NCM)

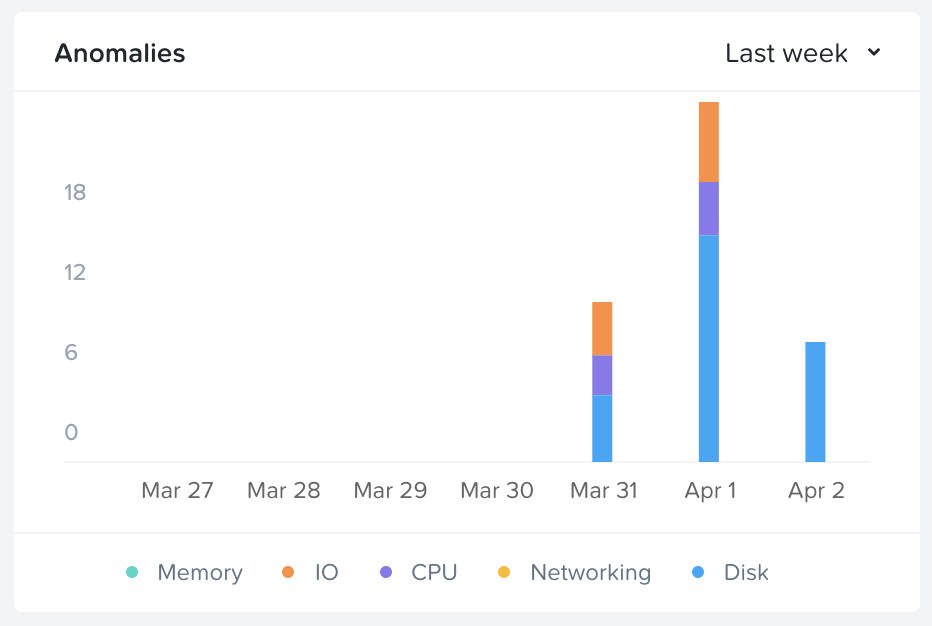

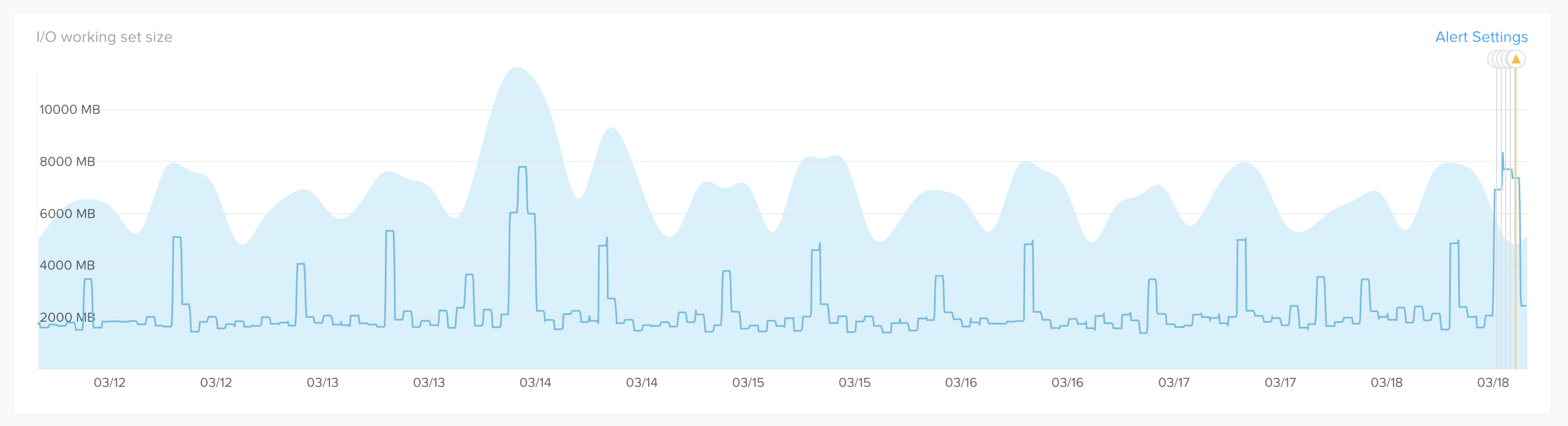

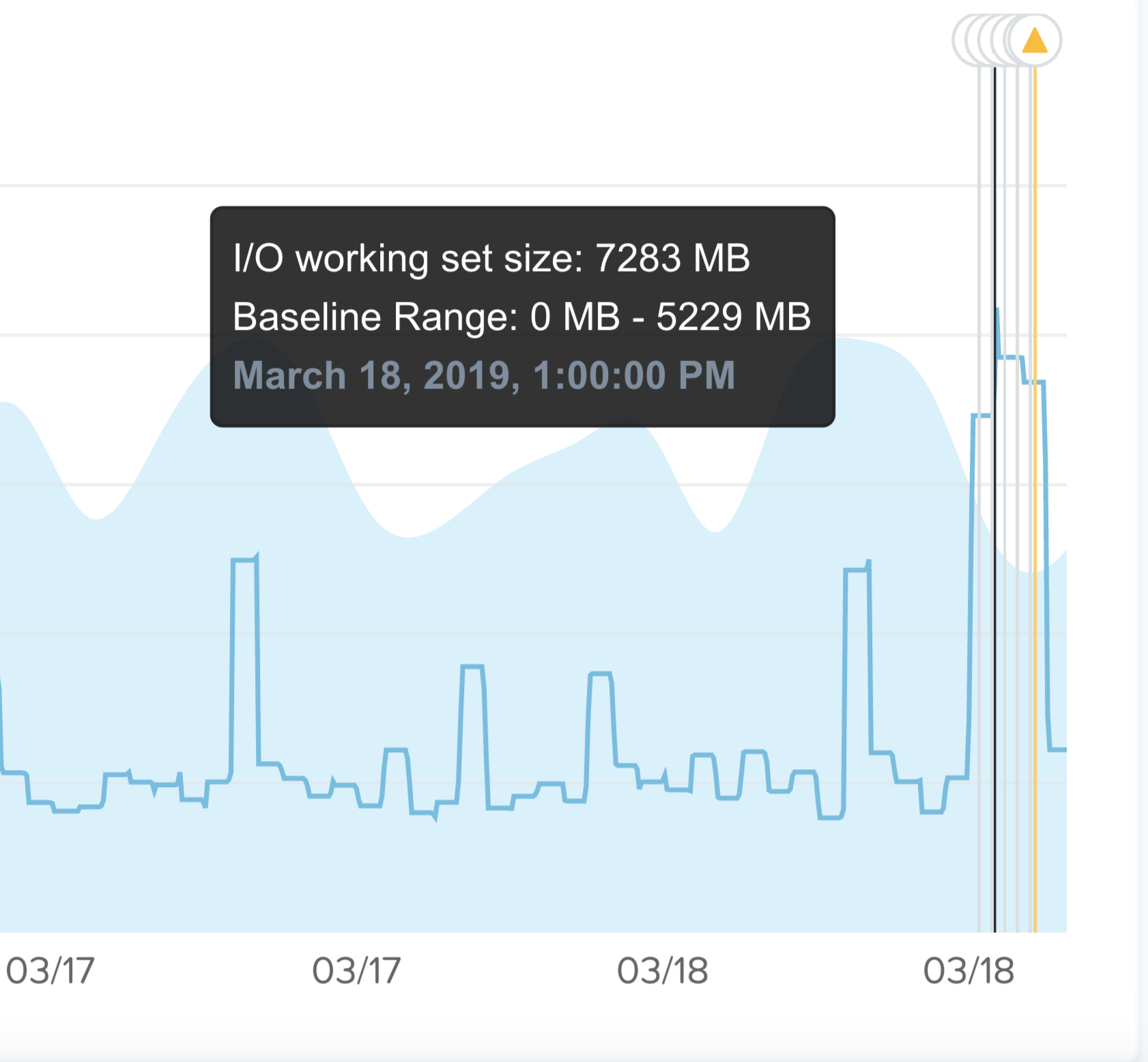

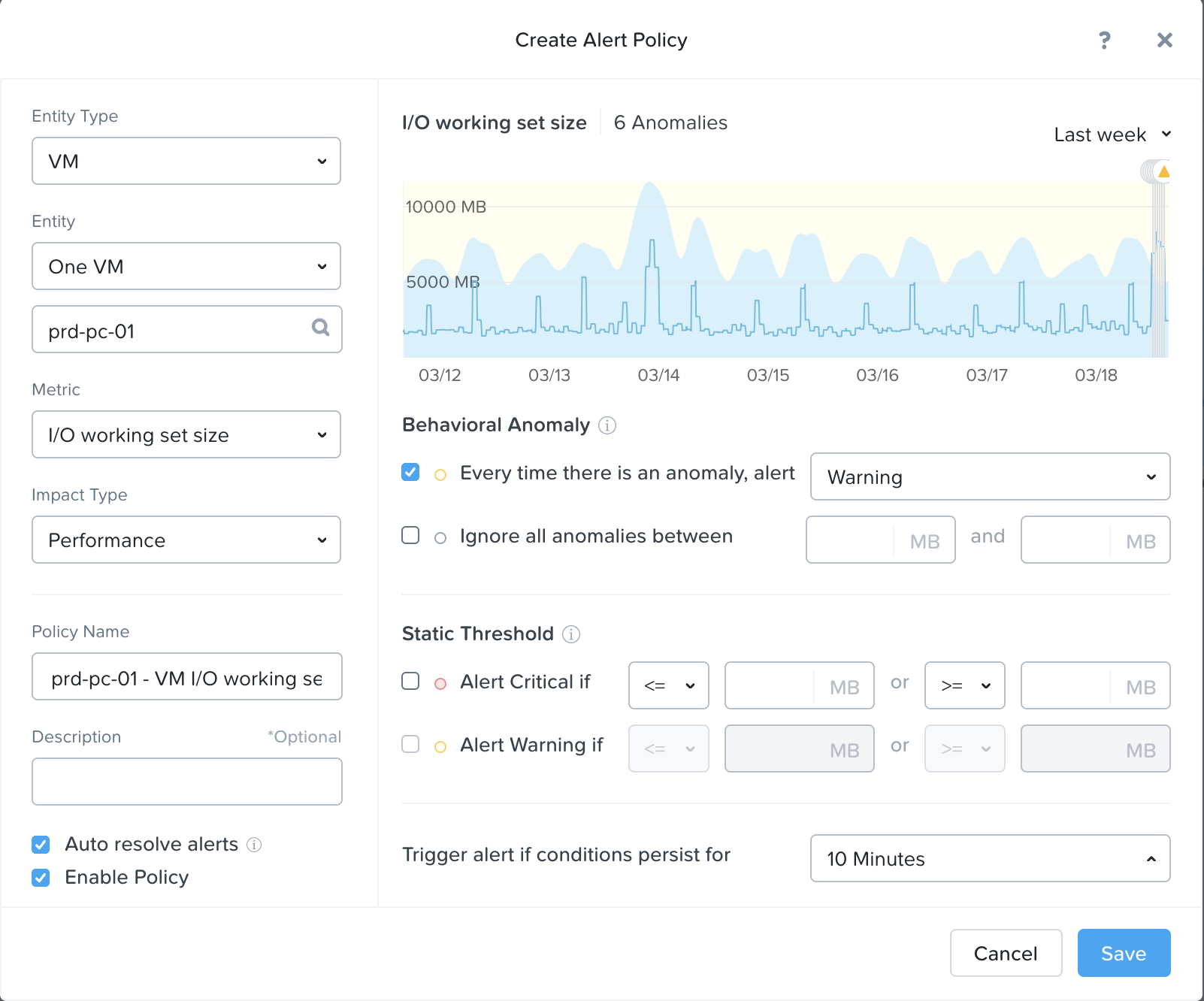

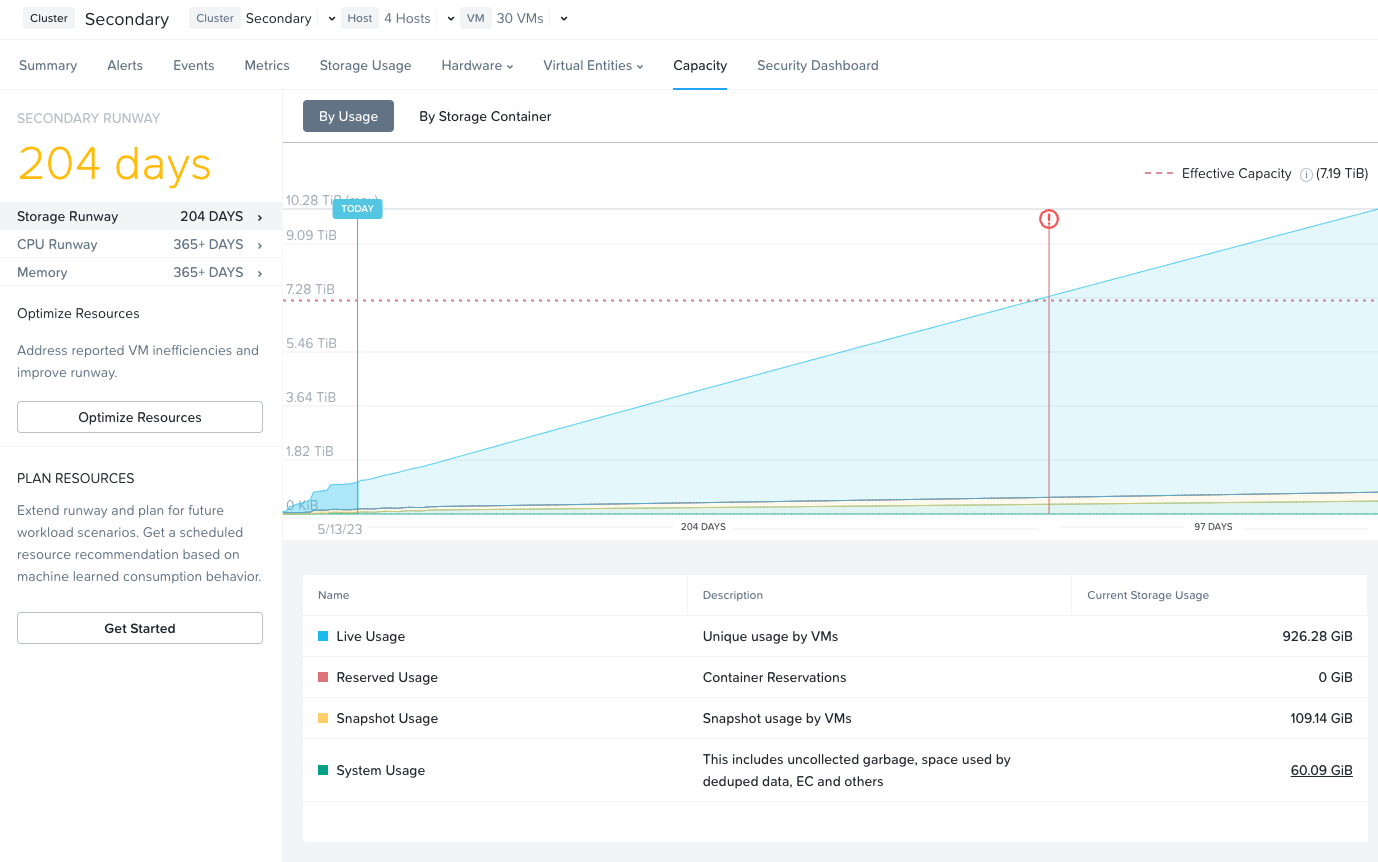

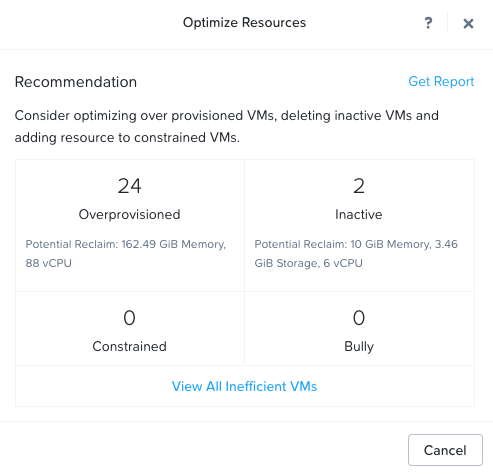

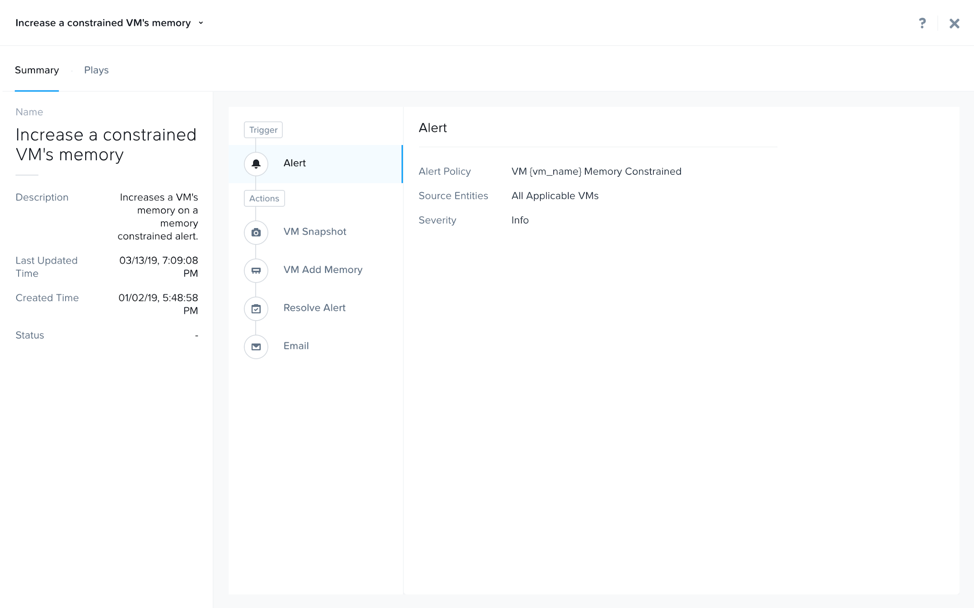

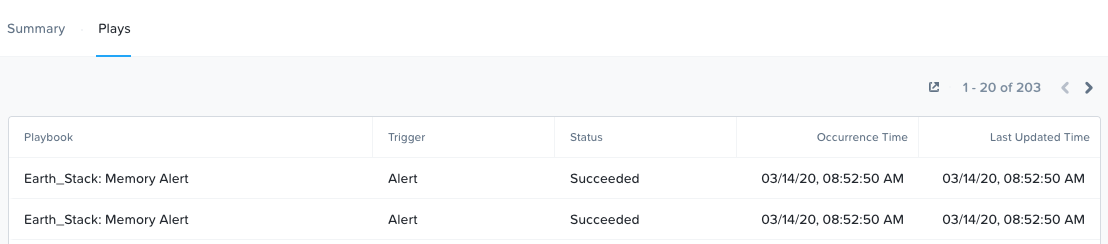

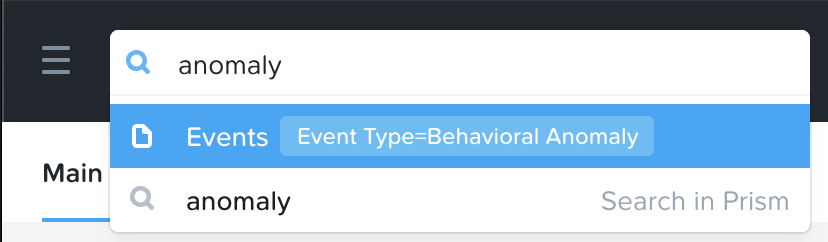

- AI Operations (NCM Intelligent Operations)

  - Optimizes capacity, proactively detects performance anomalies and provides the capability to automate operational tasks that can streamline infrastructure management.

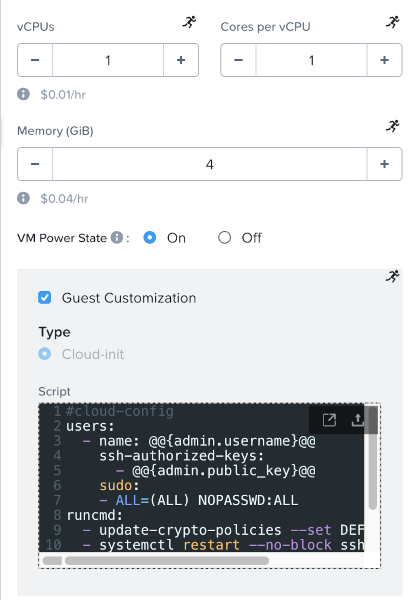

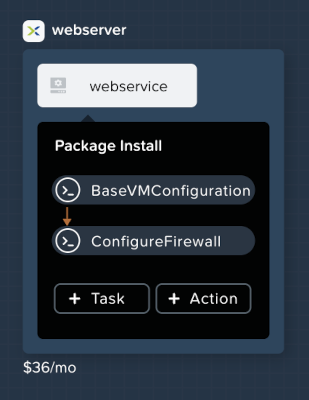

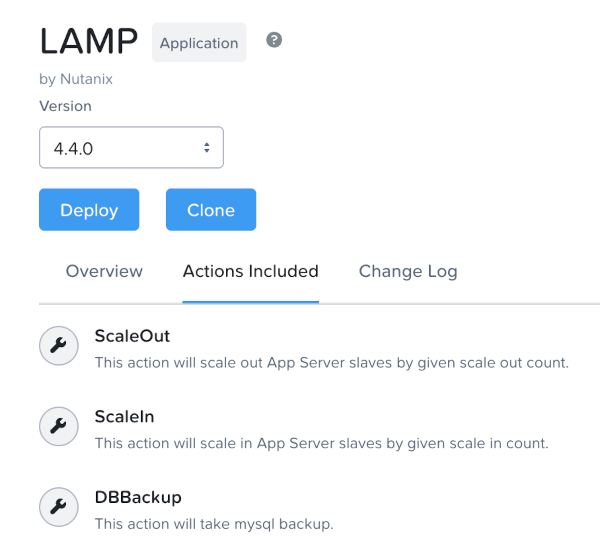

- Self-Service Infrastructure/App Lifecycle Management (NCM Self-Service)

  - Orchestration with the ability to manage, deploy and scale applications across hybrid clouds with self-service, automation, and centralized role-based governance.

- Cost Governance

  - Drive financial accountability with intelligent resource sizing and accurate visibility into cloud metering and chargeback.

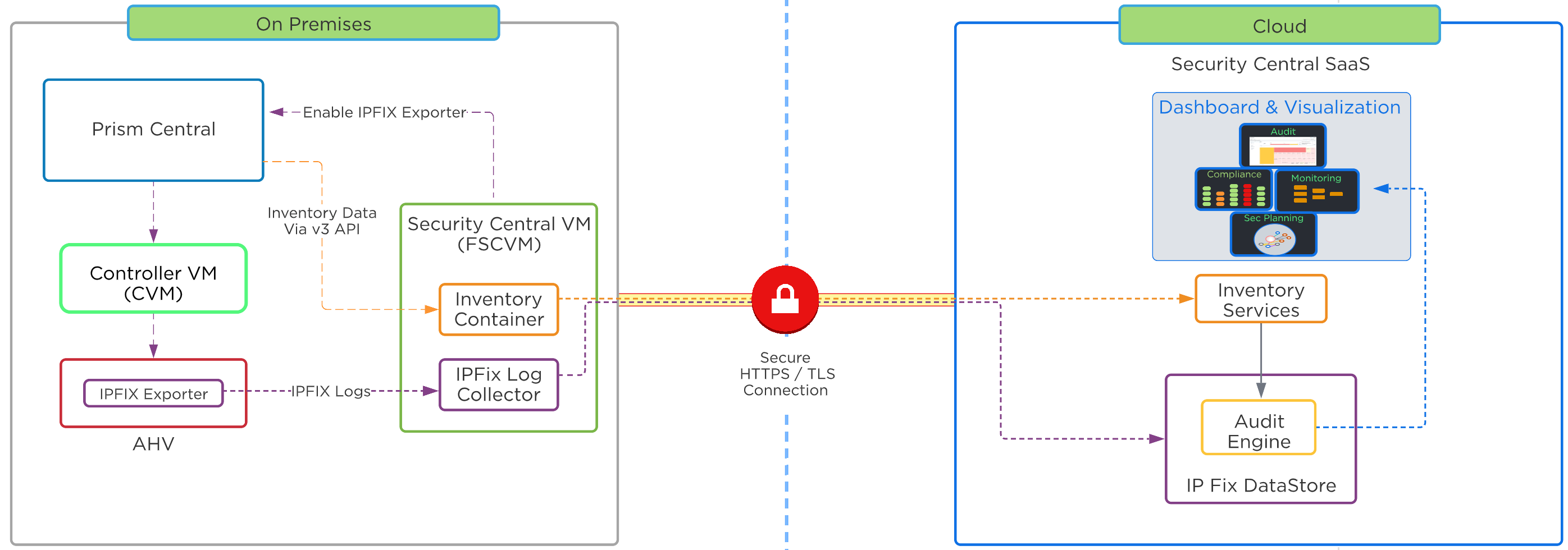

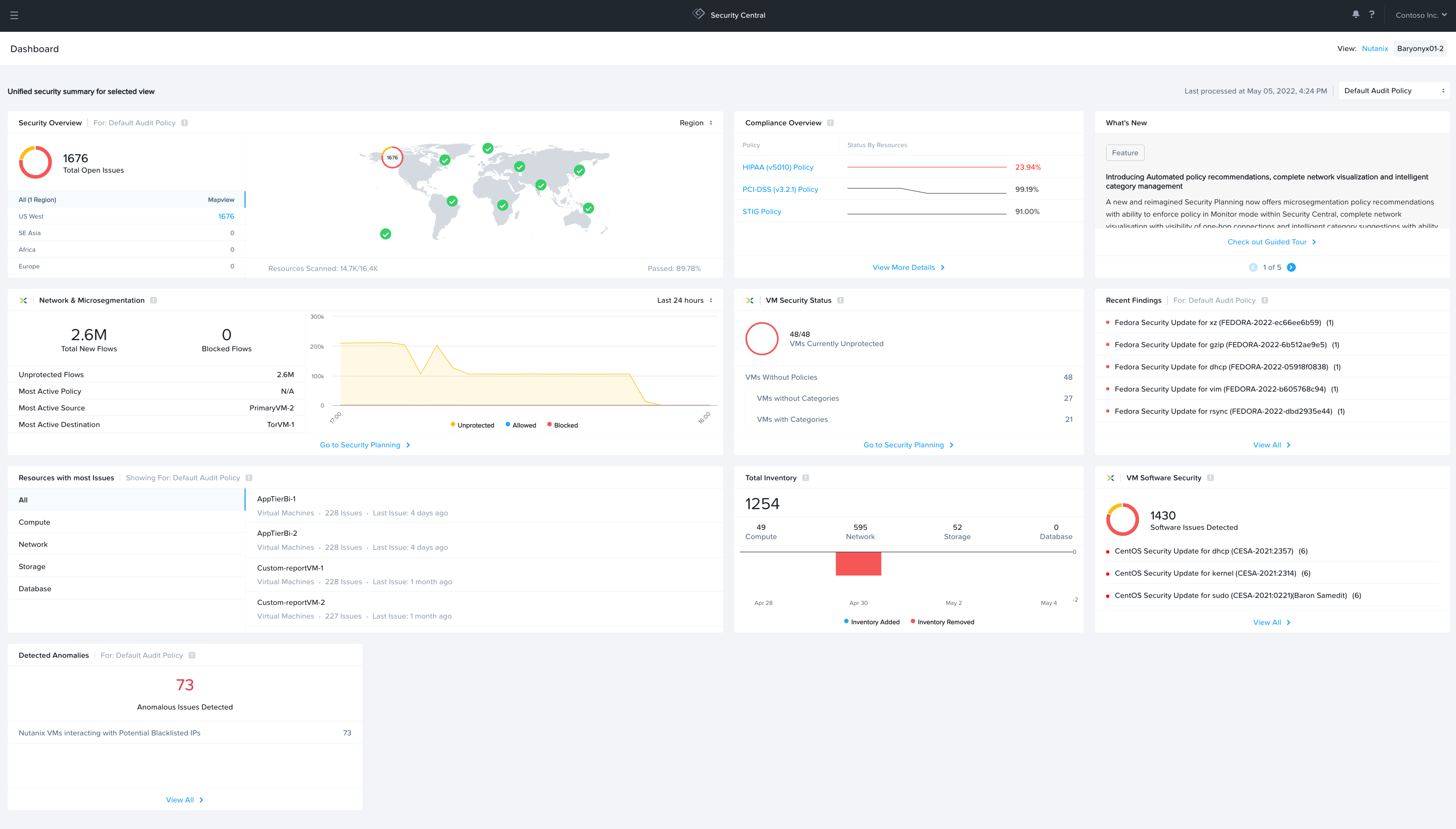

- Security Central

  - Security dashboard that unifies cloud security operations, identifies workload vulnerabilities, manages microsegmentation, and adheres to regulatory compliance requirements for strategic initiatives like Zero Trust.

In addition to NCI and NCM, the Nutanix Cloud Platform also provides the following services.

Nutanix Unified Storage Services

- Files Storage

  - Simple, secure, software-defined scale-out file storage and management

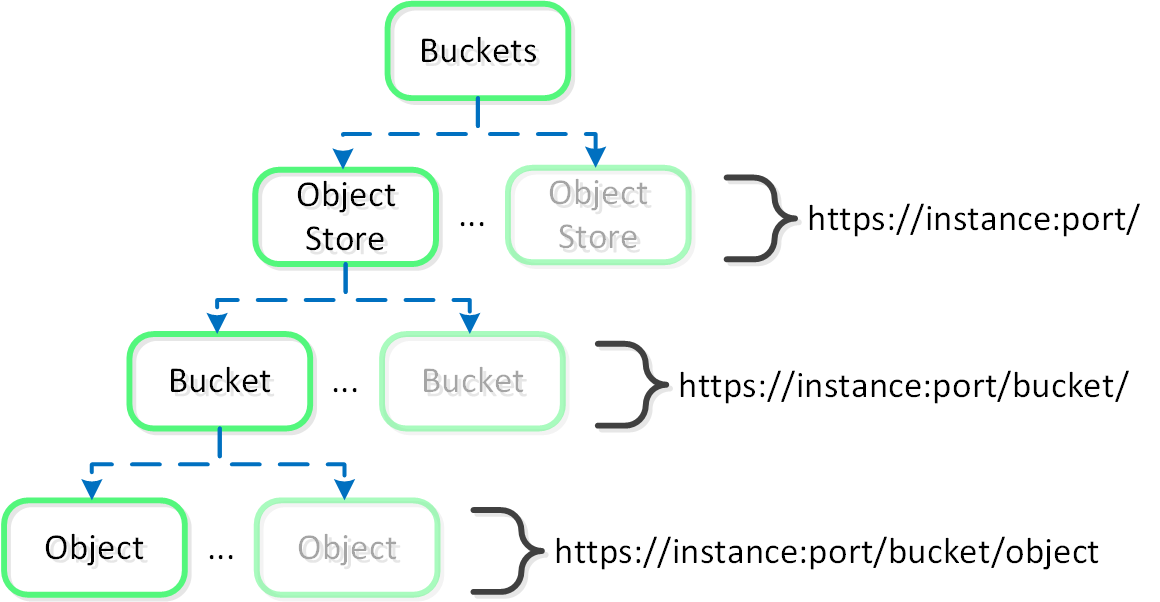

- Objects Storage

  - Simple, secure, scale-out S3-compatible object storage at massive scale

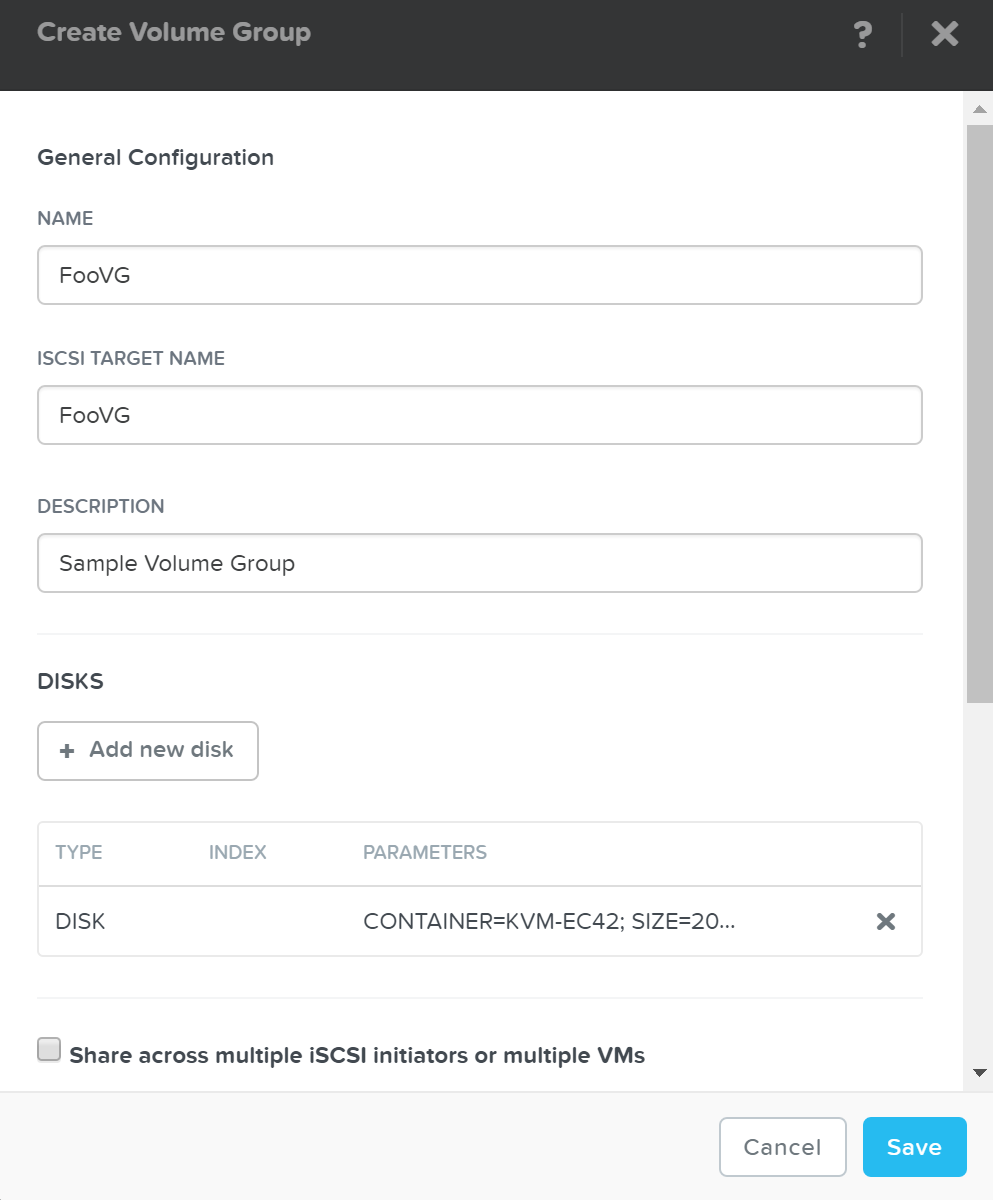

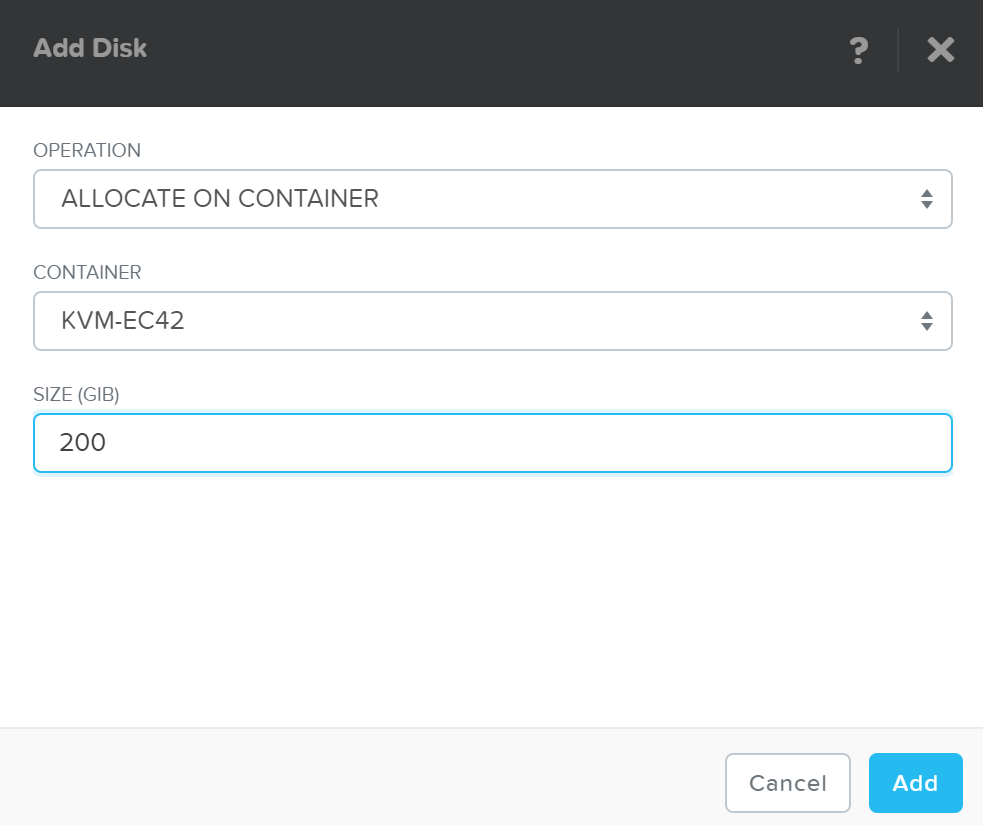

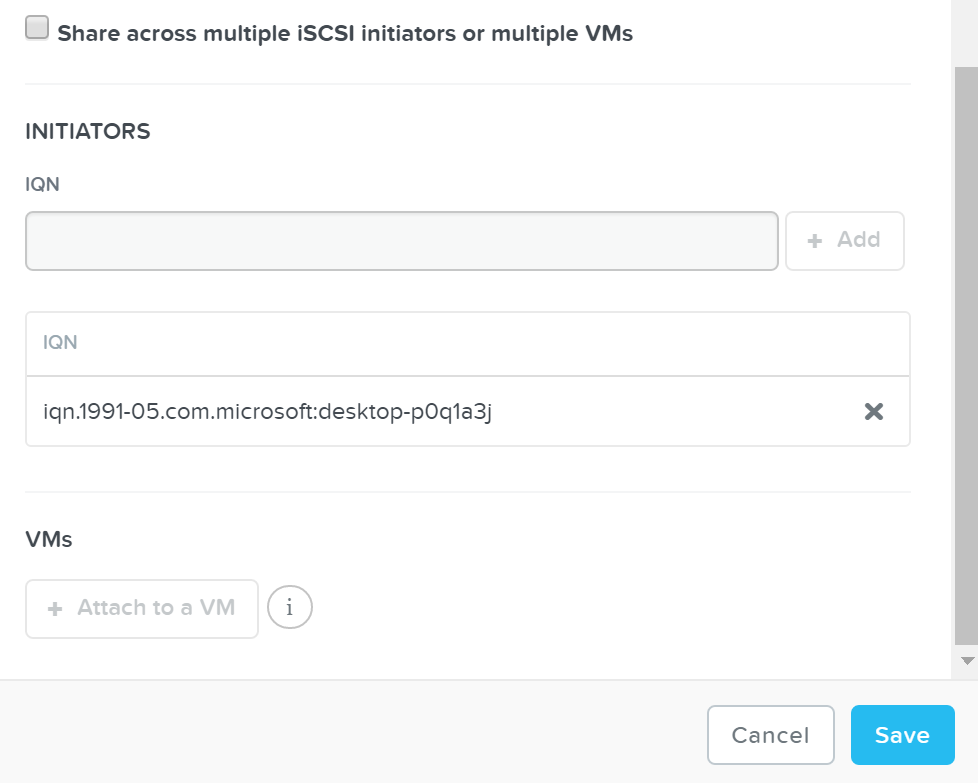

- Volumes Block Storage

  - Enterprise class scale-out block storage that exposes storage resources directly to virtualized guest operating systems or physical hosts

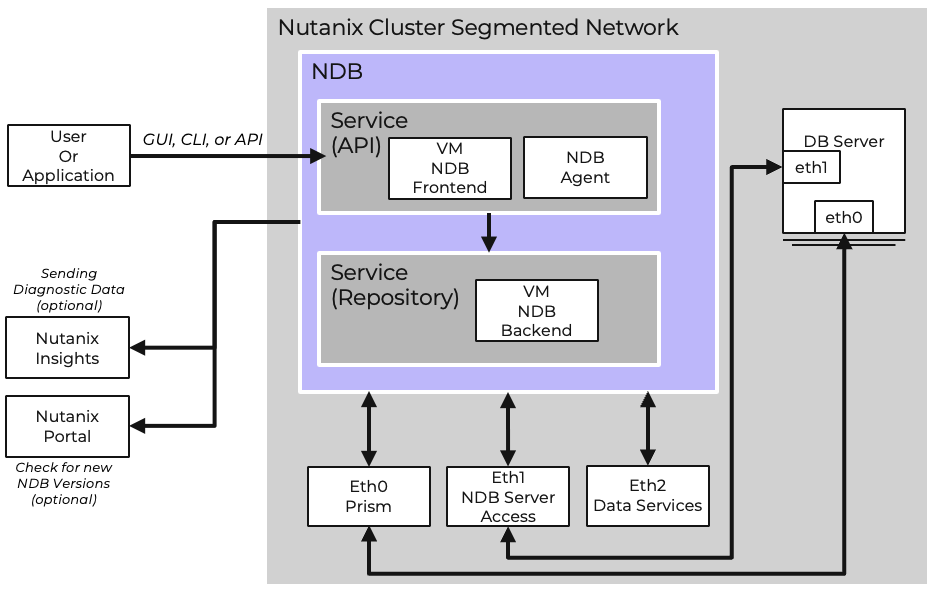

Nutanix Database Service

Simplified and automated database lifecycle management across hybrid clouds

Desktop Services

Deliver virtual desktops and applications on any cloud at scale.

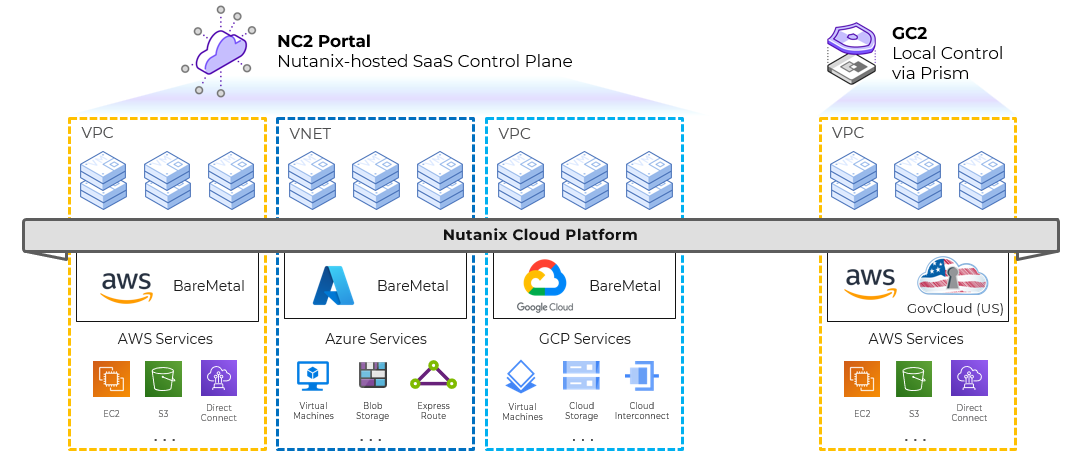

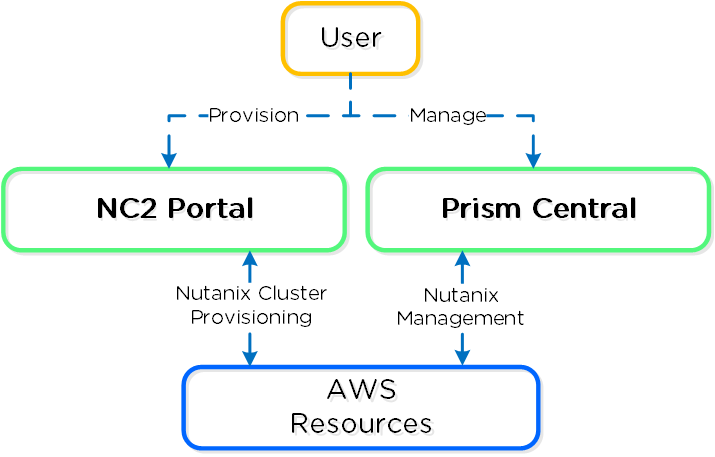

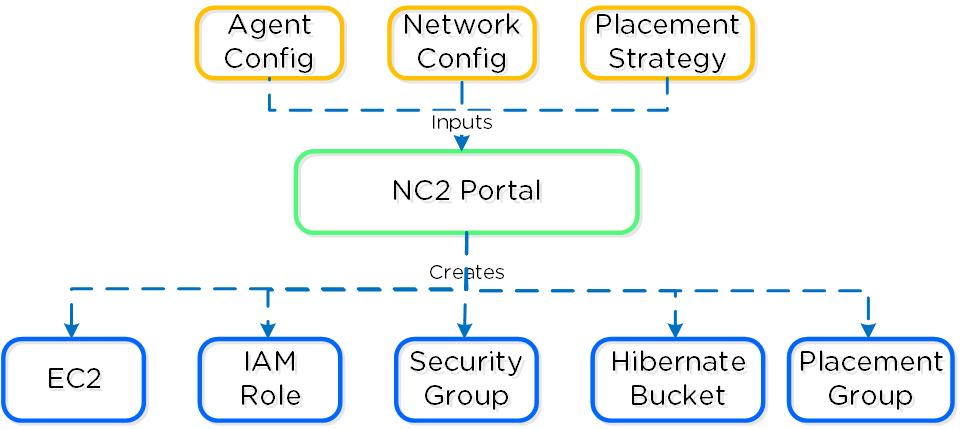

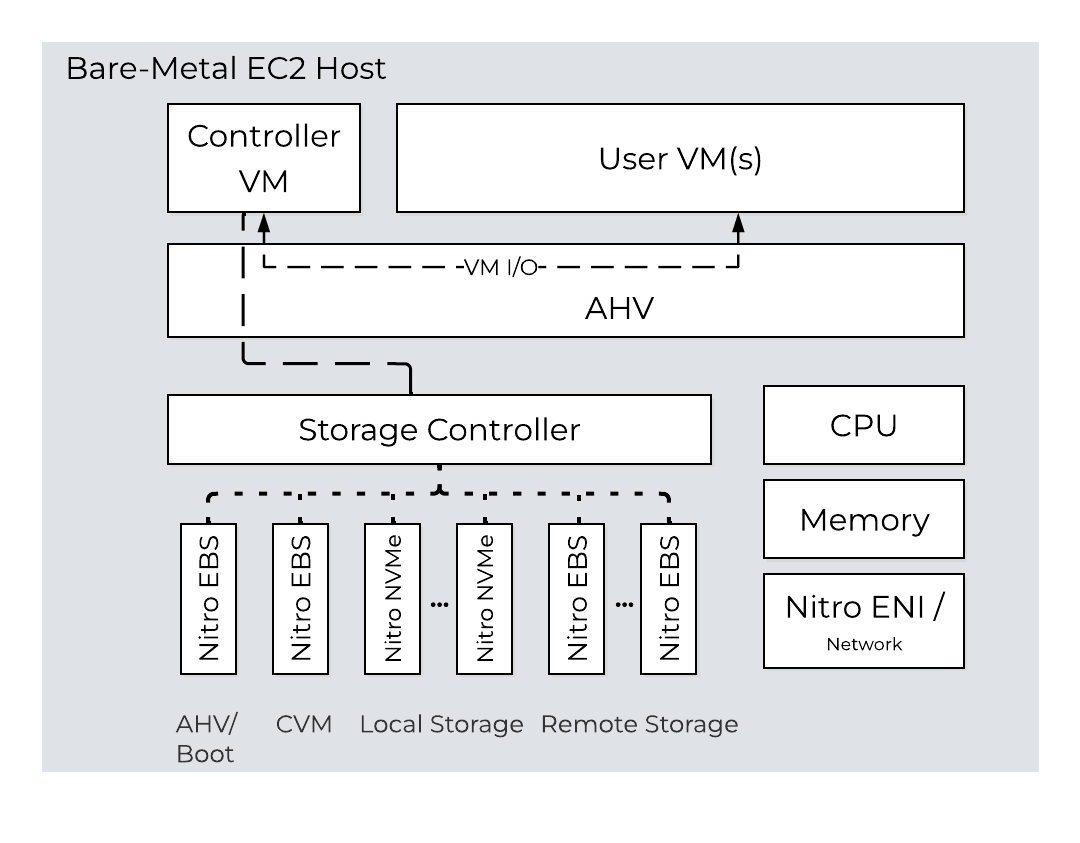

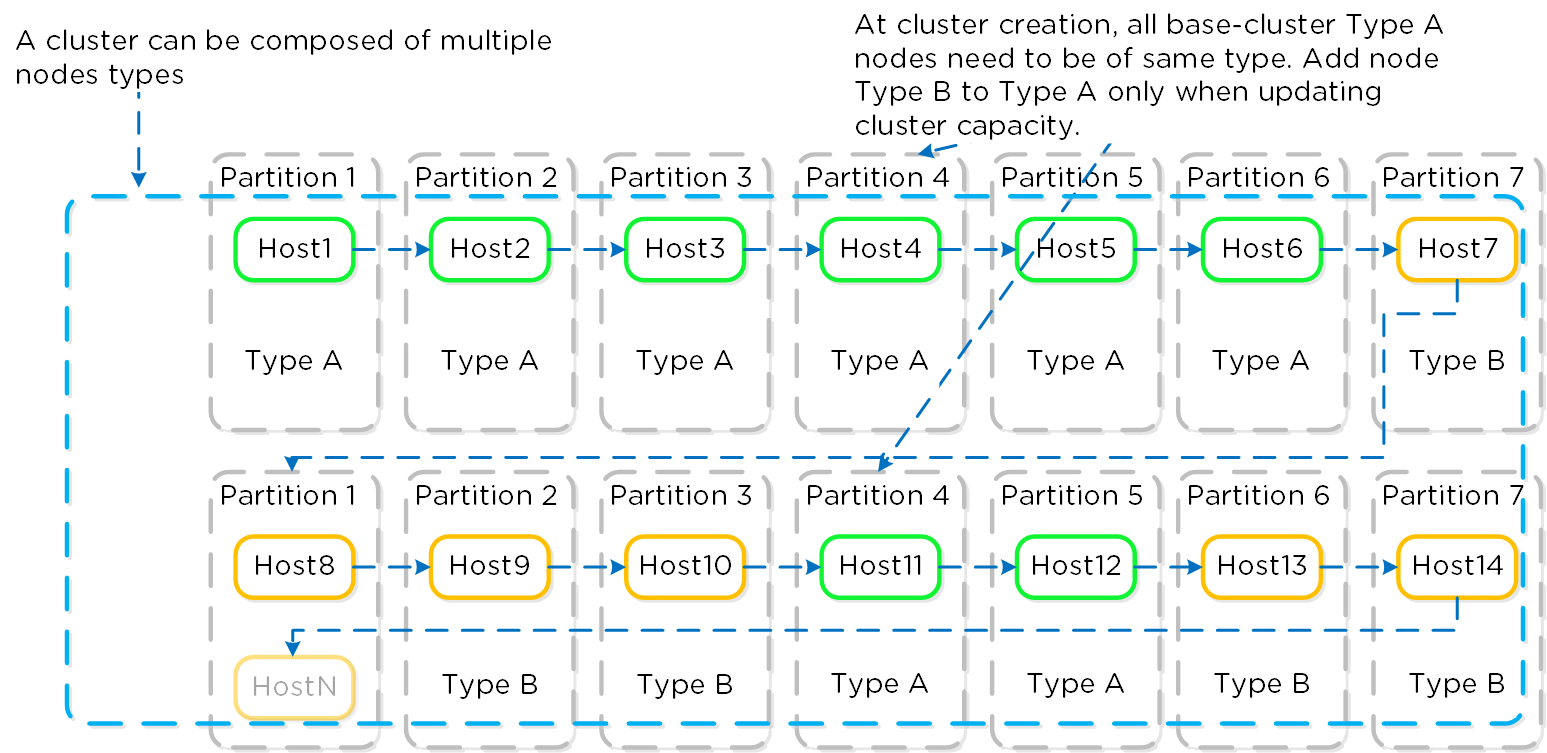

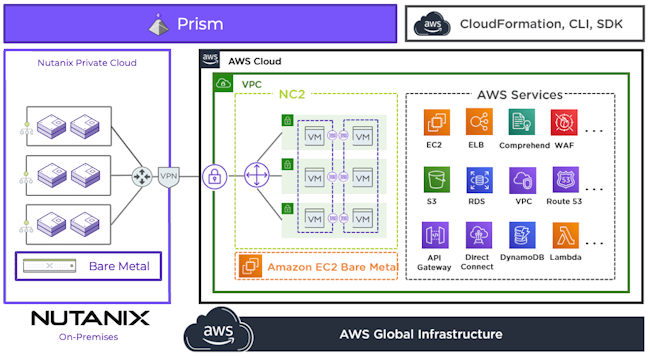

Nutanix Cloud Platform can be deployed on-prem, in public clouds using Nutanix Cloud Clusters (NC2), in a colocation, or at the edge as required. For licensing and software options, please visit the Nutanix.com Software Options page.

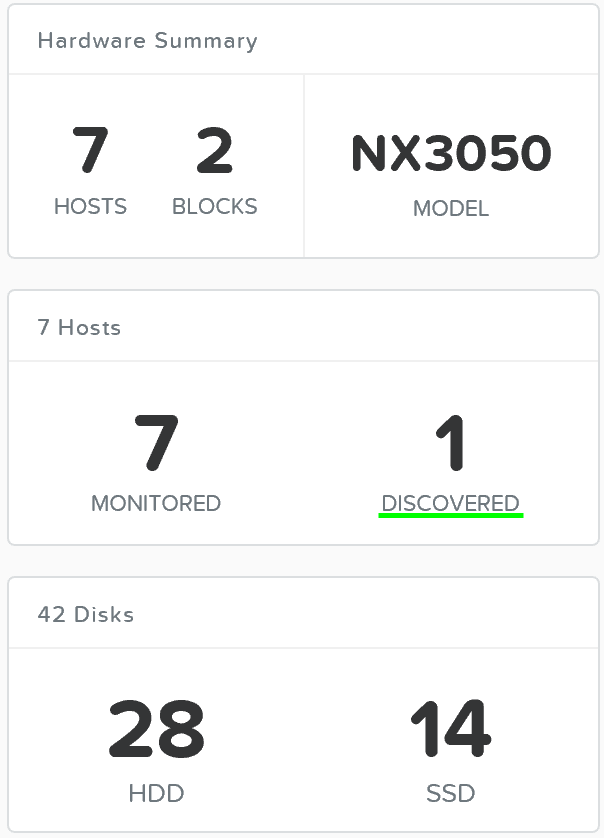

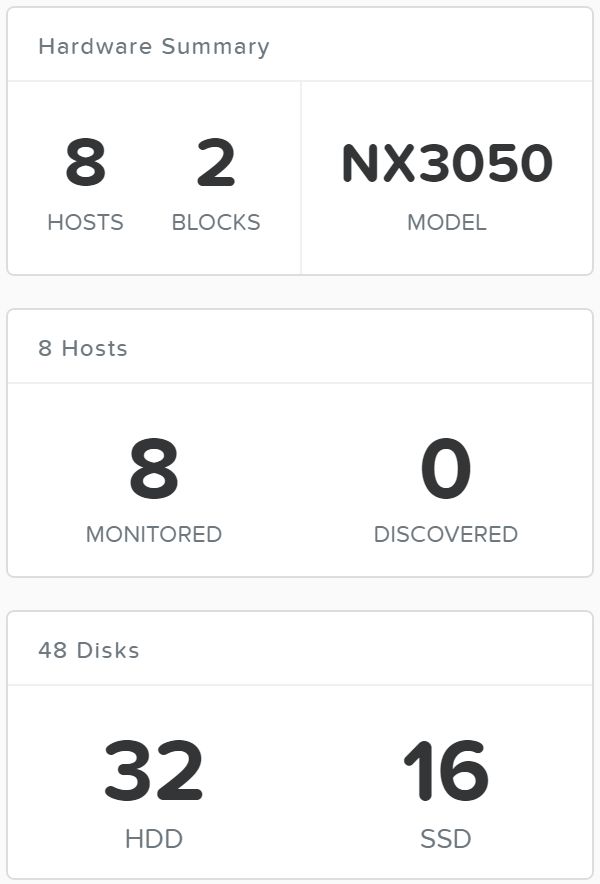

Platform

Nutanix supports a variety of vendor platforms and configurations. Platforms can be Nutanix appliances, OEM platforms and third-party server vendors for on-prem platforms. Additionally, Nutanix software also runs in public cloud platforms with NC2 and service provider clouds. For a complete list, please visit the Nutanix.com Platforms Page.

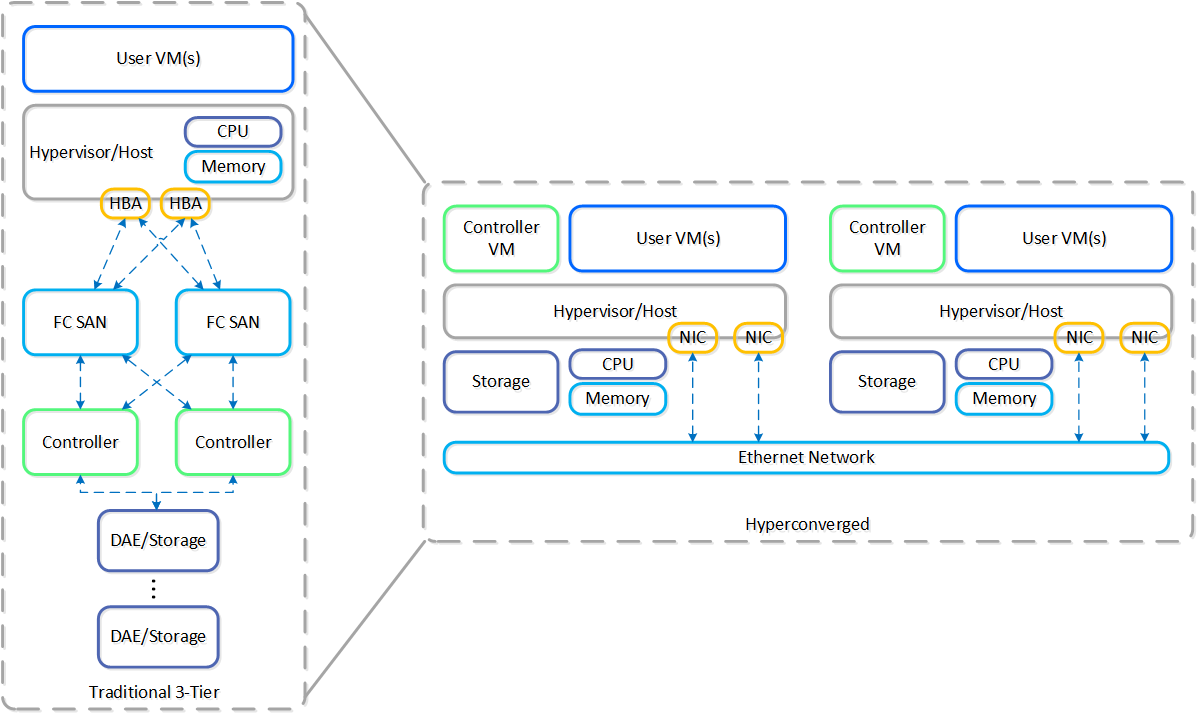

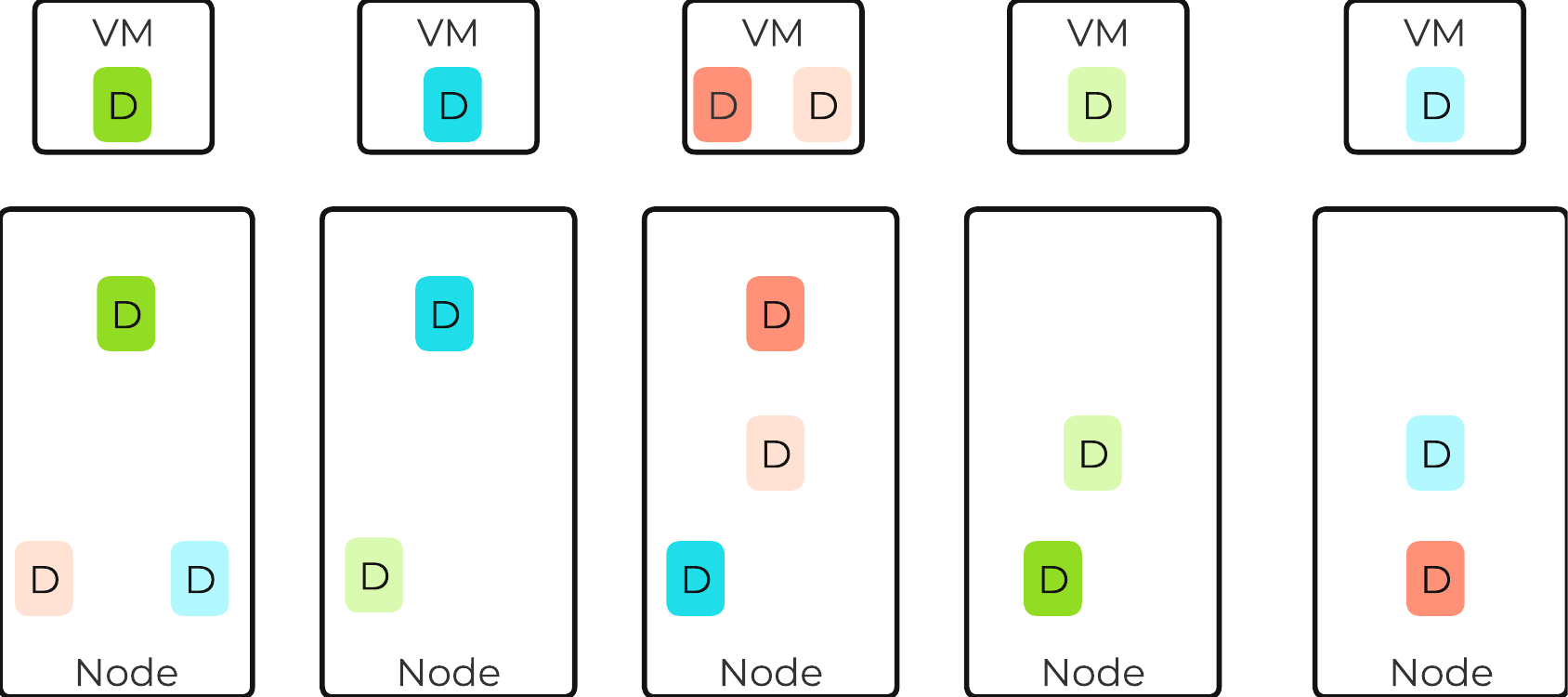

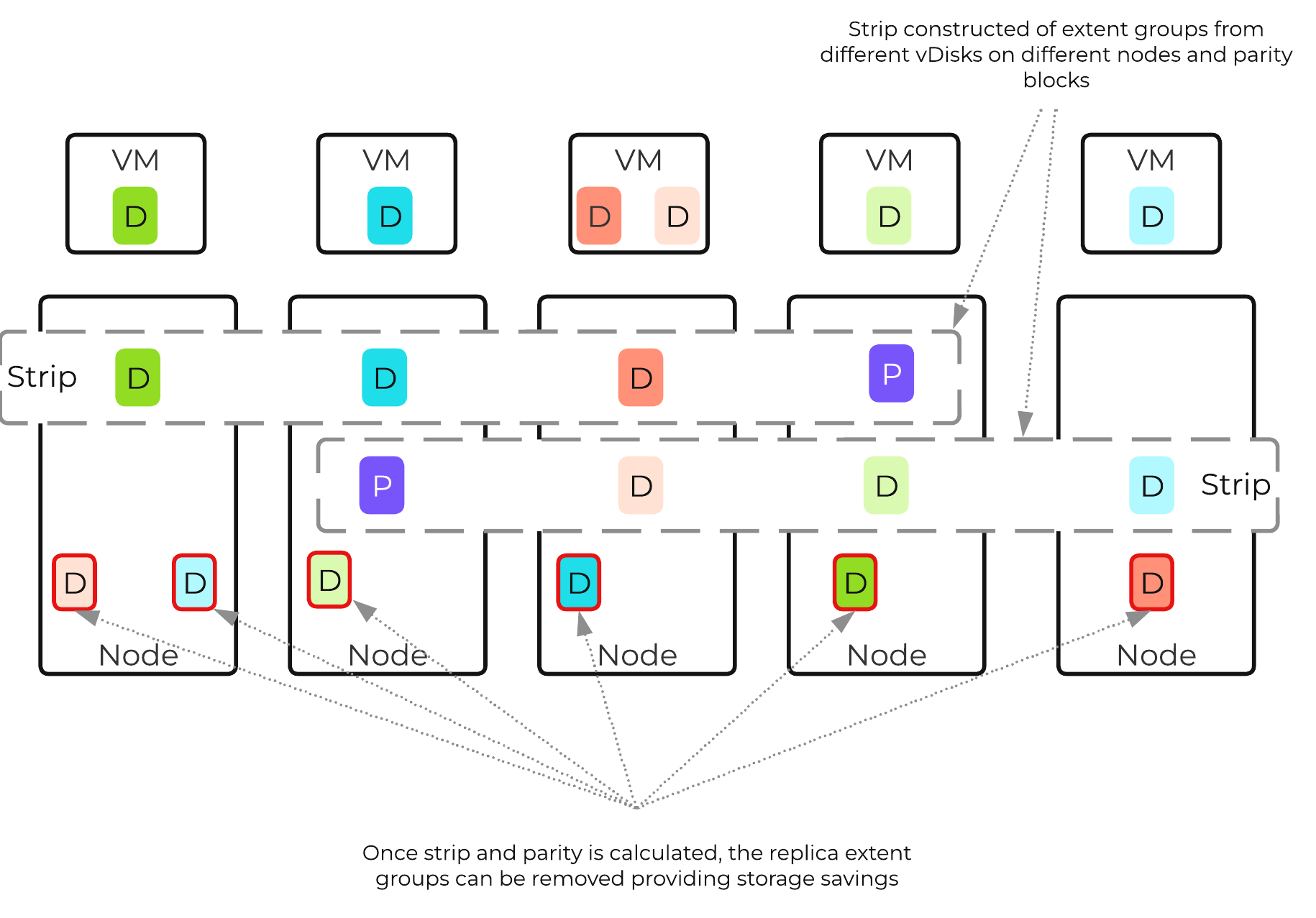

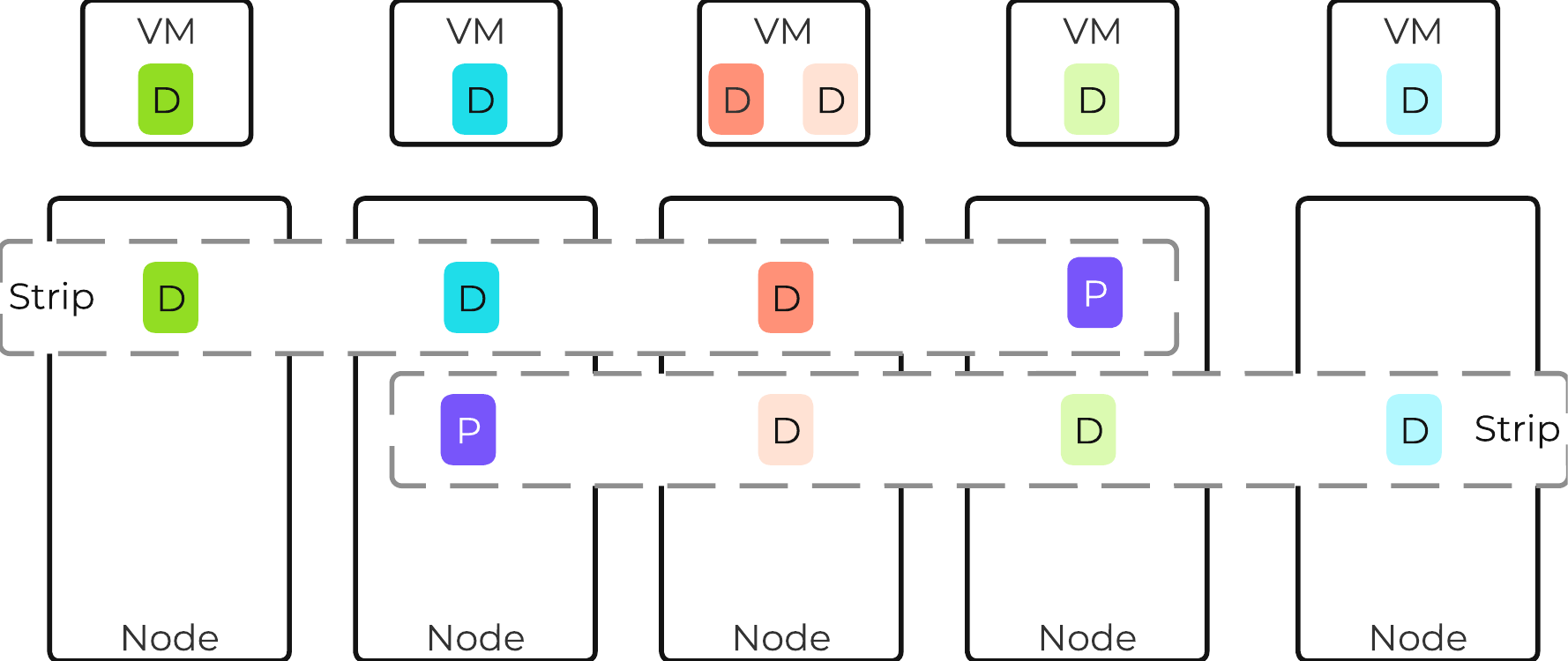

There are a few core constructs for hyperconverged systems:

- Must converge and collapse the computing stack (e.g. compute + storage)

- Must shard (distribute) data and services across nodes in the system

- Must appear and provide the same capabilities as centralized storage (e.g. HA, live-migration, etc.)

- Must keep data as close to the execution (compute) as possible (Importance of Latency)

- Should be hypervisor agnostic

- Should be hardware agnostic

The following figure shows an example of a typical 3-tier stack vs. hyperconverged:

3-Tier vs. HCI

As you can see, the hyperconverged system does the following:

- Virtualizes and moves the controllers to the host

- Provides core services and logic through software

- Distributes (shards) data across all nodes in the system

- Moves the storage local to the compute

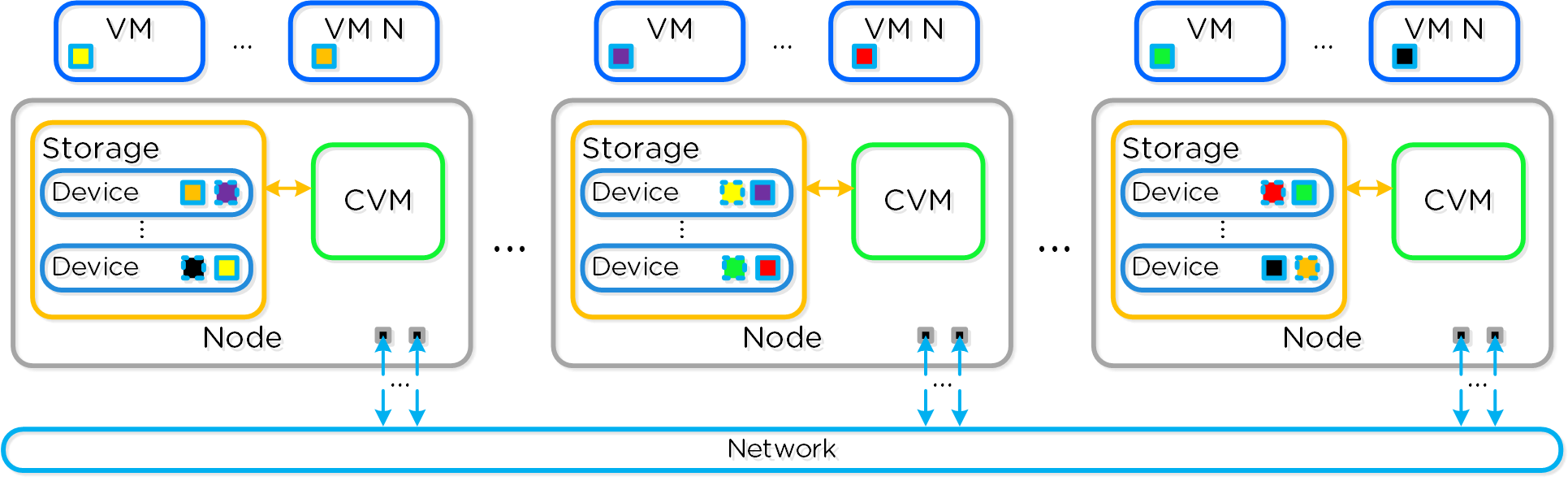

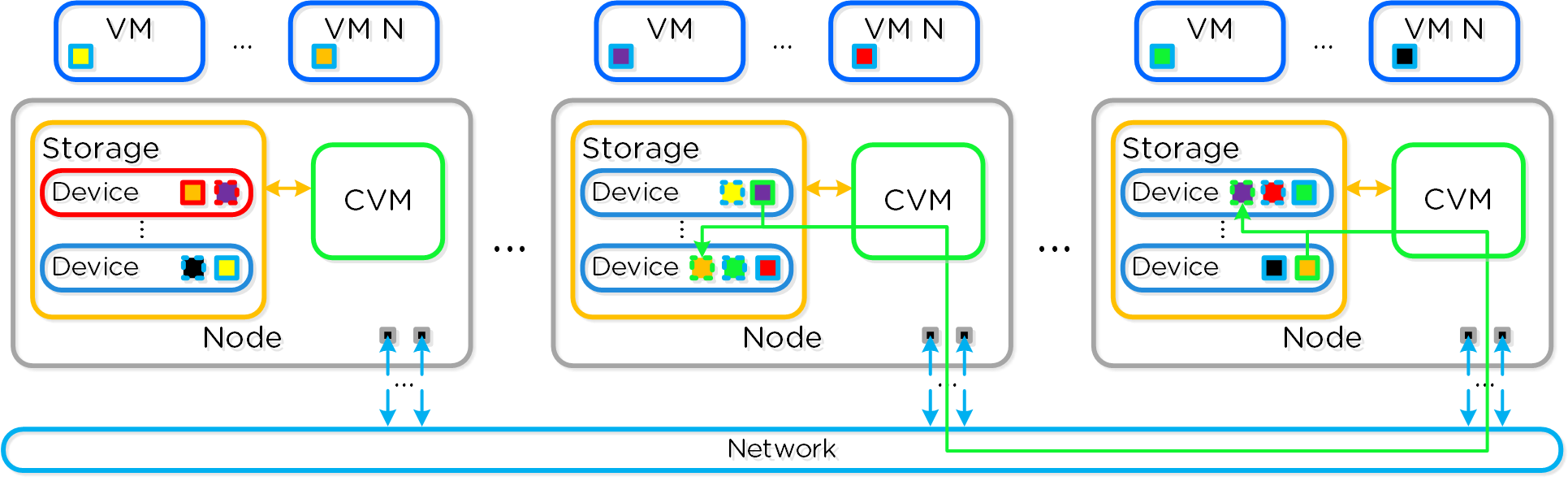

The Nutanix solution is a converged storage + compute solution which leverages local components and creates a distributed platform for running workloads.

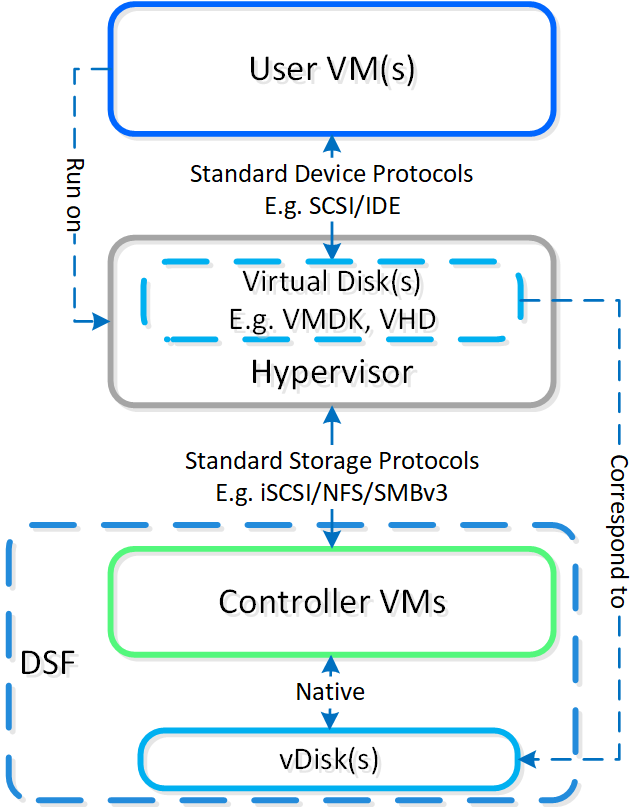

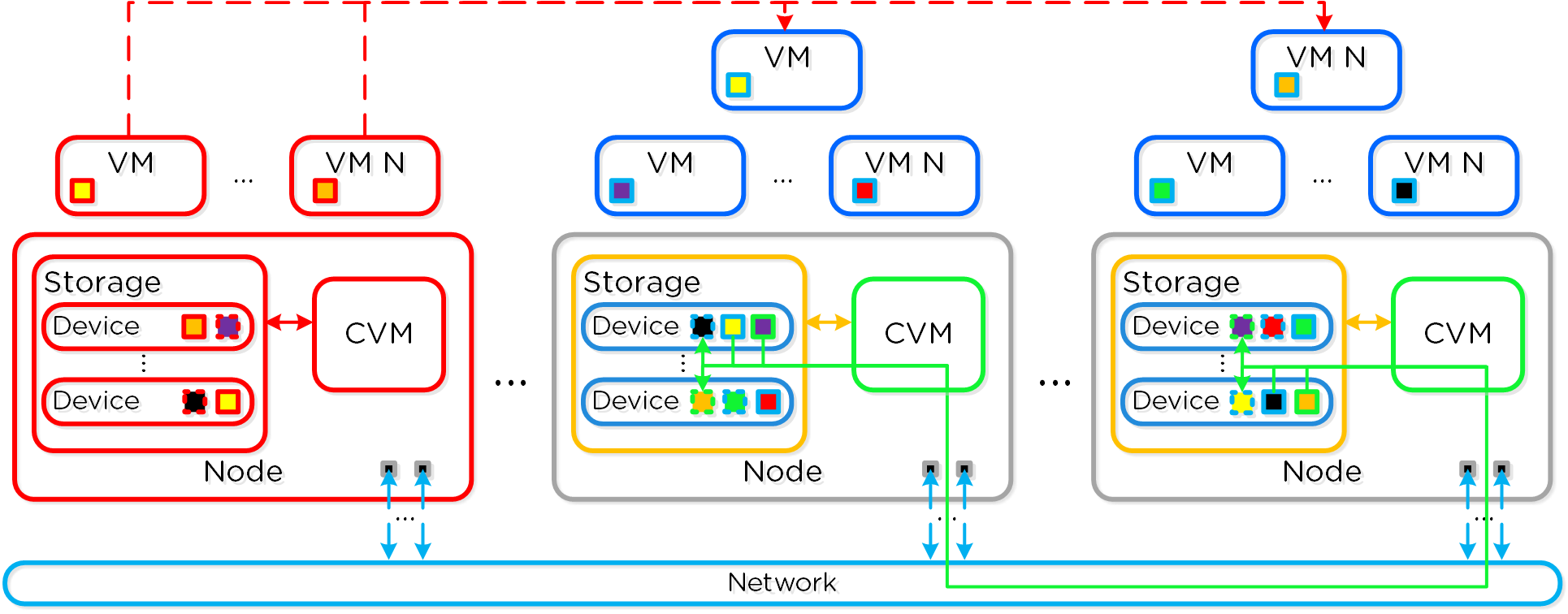

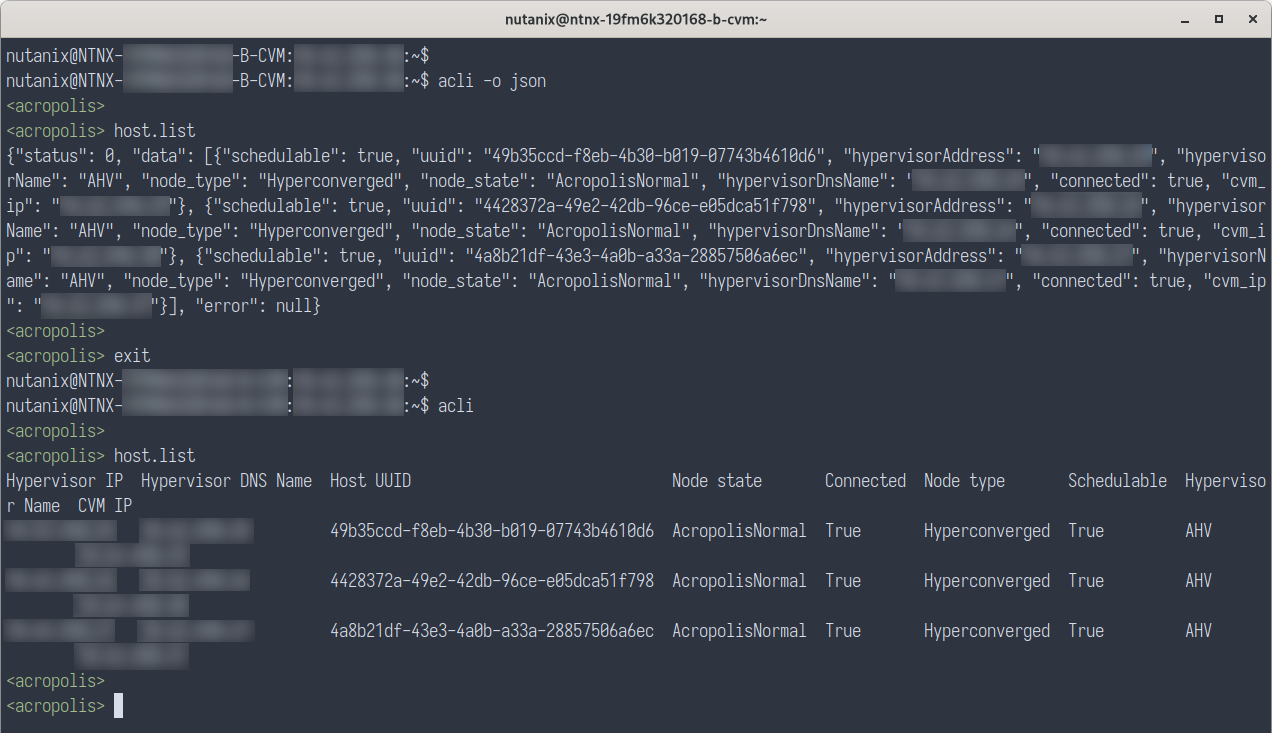

Each node runs an industry-standard hypervisor (ESXi, AHV, or Hyper-V) and the Nutanix Controller VM (CVM). The Nutanix CVM runs the Nutanix software and serves all of the I/O operations for the hypervisor and all VMs running on that host.

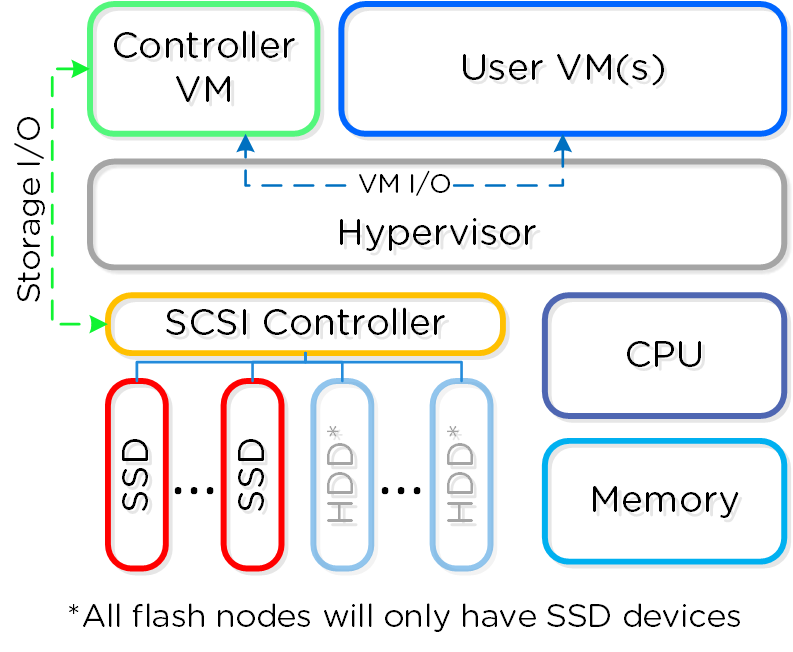

The following figure provides an example of what a typical node logically looks like:

Converged Platform

The Nutanix CVM is responsible for the core Nutanix platform logic and handles services like:

- Storage I/O & transforms (Deduplication, Compression, EC)

- UI / API

- Upgrades

- DR / Replication

- Etc.

NOTE: Some services / features will spawn additional helper VMs or use the Microservices Platform (MSP). For example, Nutanix Files will deploy additional VMs, whereas Nutanix Objects will deploy VMs for MSP and leverage those.

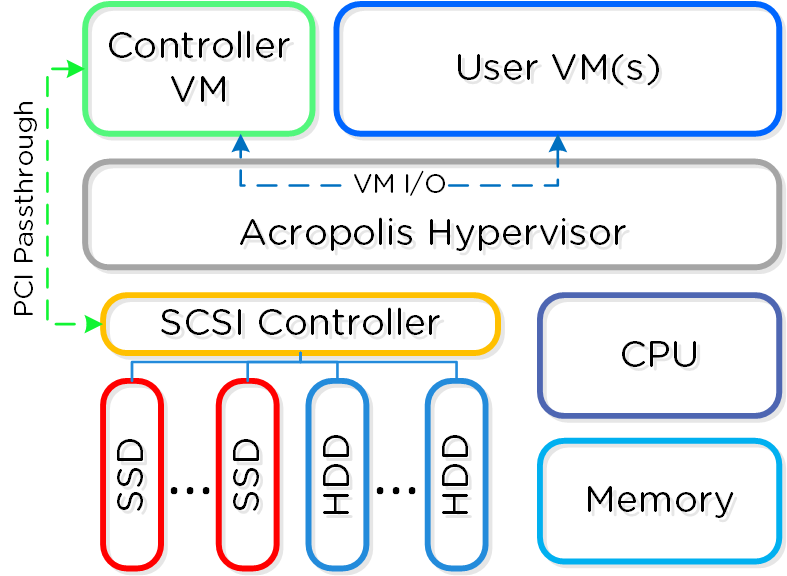

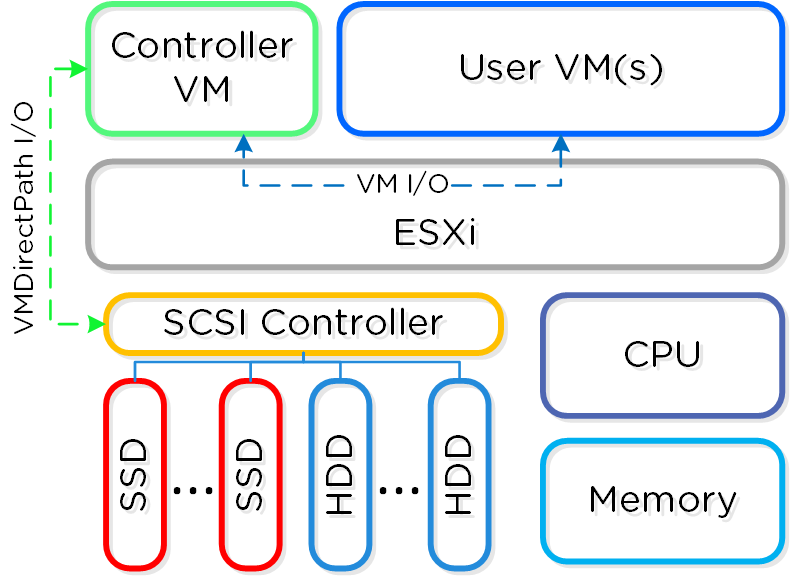

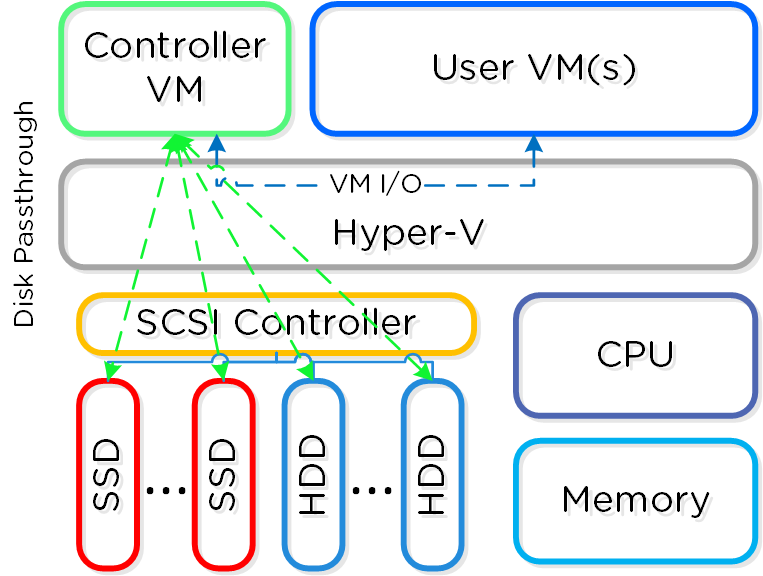

For Nutanix units running VMware vSphere, the SCSI controller, which manages the SSD and HDD devices, is passed directly to the CVM using VMDirectPath (Intel VT-d). In the case of Hyper-V, the storage devices are passed through to the CVM.

Note

Virtualizing the Controller

The key reasons for running the Nutanix controllers as VMs in user-space really come down to four core areas:

- Mobility

- Resiliency

- Maintenance / Upgrades

- Performance, yes really

Since the beginning we knew we were more than a single platform company. In that sense, choice has always been a big thing for us, whether it is with hardware, cloud or hypervisor vendors.

By running as a VM in user-space it decouples the Nutanix software from the underlying hypervisor and hardware platforms. This enabled us to rapidly add support for other hypervisors while keeping the core code base the same across all operating environments (on-premises & cloud). Additionally, it gave us flexibility to not be bound to vendor specific release cycles.

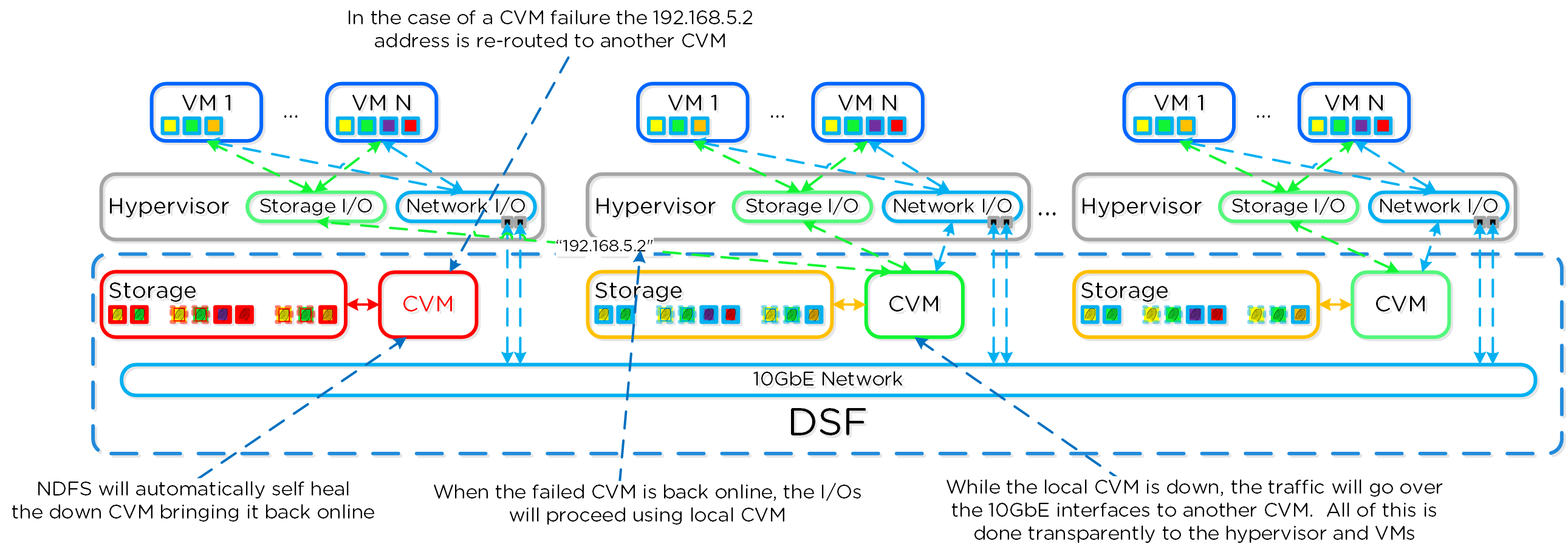

Because the CVM runs as a VM in user-space, we can elegantly handle things like upgrades or CVM "failures" as they are outside of the hypervisor. For example, if there is some catastrophic issue where a CVM goes down, the whole node still continues to operate with storage I/Os and services coming from other CVMs in the cluster. During an AOS (Nutanix Core Software) upgrade, we can reboot the CVM without any impact to the workloads running on that host.

But isn't being in the kernel so much faster? Simple answer: NO.

A common discussion topic is the debate around being in the kernel vs. in user-space. It is recommended to read through the "User vs. Kernel Space" section; it covers what both actually are and the pros and cons of each.

To summarize, there are two areas of execution in an operating system (OS): the kernel (privileged core of the OS where drivers may sit) and user space (where applications/processes sit). Traditionally moving between user-space and the kernel (aka context switch) can be expensive in terms of CPU and time (~1,000ns / context switch).

The argument is that being in the kernel is always better / faster, which is false. No matter what, there will always be context switches in the guest VM's OS.
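To put the ~1,000ns figure in perspective, the following rough sketch computes what share of an I/O's total latency would be spent on context switches. The device latencies and the number of switches per I/O are illustrative assumptions, not measured Nutanix values, and `switch_overhead_pct` is a hypothetical helper.

```python
# Rough arithmetic only; the latencies and switch counts are assumptions.
CONTEXT_SWITCH_NS = 1_000  # ~1,000ns per context switch, as quoted above


def switch_overhead_pct(device_latency_ns: int, switches_per_io: int) -> float:
    """Return the percentage of total I/O latency spent in context switches."""
    overhead_ns = switches_per_io * CONTEXT_SWITCH_NS
    return 100.0 * overhead_ns / (device_latency_ns + overhead_ns)


if __name__ == "__main__":
    # Hypothetical device latencies, in nanoseconds.
    devices = [("HDD (~10ms)", 10_000_000), ("SSD (~100us)", 100_000)]
    for name, latency_ns in devices:
        pct = switch_overhead_pct(latency_ns, switches_per_io=2)
        print(f"{name}: {pct:.2f}% of I/O time spent in context switches")
```

Under these assumptions the switch cost is a small fraction of the device latency, and it shrinks further as the device gets slower.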

There are three core principles for distributed systems:

- Must have no single points of failure (SPOF)

- Must not have any bottlenecks at any scale (must be linearly scalable)

- Must leverage concurrency (MapReduce)

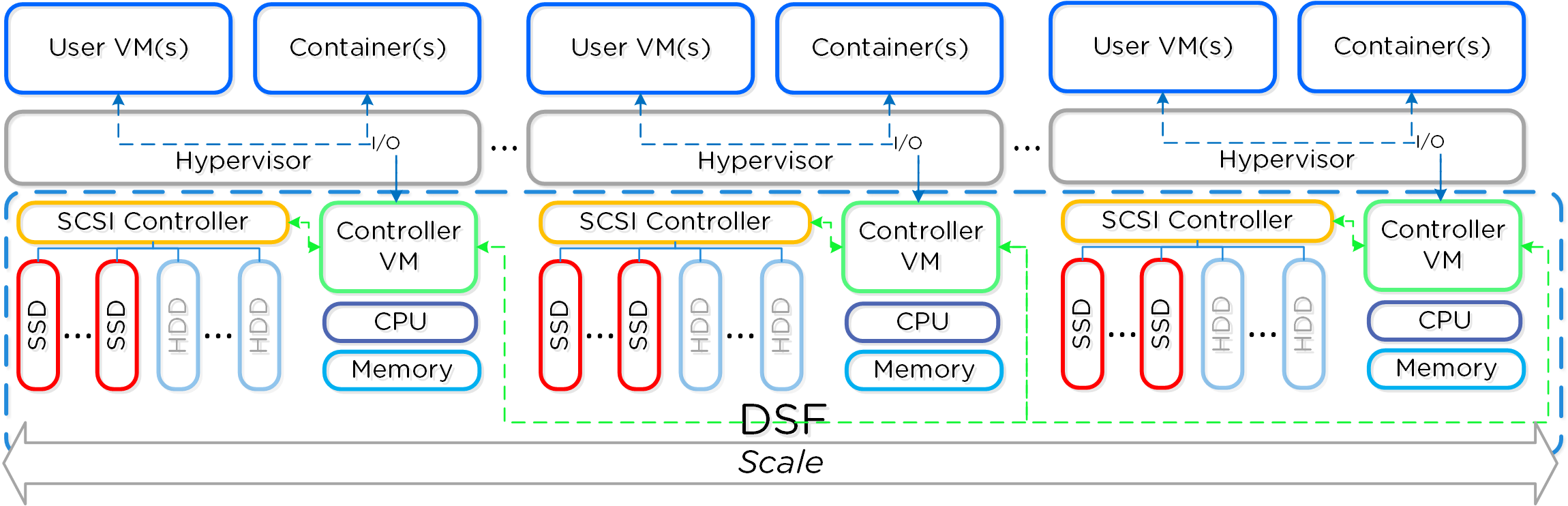

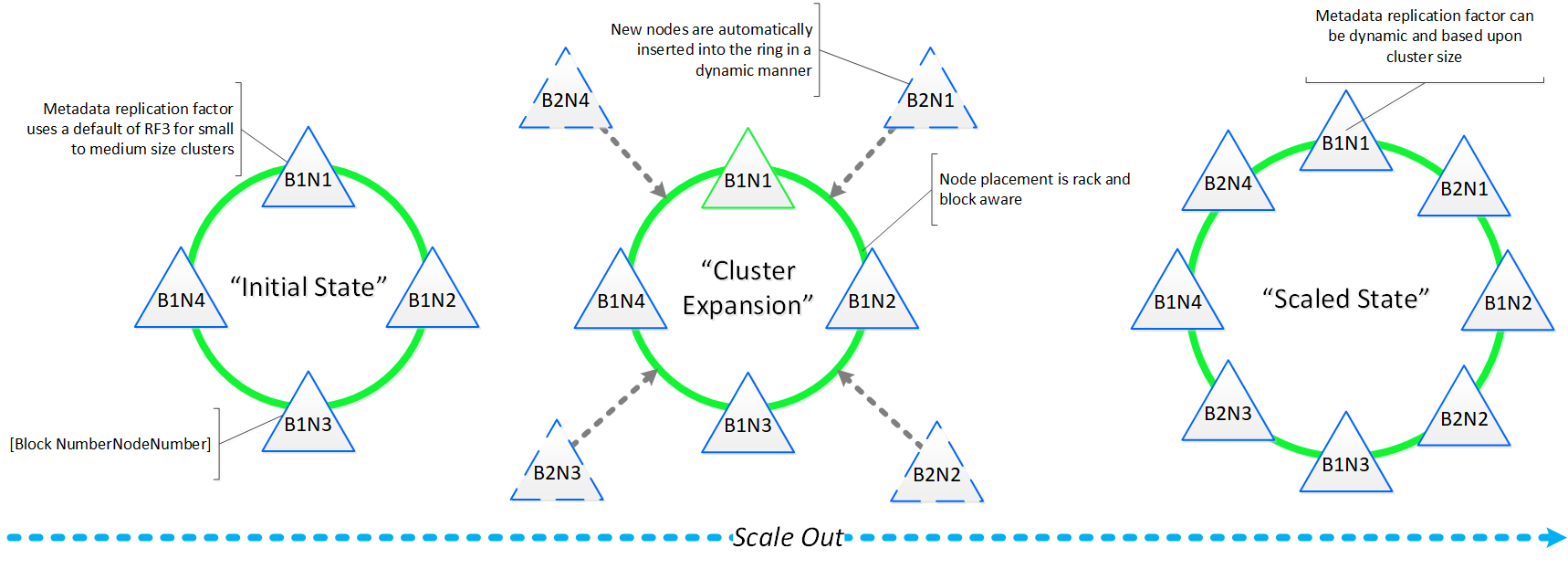

Together, a group of Nutanix nodes forms a distributed system (Nutanix cluster) responsible for providing the Prism and AOS capabilities. All services and components are distributed across all CVMs in a cluster to provide for high-availability and linear performance at scale.

The following figure shows an example of how these Nutanix nodes form a Nutanix cluster:

Nutanix Cluster - Distributed System

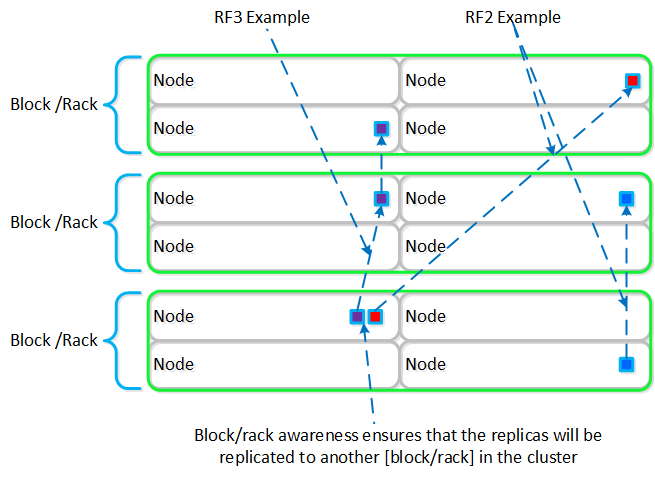

These techniques are also applied to metadata and data alike. By ensuring metadata and data are distributed across all nodes and all disk devices, we can ensure the highest possible performance during normal data ingest and re-protection.

This enables our MapReduce Framework (Curator) to leverage the full power of the cluster to perform activities concurrently. Sample activities include data re-protection, compression, erasure coding, deduplication, etc.
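As a rough illustration of the MapReduce pattern (not Curator's actual implementation), the sketch below has each node "map" over the extent replicas it holds locally, then "reduces" the per-node counts to find extents with fewer replicas than a target replication factor. The node names, extent IDs, and the `find_under_replicated` helper are all hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

# Hypothetical view of which extent replicas each node stores locally.
NODE_EXTENTS = {
    "node-a": ["e1", "e2", "e3"],
    "node-b": ["e1", "e3"],
    "node-c": ["e2", "e4"],
}


def map_node(extents):
    """Map phase: each node counts the replicas it holds locally."""
    return Counter(extents)


def find_under_replicated(node_extents, replication_factor=2):
    """Reduce phase: merge per-node counts and flag extents below the target."""
    with ThreadPoolExecutor() as pool:  # map tasks run concurrently
        partials = list(pool.map(map_node, node_extents.values()))
    totals = sum(partials, Counter())
    return sorted(e for e, n in totals.items() if n < replication_factor)


if __name__ == "__main__":
    print(find_under_replicated(NODE_EXTENTS))
```

In this toy data set only `e4` has a single replica, so it is the one extent a re-protection task would need to act on.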

The Nutanix cluster is designed to accommodate and remediate failure. The system will transparently handle and remediate the failure, continuing to operate as expected. The user will be alerted, but rather than being a critical time-sensitive item, any remediation (e.g. replace a failed node) can be done on the admin’s schedule.

If you need to add additional resources to your Nutanix cluster, you can scale out linearly simply by adding new nodes. With traditional 3-tier architecture, simply adding additional servers will not scale out your storage performance. However, with a hyperconverged platform like Nutanix, when you scale out with new node(s) you’re scaling out:

- The number of hypervisor / compute nodes

- The number of storage controllers

- The compute and storage performance / capacity

- The number of nodes participating in cluster wide operations

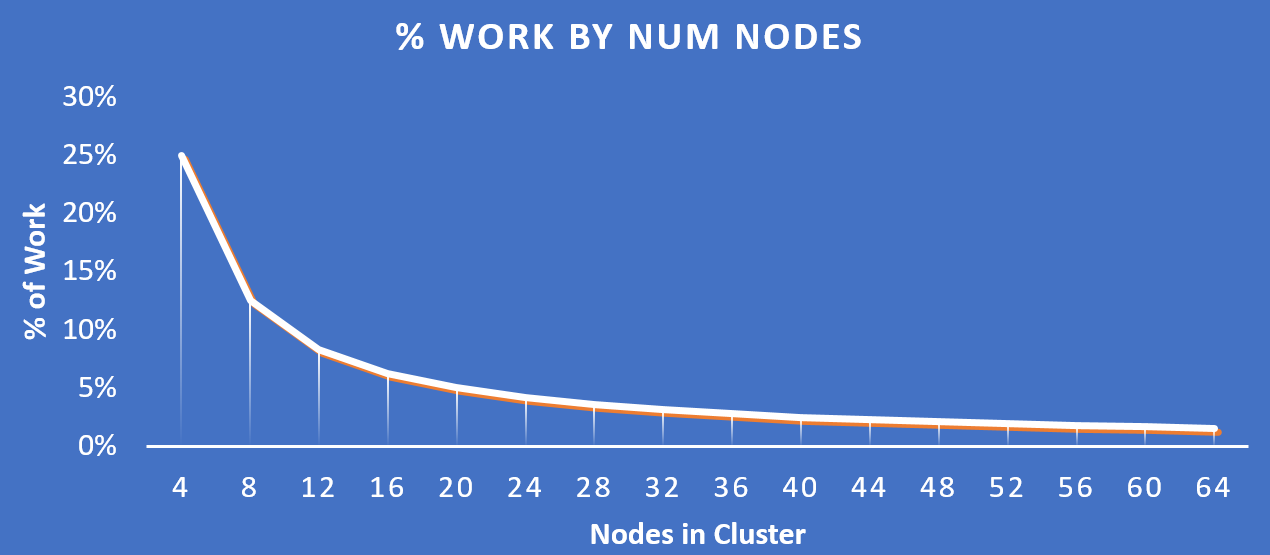

The following figure shows how the % of work handled by each node drastically decreases as the cluster scales:

Work Distribution - Cluster Scale

Key point: As the number of nodes in a cluster increases (cluster scaling), certain activities actually become more efficient as each node is handling only a fraction of the work.
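The effect can be shown with simple arithmetic. The sketch below assumes a cluster-wide task is split evenly across nodes, which is an idealization; `work_share_pct` is a hypothetical helper.

```python
def work_share_pct(num_nodes: int) -> float:
    """Percentage of a cluster-wide task handled per node, assuming an even split."""
    if num_nodes < 1:
        raise ValueError("a cluster needs at least one node")
    return 100.0 / num_nodes


if __name__ == "__main__":
    for nodes in (3, 4, 8, 16, 32):
        print(f"{nodes:>2} nodes -> {work_share_pct(nodes):.1f}% of the work per node")
```

Going from 4 to 32 nodes takes each node's share from 25% to roughly 3%, which is why operations such as rebuilds can complete faster on larger clusters.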

There are four core principles for software-defined systems:

- Must provide platform mobility (hardware, hypervisor)

- Must not be reliant on any custom hardware

- Must enable rapid speed of development (features, bug fixes, security patches)

- Must take advantage of Moore’s Law

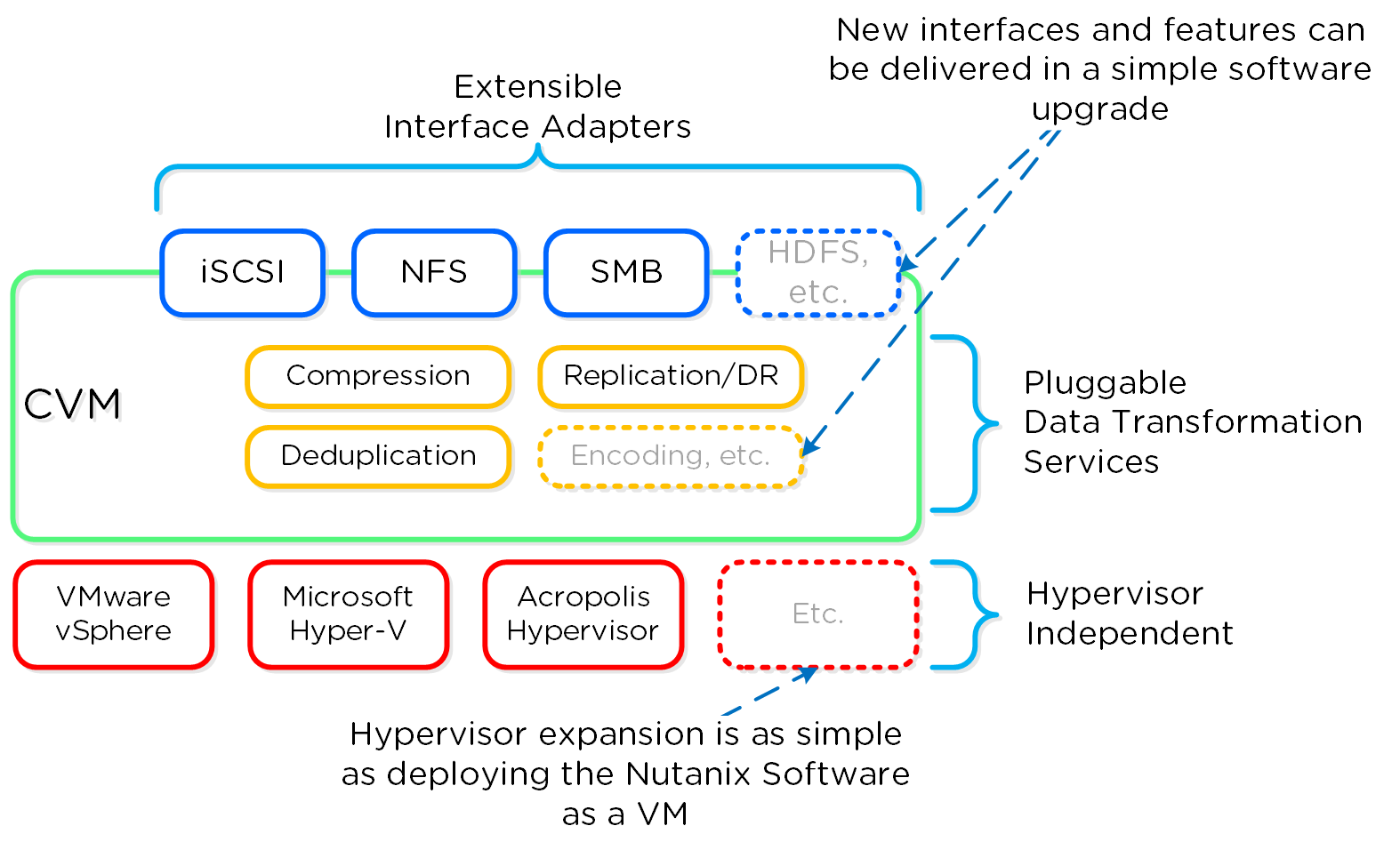

As mentioned above (likely numerous times), the Nutanix platform is a software-based solution which ships as a bundled software + hardware appliance. The controller VM is where the vast majority of the Nutanix software and logic sits and was designed from the beginning to be an extensible and pluggable architecture. A key benefit to being software-defined and not relying upon any hardware offloads or constructs is around extensibility. As with any product life cycle, advancements and new features will always be introduced.

By not relying on any custom ASIC/FPGA or hardware capabilities, Nutanix can develop and deploy these new features through a simple software update. This means that a new feature (e.g., deduplication) can be deployed by upgrading the current version of the Nutanix software. This also allows newer generation features to be deployed on legacy hardware models. For example, say you’re running a workload on an older version of Nutanix software on a prior generation hardware platform (e.g., 2400). The running software version doesn’t provide deduplication capabilities, which your workload could benefit greatly from. To get these features, you perform a rolling upgrade of the Nutanix software version while the workload is running, and you now have deduplication. It’s really that easy.

Similar to features, the ability to create new “adapters” or interfaces into DSF is another key capability. When the product first shipped, it solely supported iSCSI for I/O from the hypervisor; this has now grown to include NFS and SMB. In the future, new adapters can be created for various workloads and hypervisors (HDFS, etc.). And again, all of this can be deployed via a software update. This is contrary to most legacy infrastructures, where a hardware upgrade or software purchase is normally required to get the “latest and greatest” features. With Nutanix, it’s different. Since all features are deployed in software, they can run on any hardware platform, any hypervisor, and be deployed through simple software upgrades.

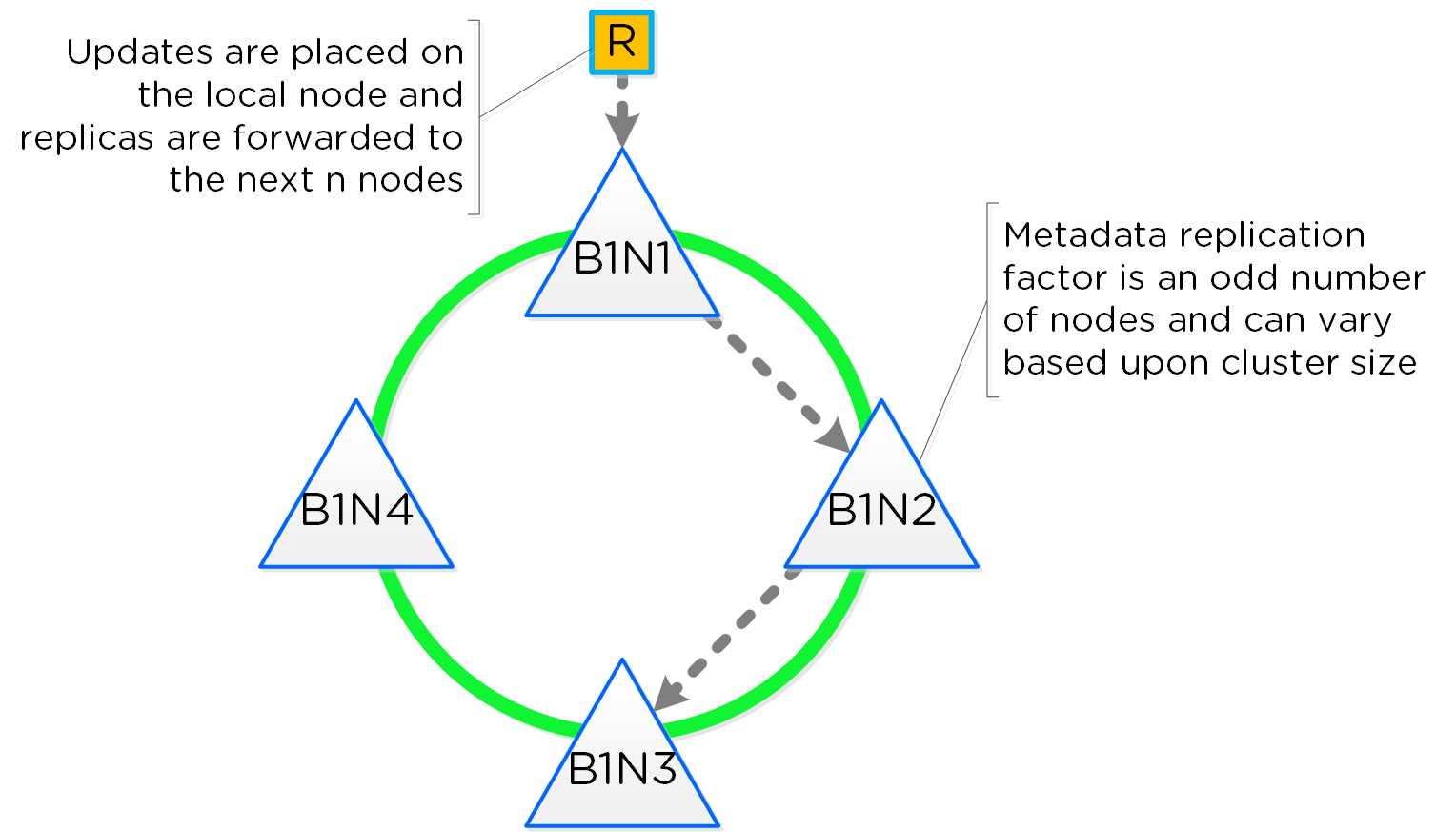

The following figure shows a logical representation of what this software-defined controller framework looks like:

Software-Defined Controller Framework

The user-facing Nutanix product is extremely simple to deploy and use. This is primarily possible through abstraction and a lot of automation / integration in the software.

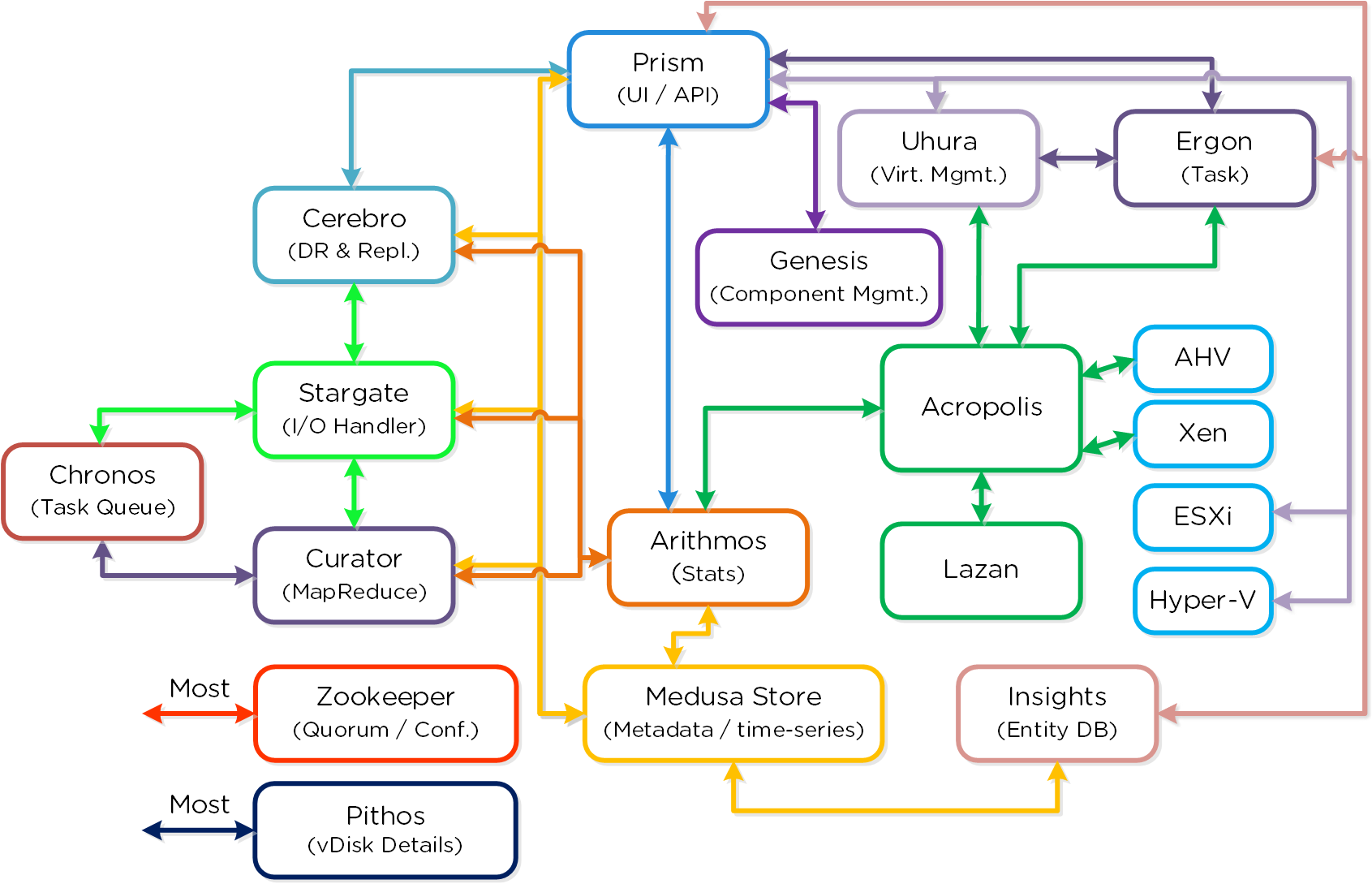

The following is a detailed view of the main Nutanix Cluster components (don’t worry, no need to memorize or know what everything does):

Nutanix Cluster Components

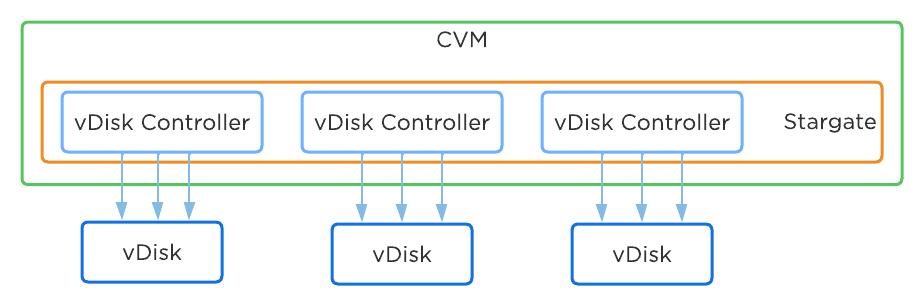

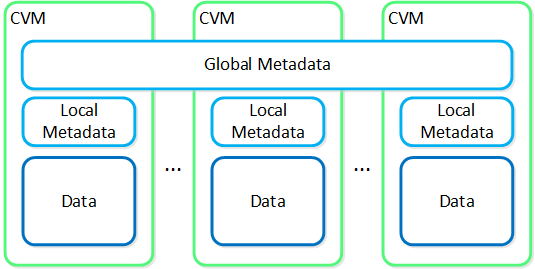

Cassandra

- Key Role: Distributed metadata store

- Description: Cassandra stores and manages all of the cluster metadata in a distributed ring-like manner based upon a heavily modified Apache Cassandra. The Paxos algorithm is utilized to enforce strict consistency. This service runs on every node in the cluster. Cassandra is accessed via an interface called Medusa.
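To make the "ring-like" placement concrete, here is a minimal sketch of a generic consistent-hashing ring. It illustrates the general technique only and is not the heavily modified Cassandra that Nutanix ships; the `Ring` class, the MD5-based tokens, the CVM names, and the default of three replicas are all assumptions made for the example.

```python
import bisect
import hashlib


def _token(value: str) -> int:
    """Hash a string to a position (token) on the ring."""
    return int(hashlib.md5(value.encode()).hexdigest(), 16)


class Ring:
    def __init__(self, nodes):
        # Each node owns one token; the sorted tokens form the ring.
        self._tokens = sorted((_token(n), n) for n in nodes)

    def owners(self, key: str, replicas: int = 3):
        """Return the nodes storing `key`: the first node clockwise from the
        key's token, followed by the next nodes on the ring as replicas."""
        start = bisect.bisect(self._tokens, (_token(key), ""))
        count = min(replicas, len(self._tokens))
        size = len(self._tokens)
        return [self._tokens[(start + i) % size][1] for i in range(count)]


if __name__ == "__main__":
    ring = Ring(["cvm-1", "cvm-2", "cvm-3", "cvm-4"])
    print(ring.owners("vdisk-42:extent-7"))
```

Because placement depends only on the key's hash and the node tokens, any node can compute where a piece of metadata lives without consulting a central directory.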

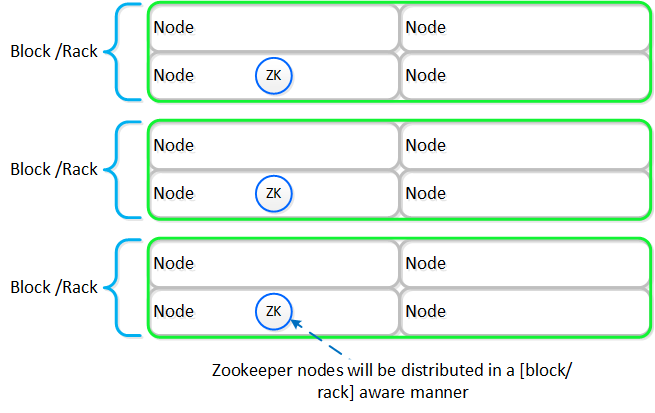

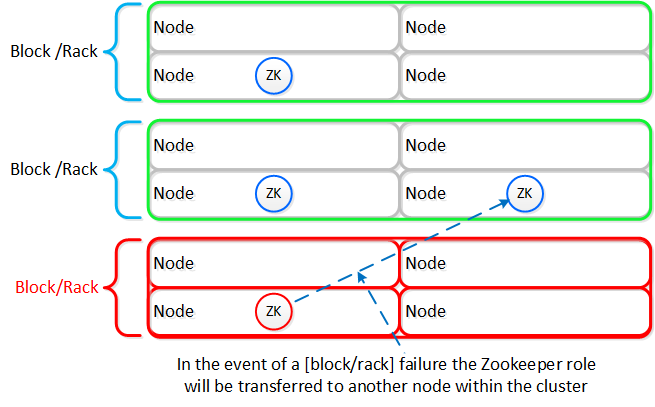

Zookeeper

- Key Role: Cluster configuration manager

- Description: Zookeeper stores all of the cluster configuration including hosts, IPs, state, etc. and is based upon Apache Zookeeper. This service runs on three nodes in the cluster, one of which is elected as a leader. The leader receives all requests and forwards them to its peers. If the leader fails to respond, a new leader is automatically elected. Zookeeper is accessed via an interface called Zeus.

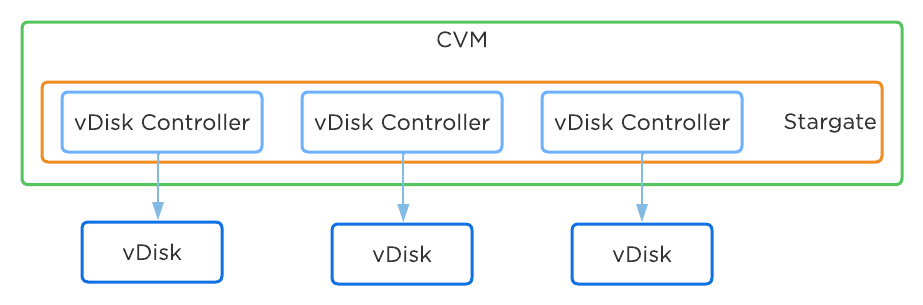

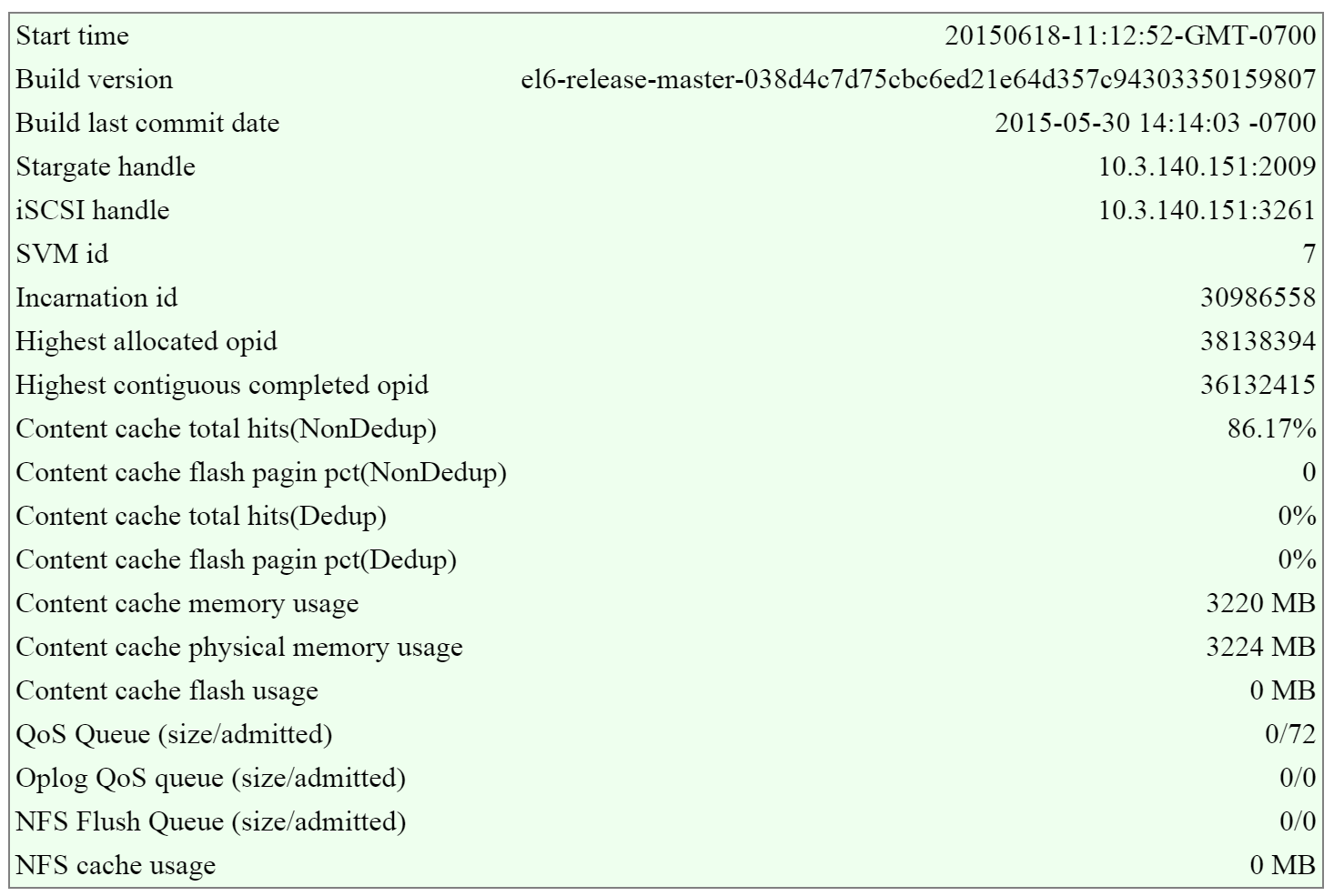

Stargate

- Key Role: Data I/O manager

- Description: Stargate is responsible for all data management and I/O operations and is the main interface from the hypervisor (via NFS, iSCSI, or SMB). This service runs on every node in the cluster in order to serve localized I/O.

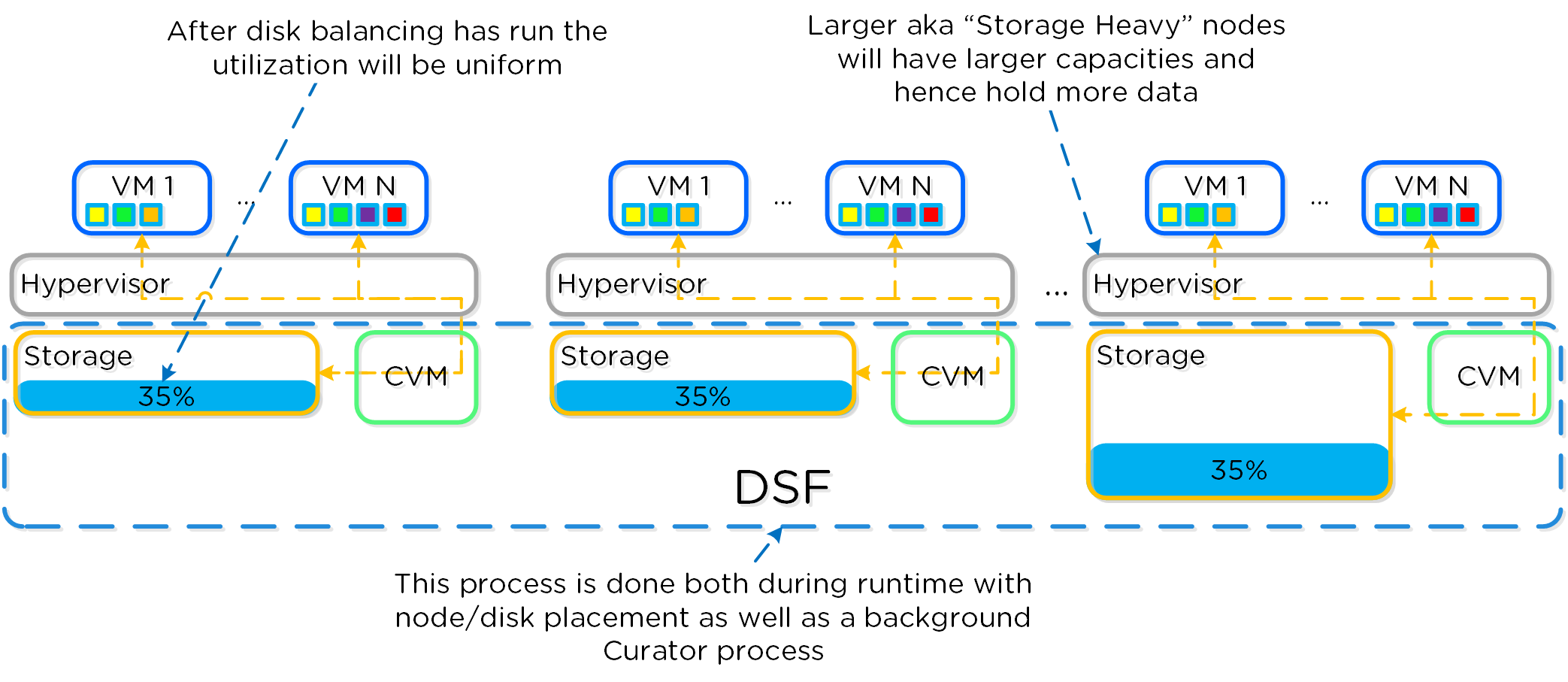

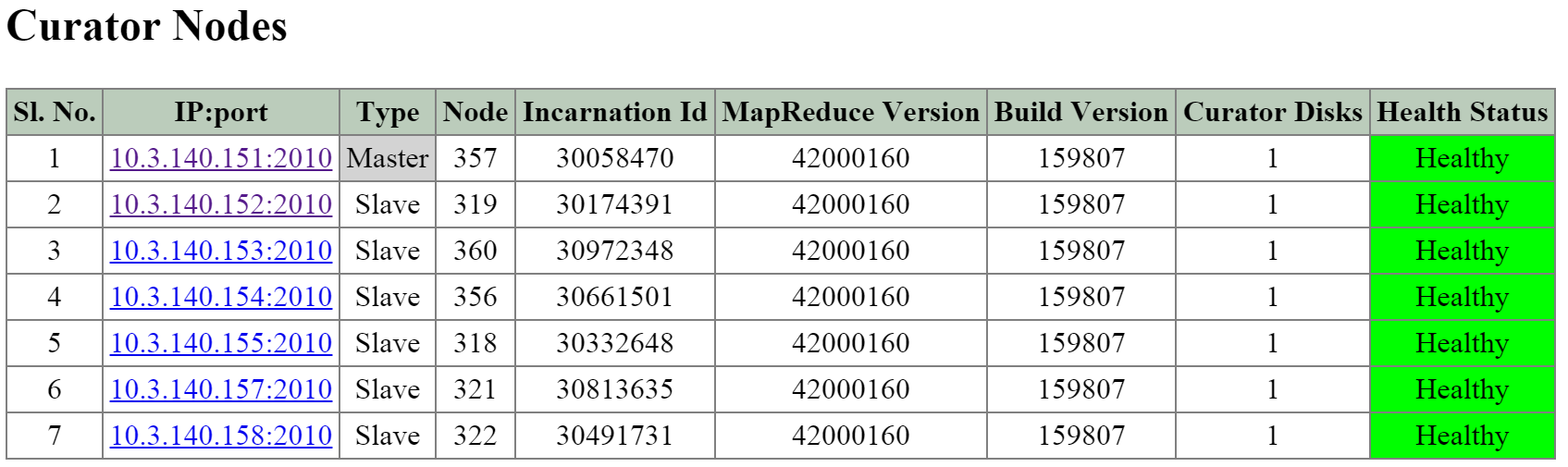

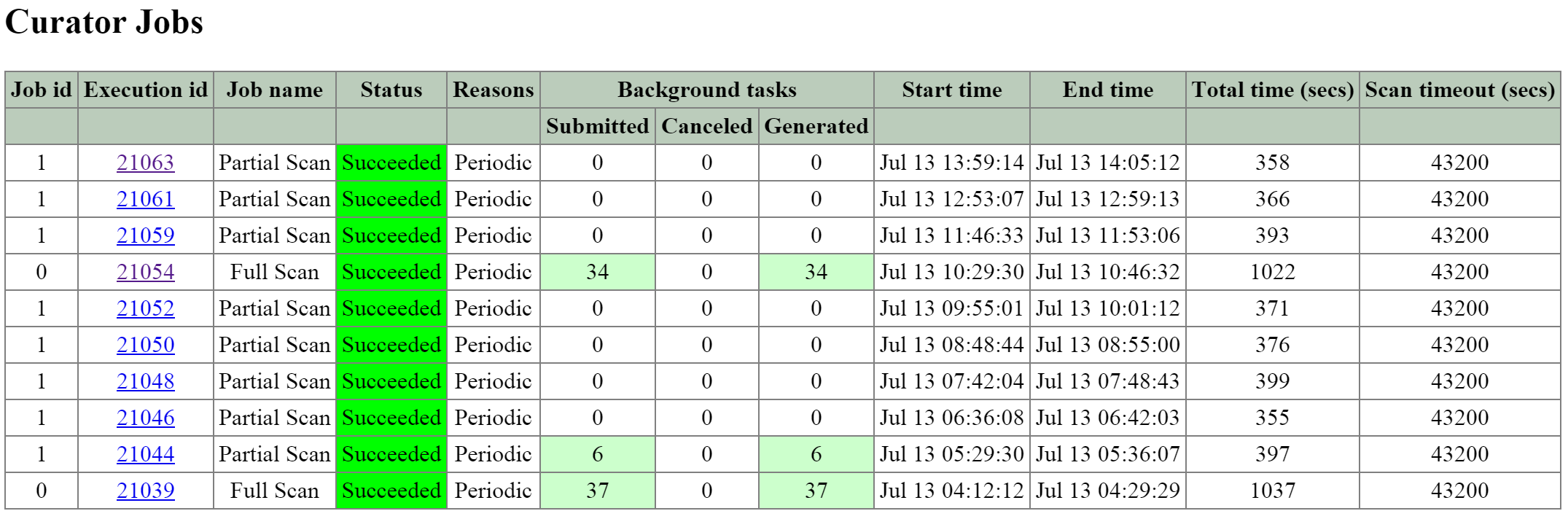

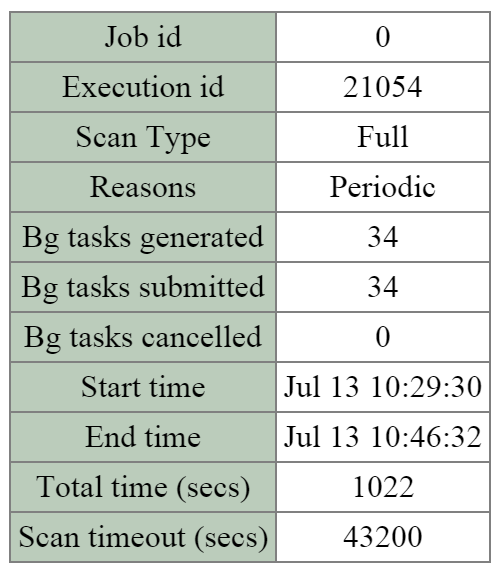

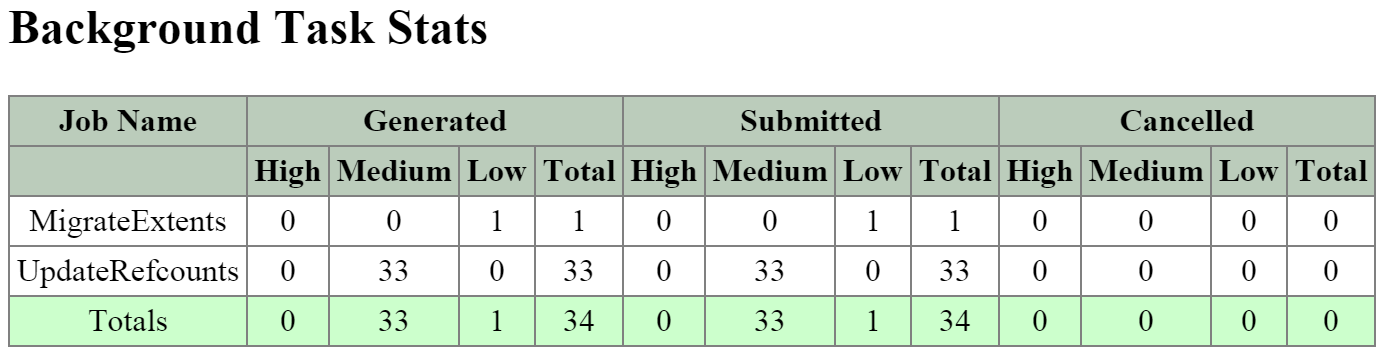

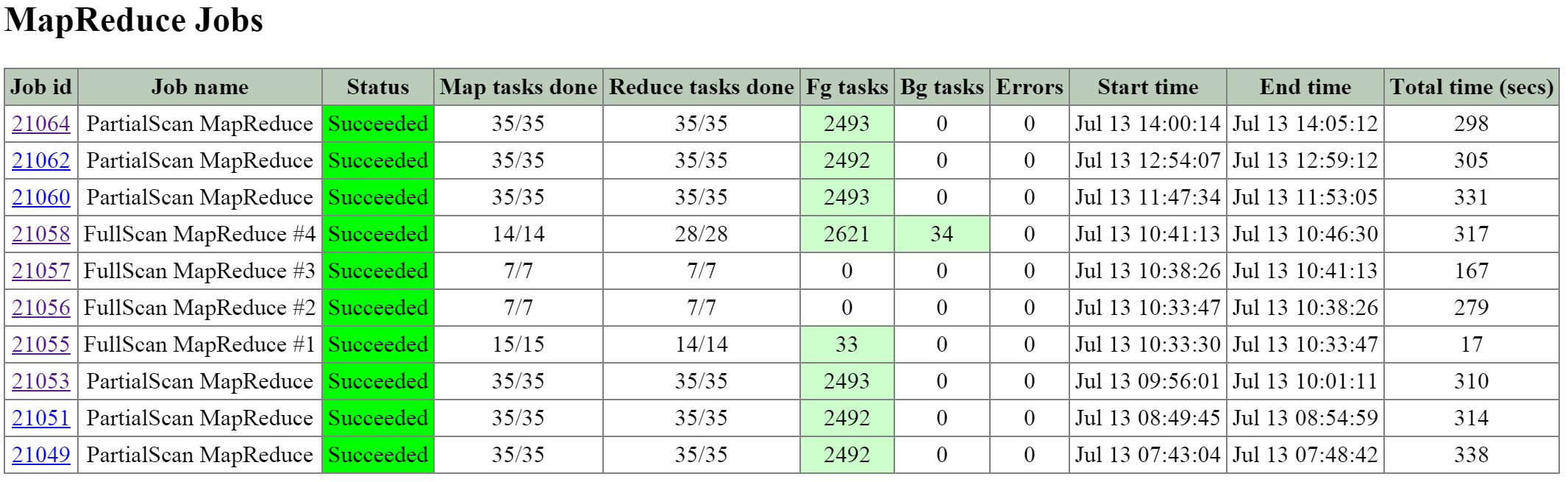

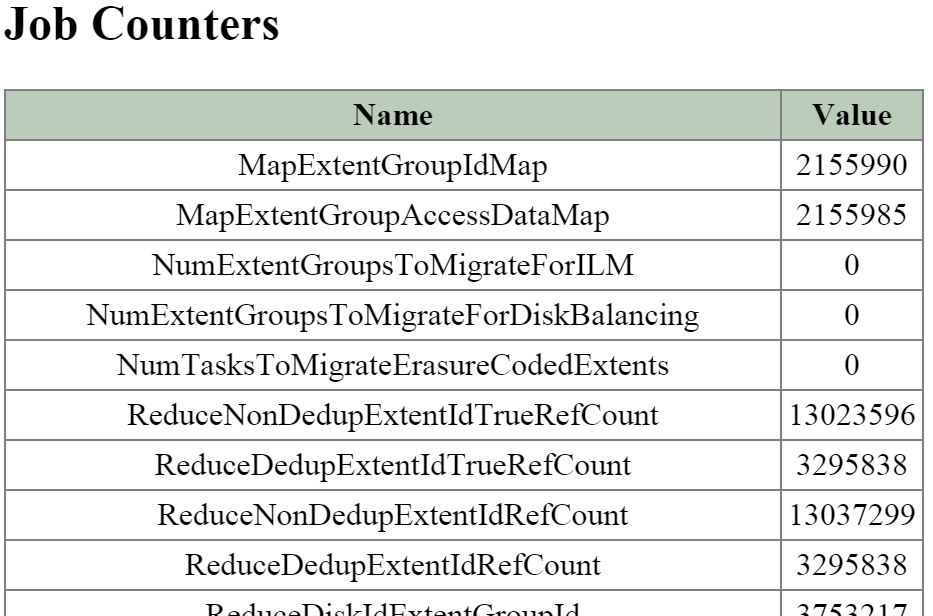

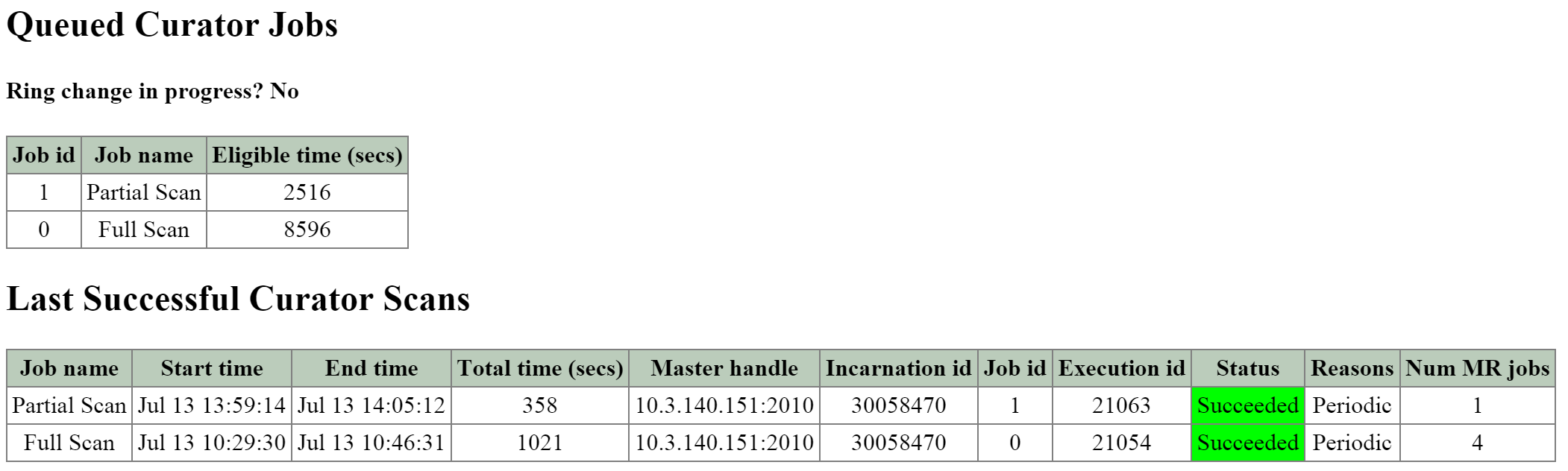

Curator

- Key Role: MapReduce cluster management and cleanup

- Description: Curator is responsible for managing and distributing tasks throughout the cluster, including disk balancing, proactive scrubbing, and many more items. Curator runs on every node and is controlled by an elected Curator Leader who is responsible for the task and job delegation. There are two scan types for Curator, a full scan which occurs around every 6 hours and a partial scan which occurs every hour.

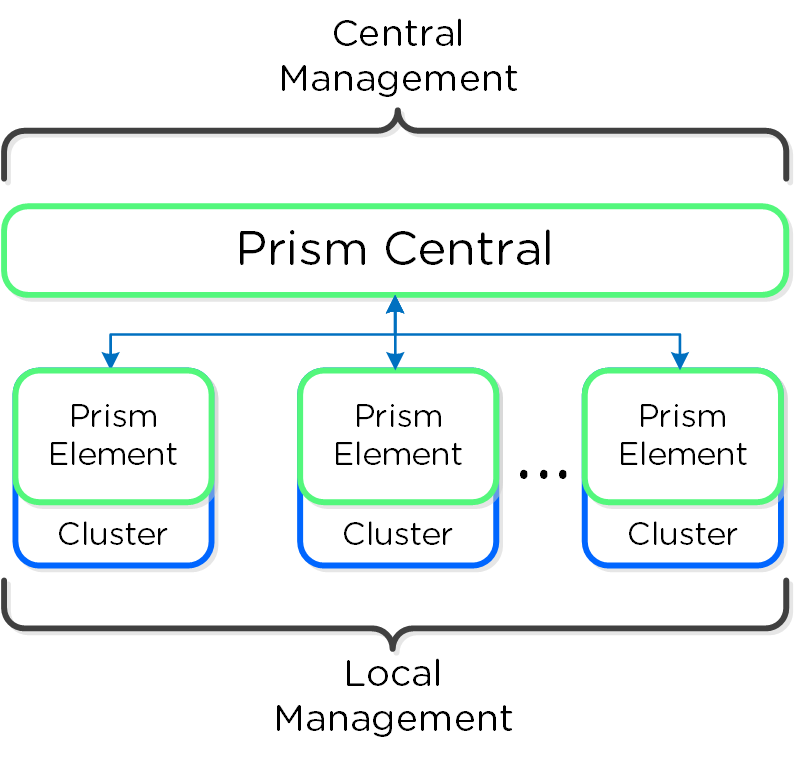

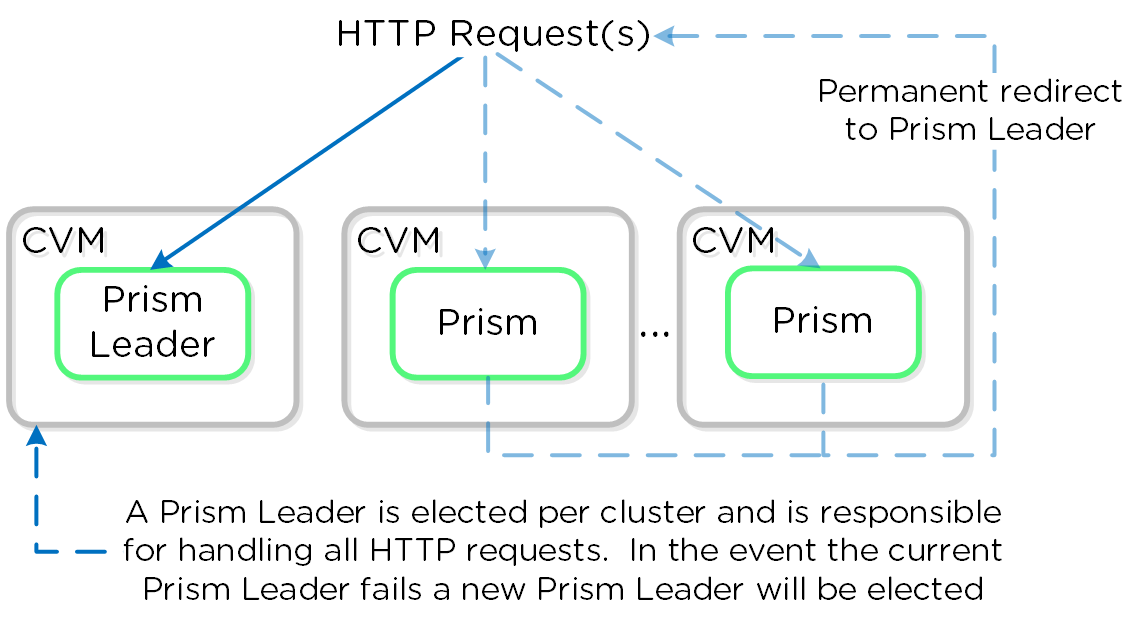

Prism

- Key Role: UI and API

- Description: Prism is the management gateway for components and administrators to configure and monitor the Nutanix cluster. This includes nCLI, the HTML5 UI, and REST API. Prism runs on every node in the cluster and uses an elected leader like all components in the cluster.

Genesis

- Key Role: Cluster component & service manager

- Description: Genesis is a process which runs on each node and is responsible for any services interactions (start/stop/etc.) as well as for the initial configuration. Genesis is a process which runs independently of the cluster and does not require the cluster to be configured/running. The only requirement for Genesis to be running is that Zookeeper is up and running. The cluster_init and cluster_status pages are displayed by the Genesis process.

Chronos

- Key Role: Job and task scheduler

- Description: Chronos is responsible for taking the jobs and tasks resulting from a Curator scan and scheduling/throttling tasks among nodes. Chronos runs on every node and is controlled by an elected Chronos Leader that is responsible for the task and job delegation and runs on the same node as the Curator Leader.

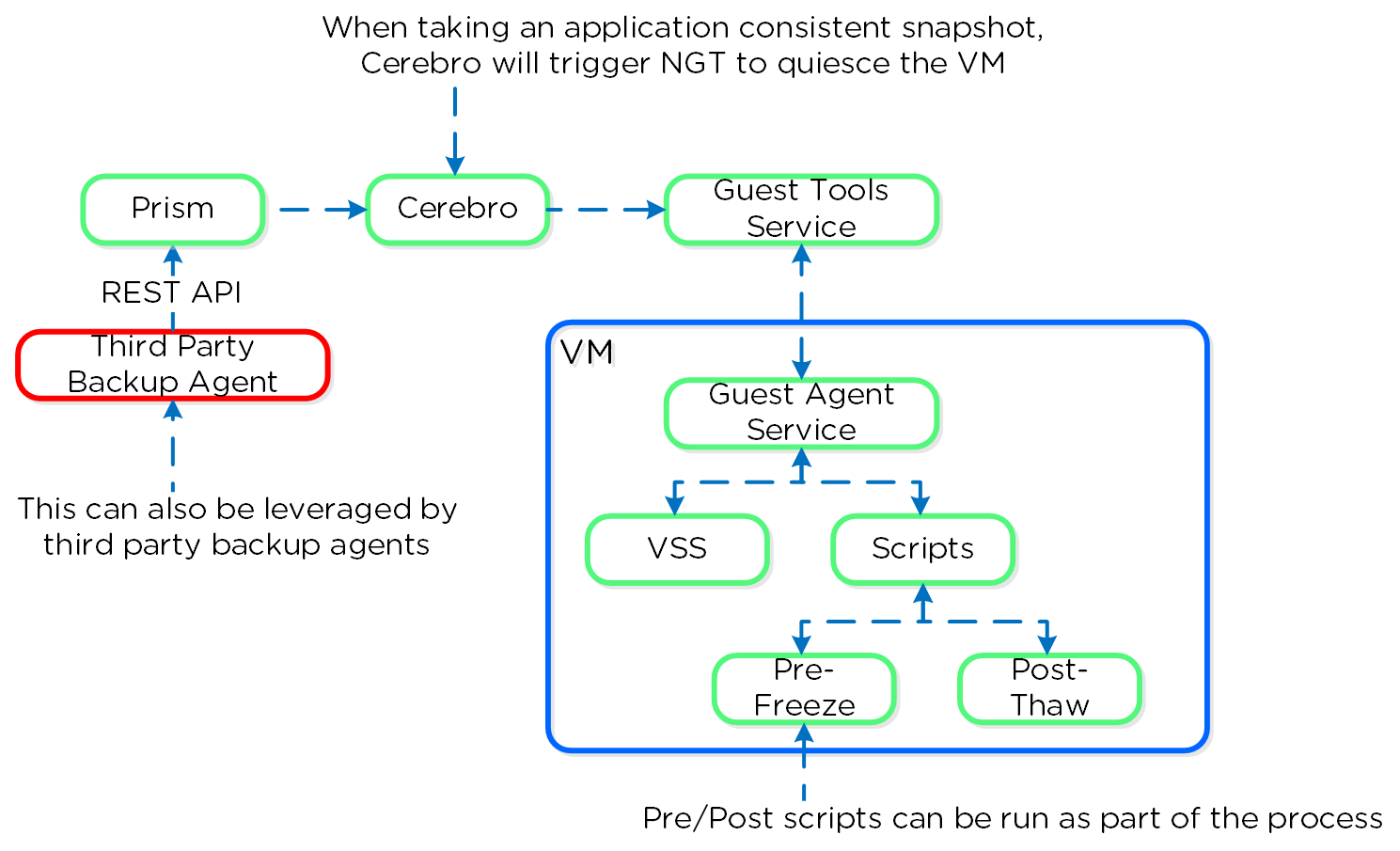

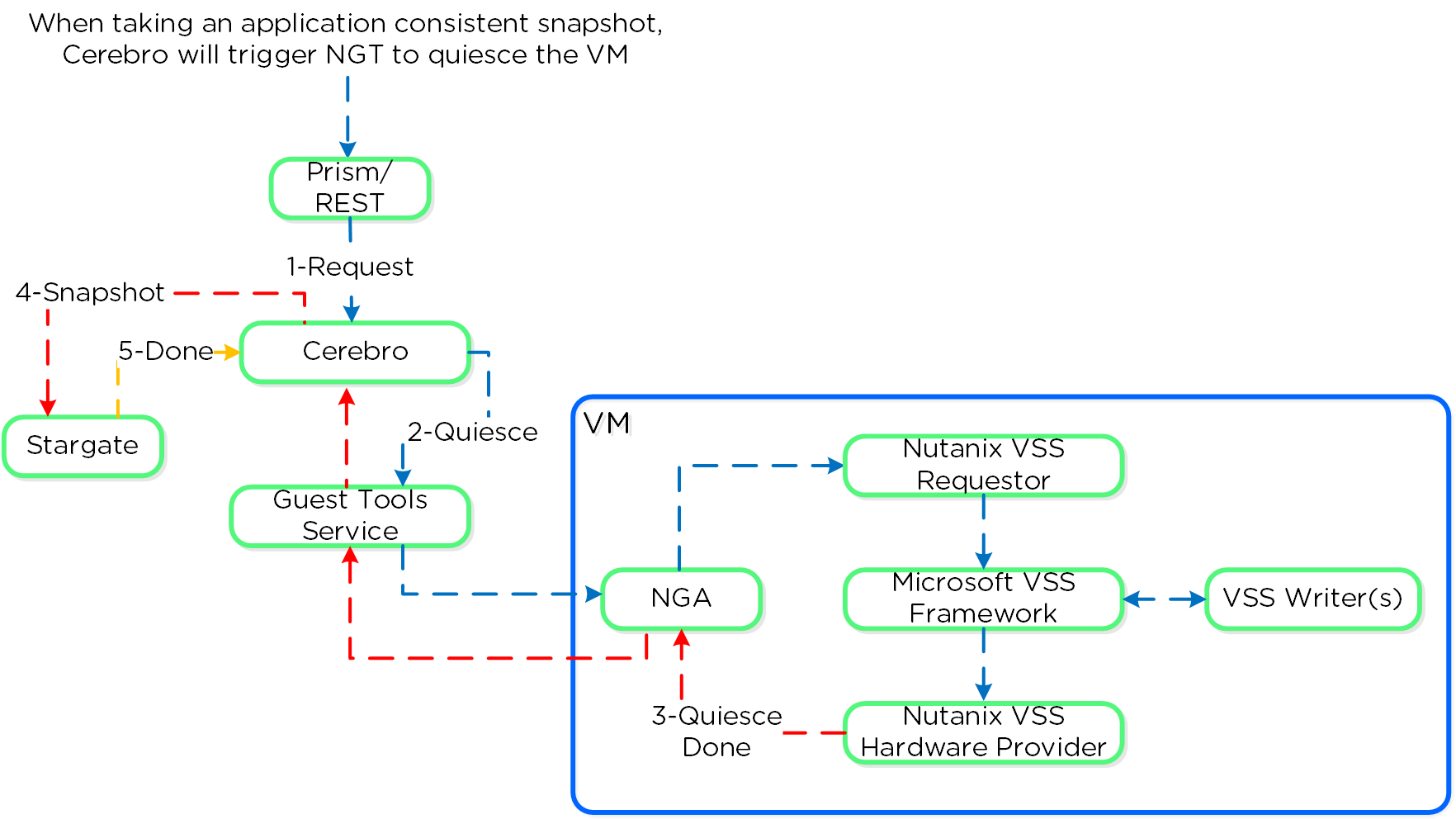

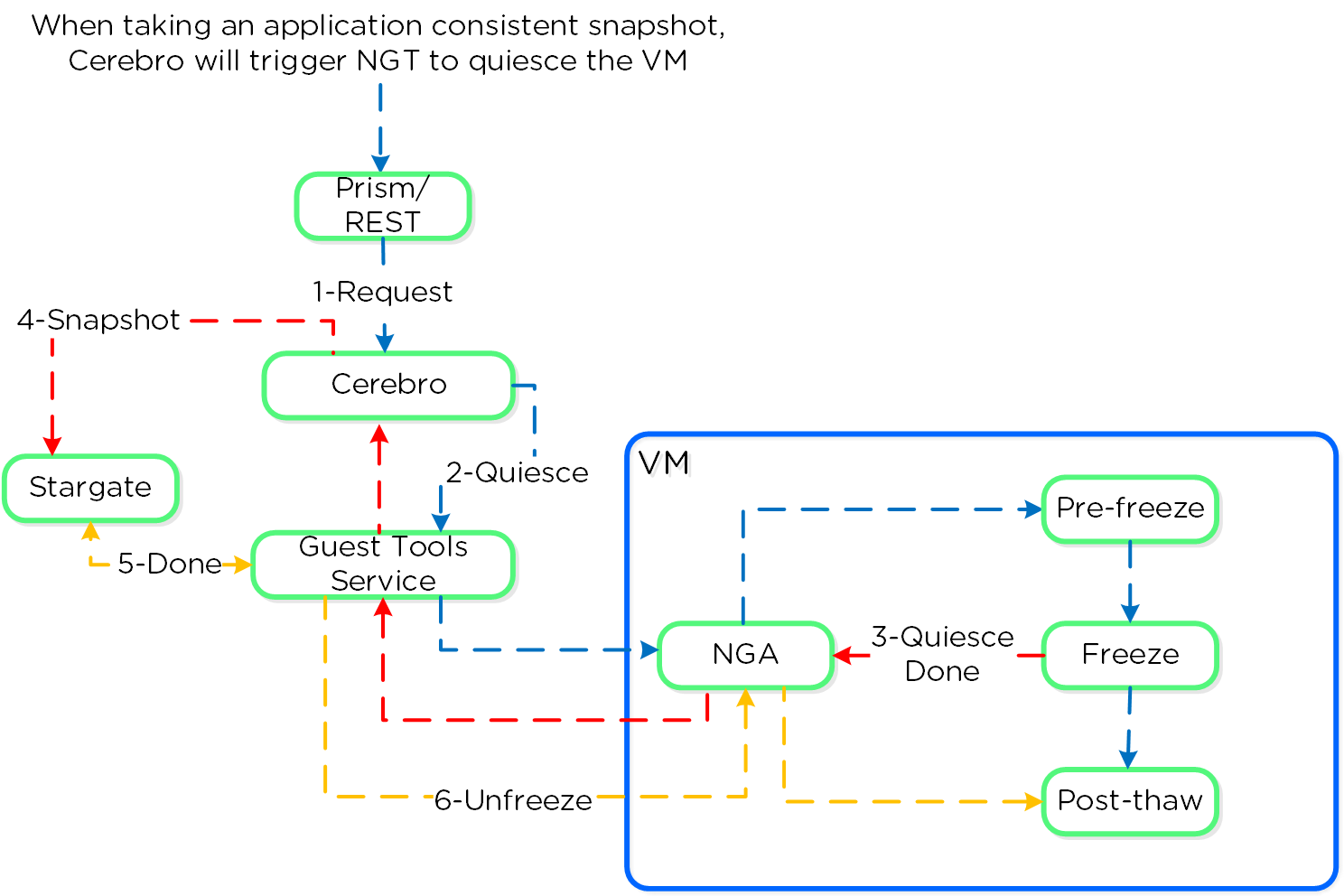

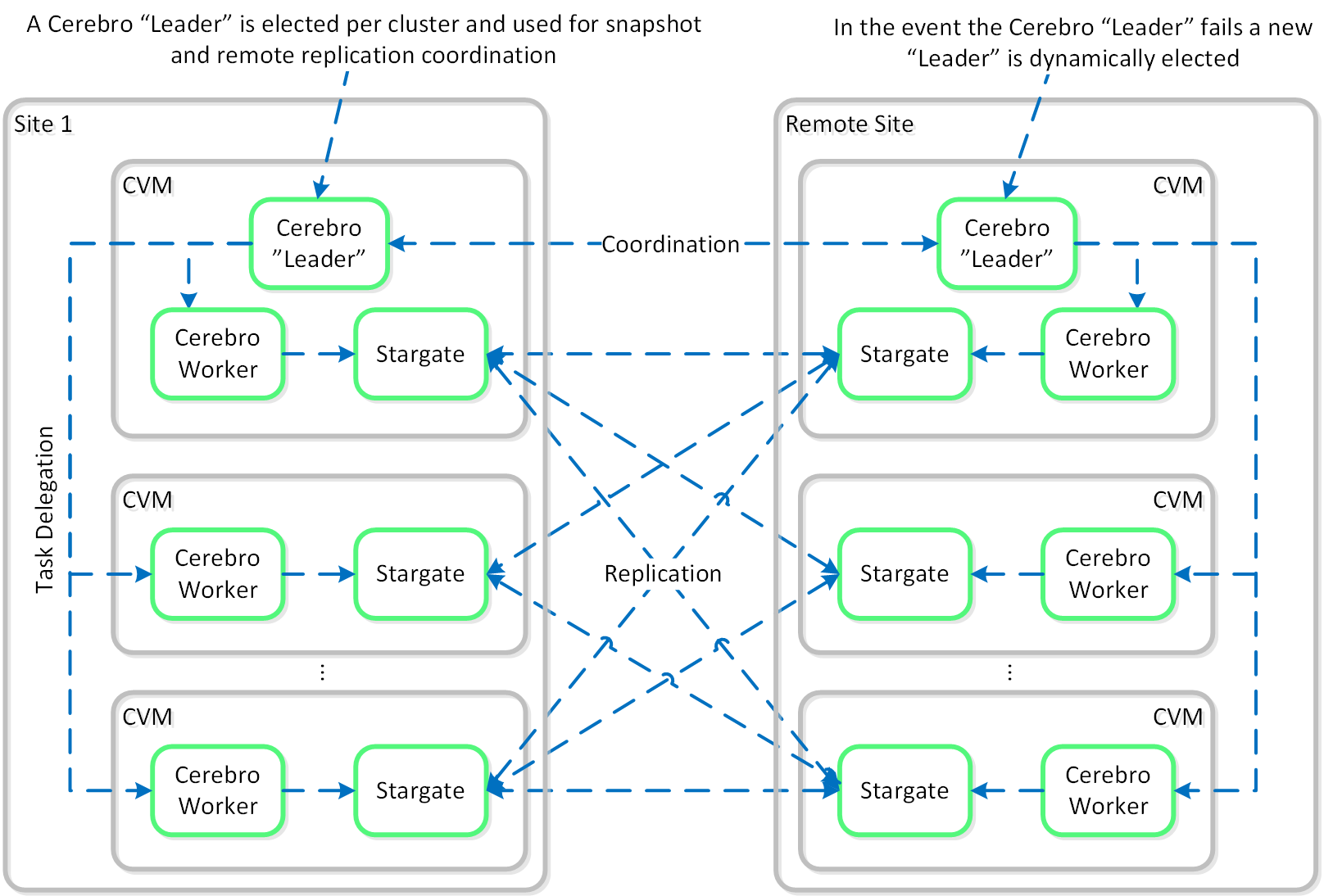

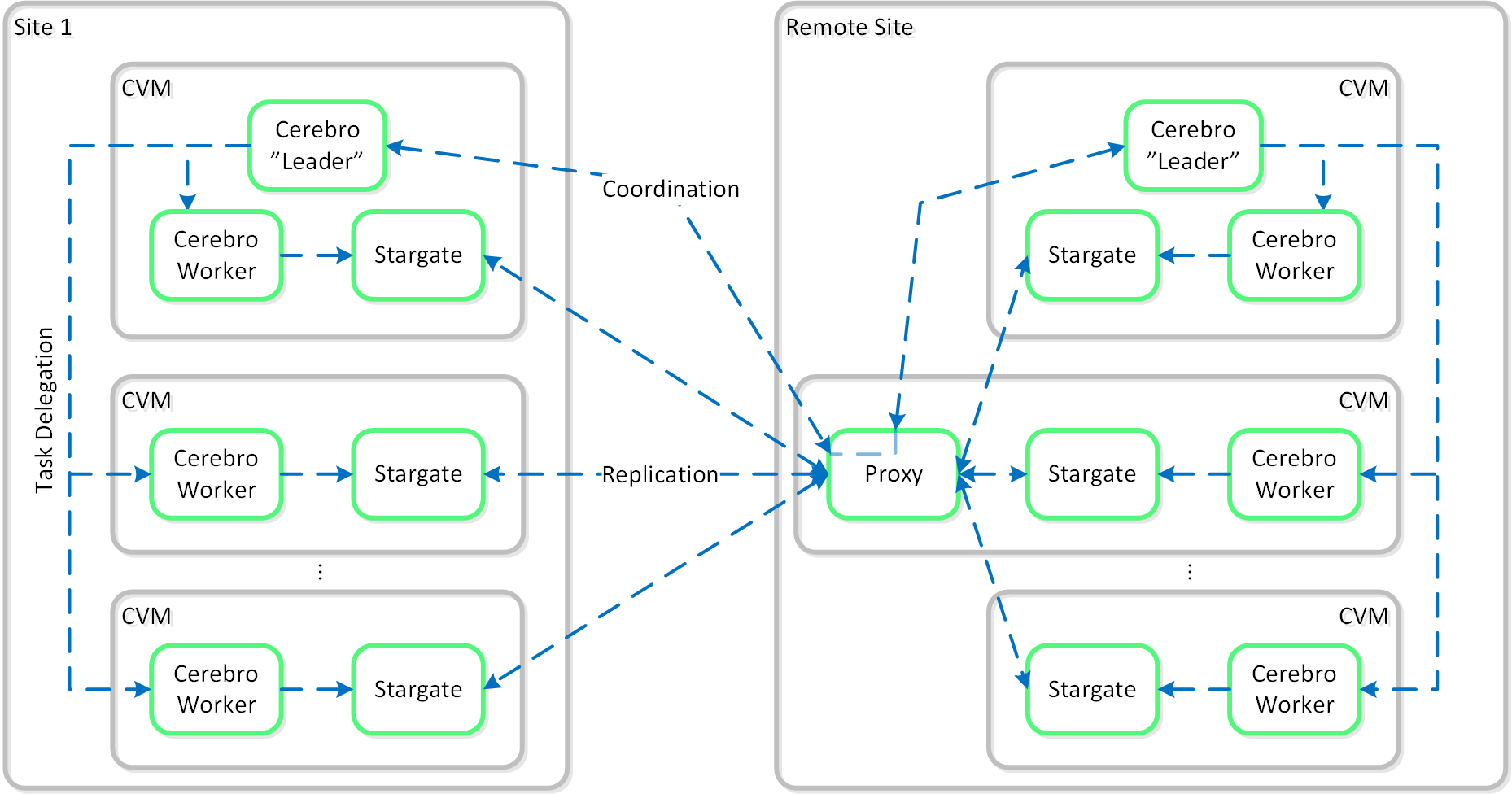

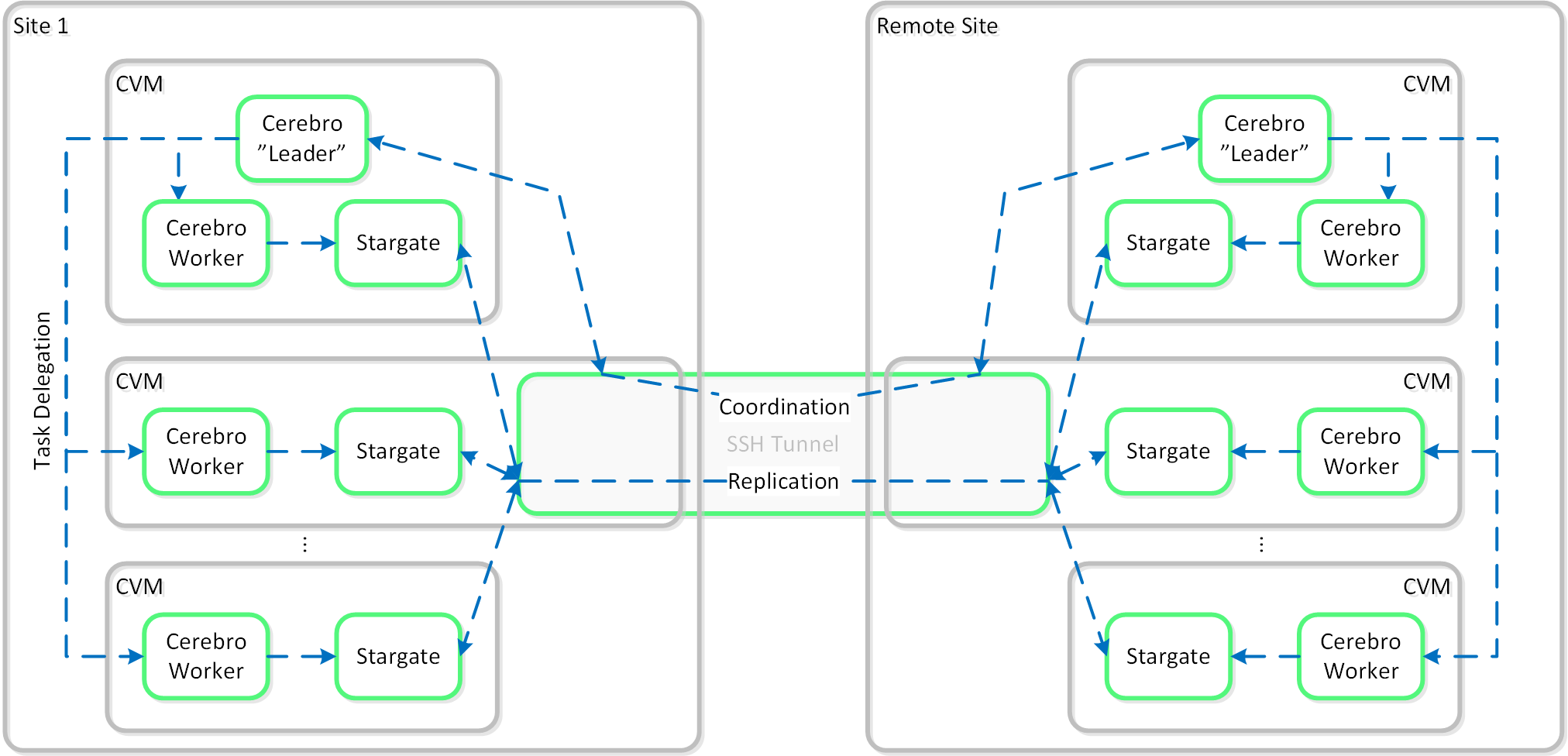

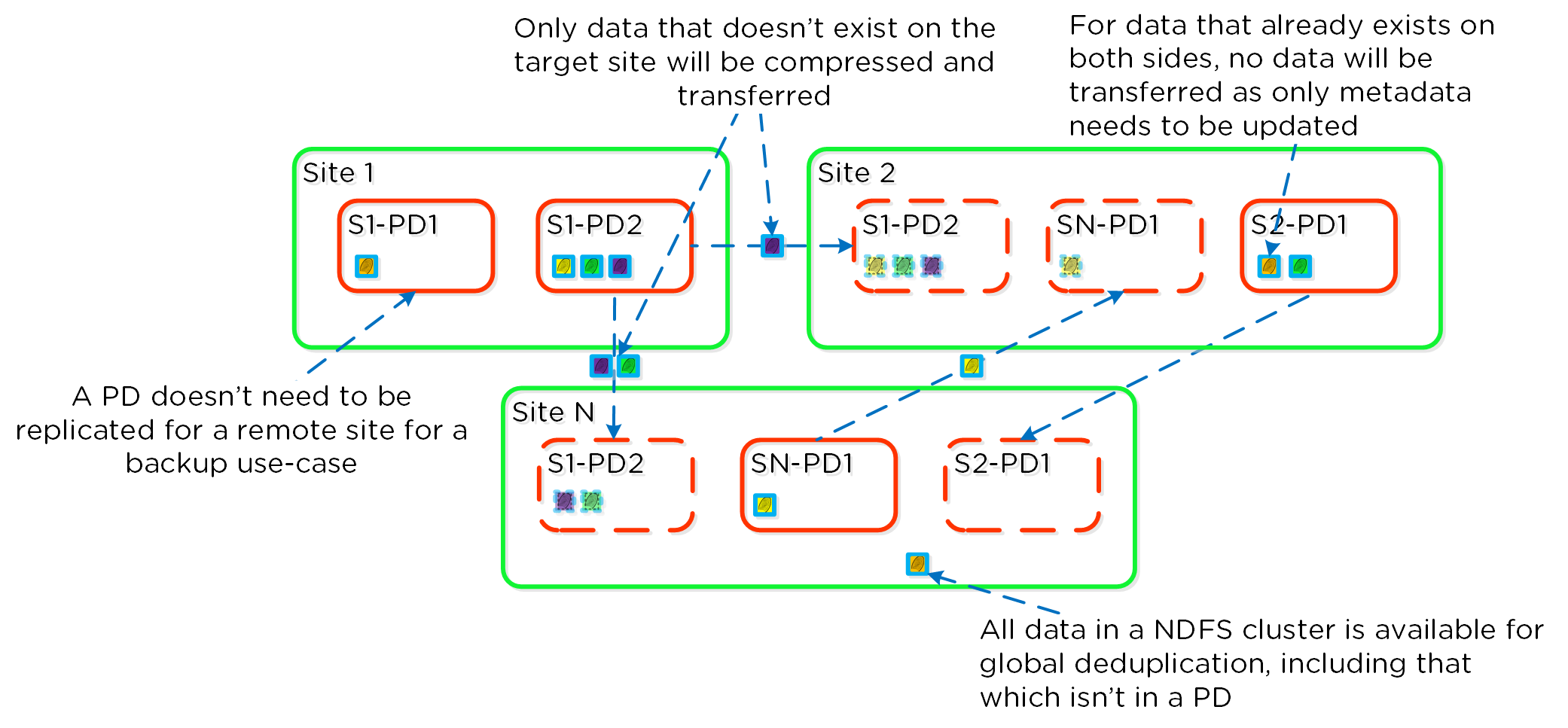

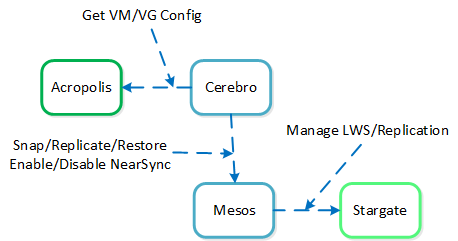

Cerebro

- Key Role: Replication/DR manager

- Description: Cerebro is responsible for the replication and DR capabilities of DSF. This includes the scheduling of snapshots, the replication to remote sites, and the site migration/failover. Cerebro runs on every node in the Nutanix cluster and all nodes participate in replication to remote clusters/sites.

Pithos

- Key Role: vDisk configuration manager

- Description: Pithos is responsible for vDisk (DSF file) configuration data. Pithos runs on every node and is built on top of Cassandra.

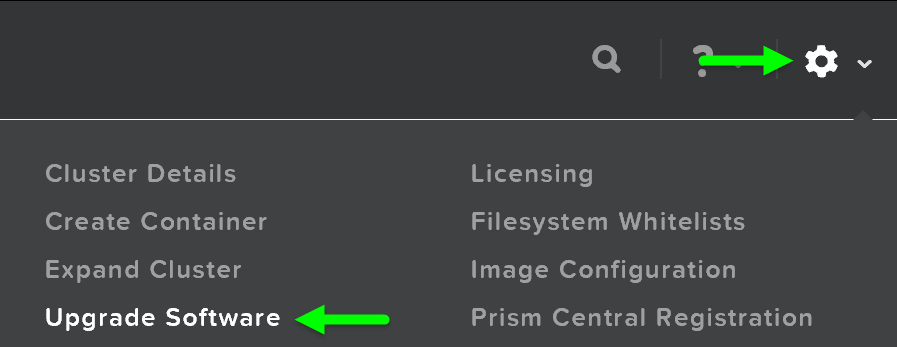

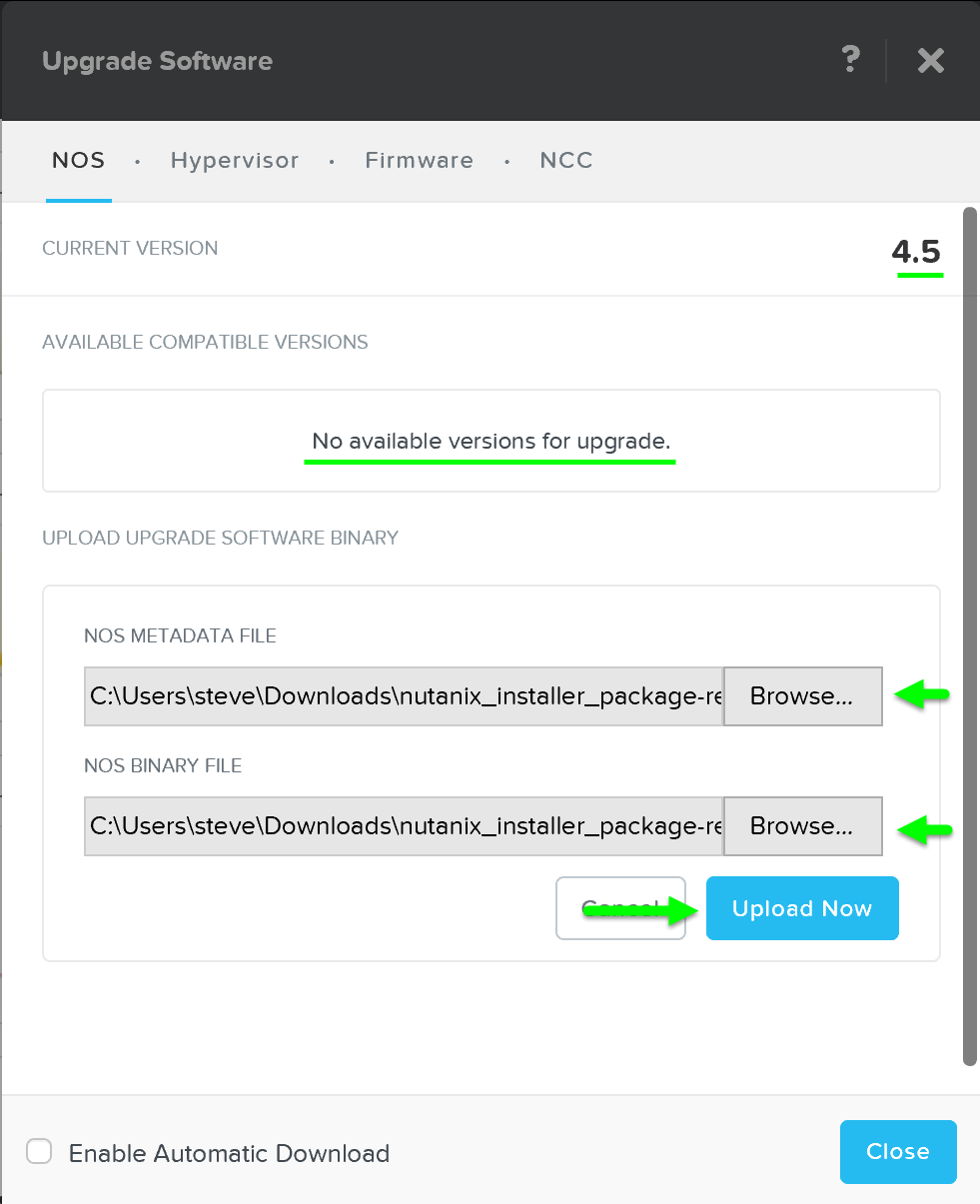

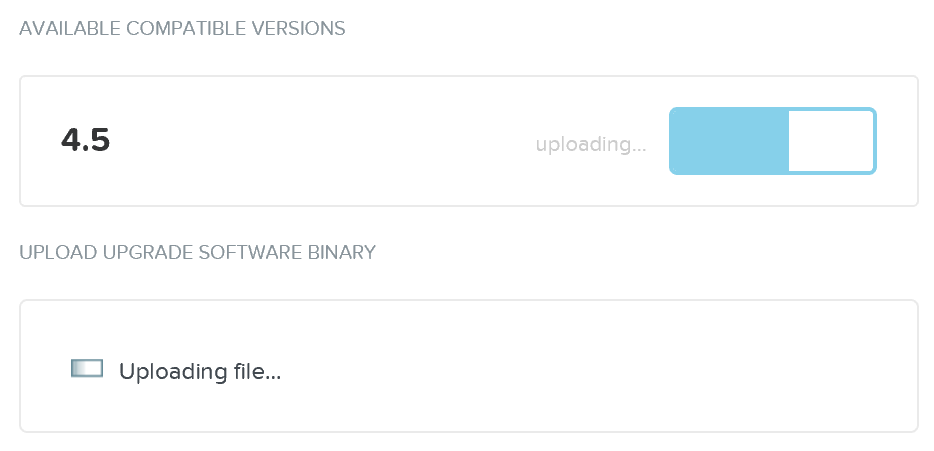

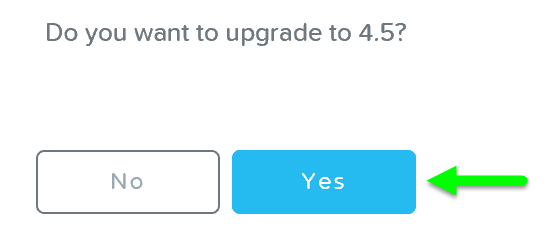

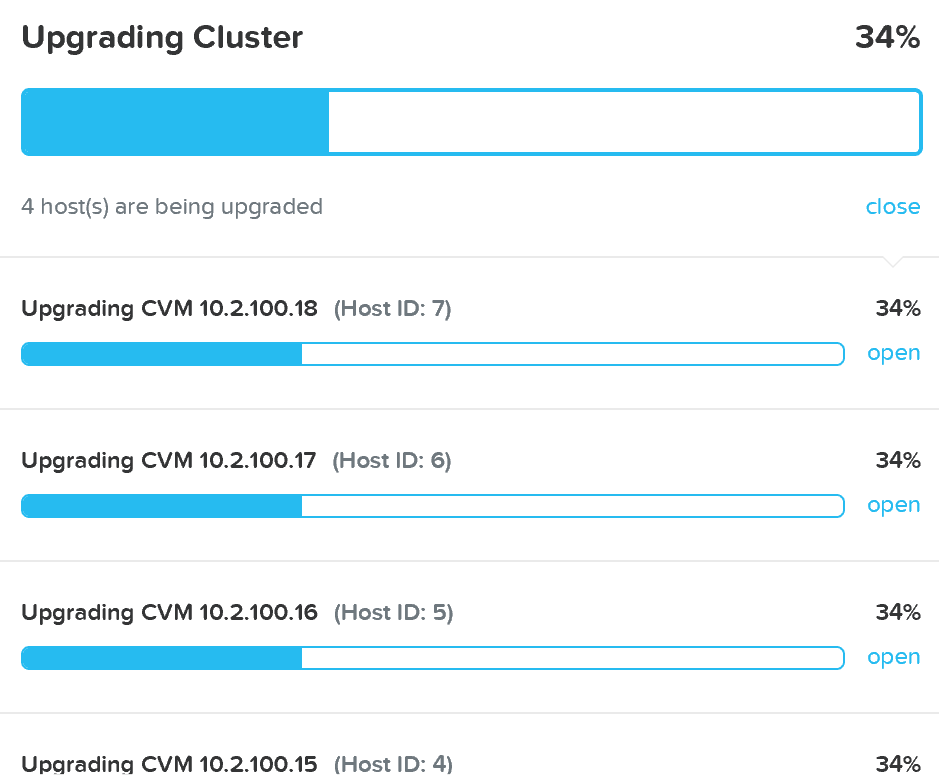

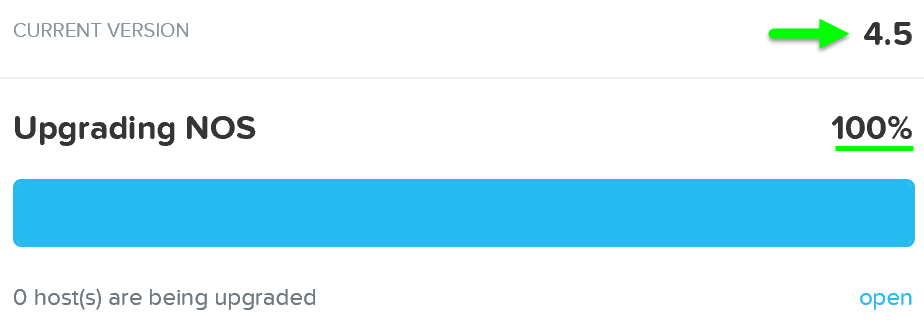

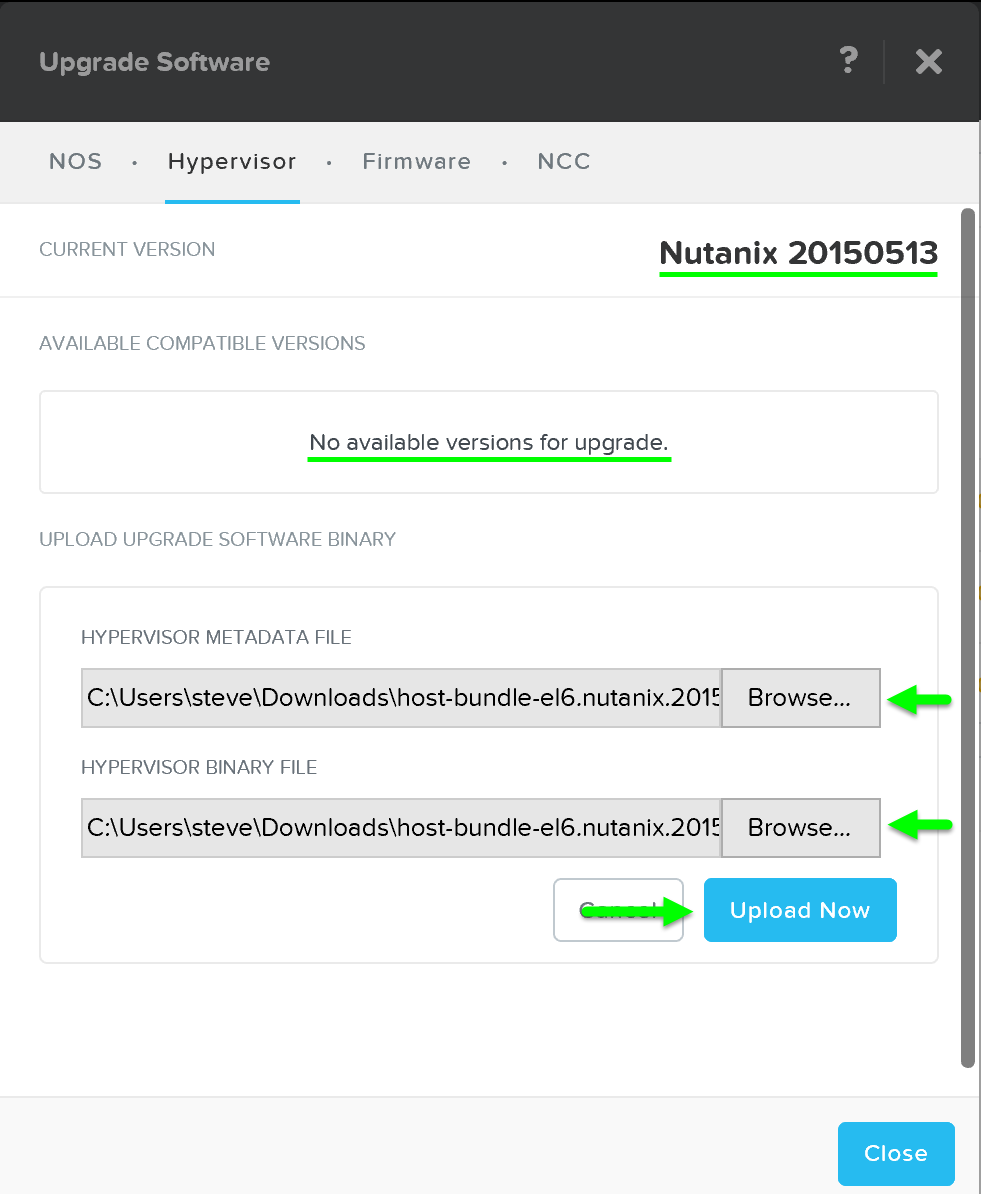

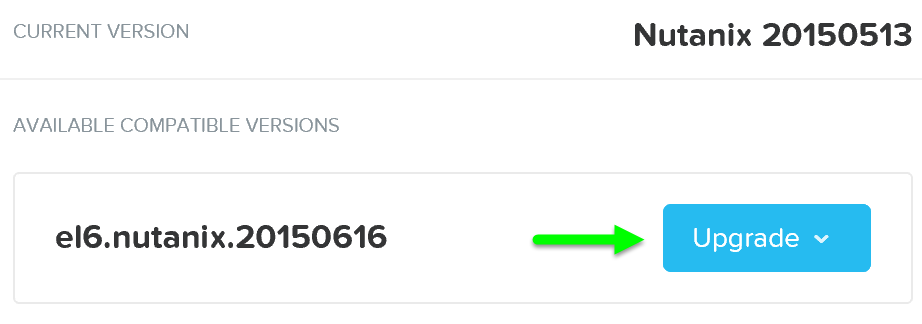

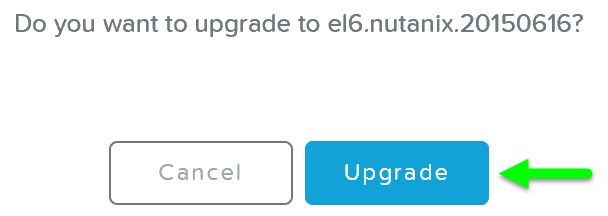

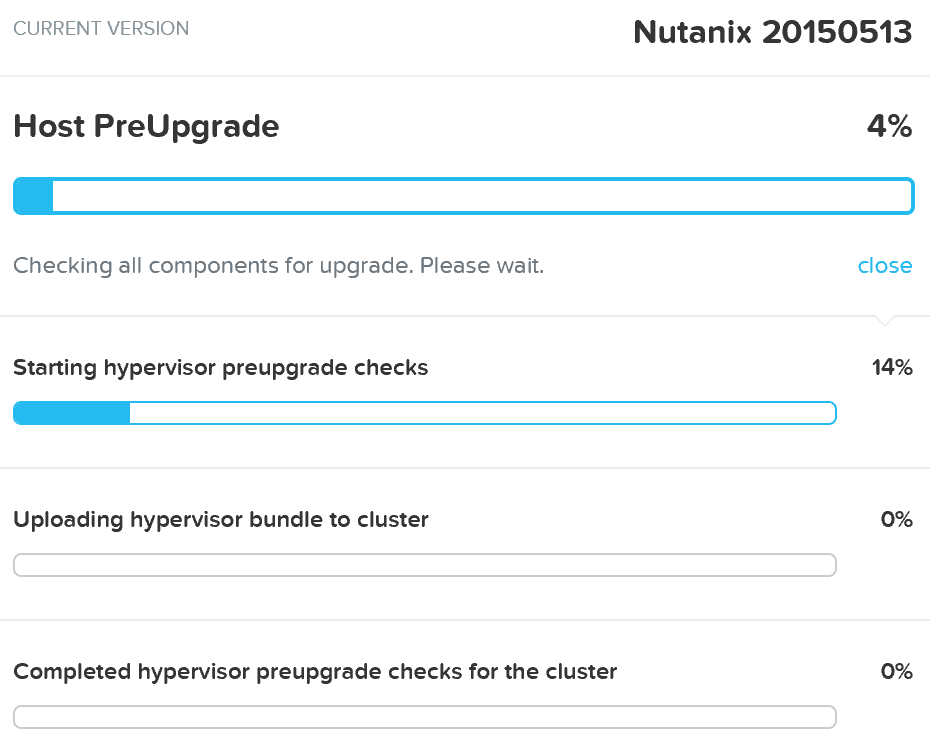

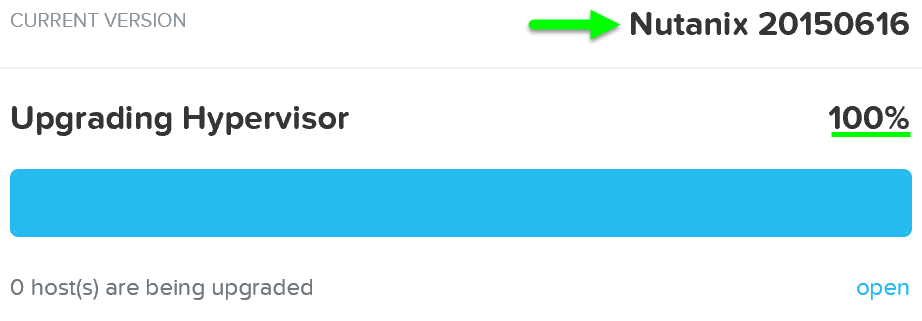

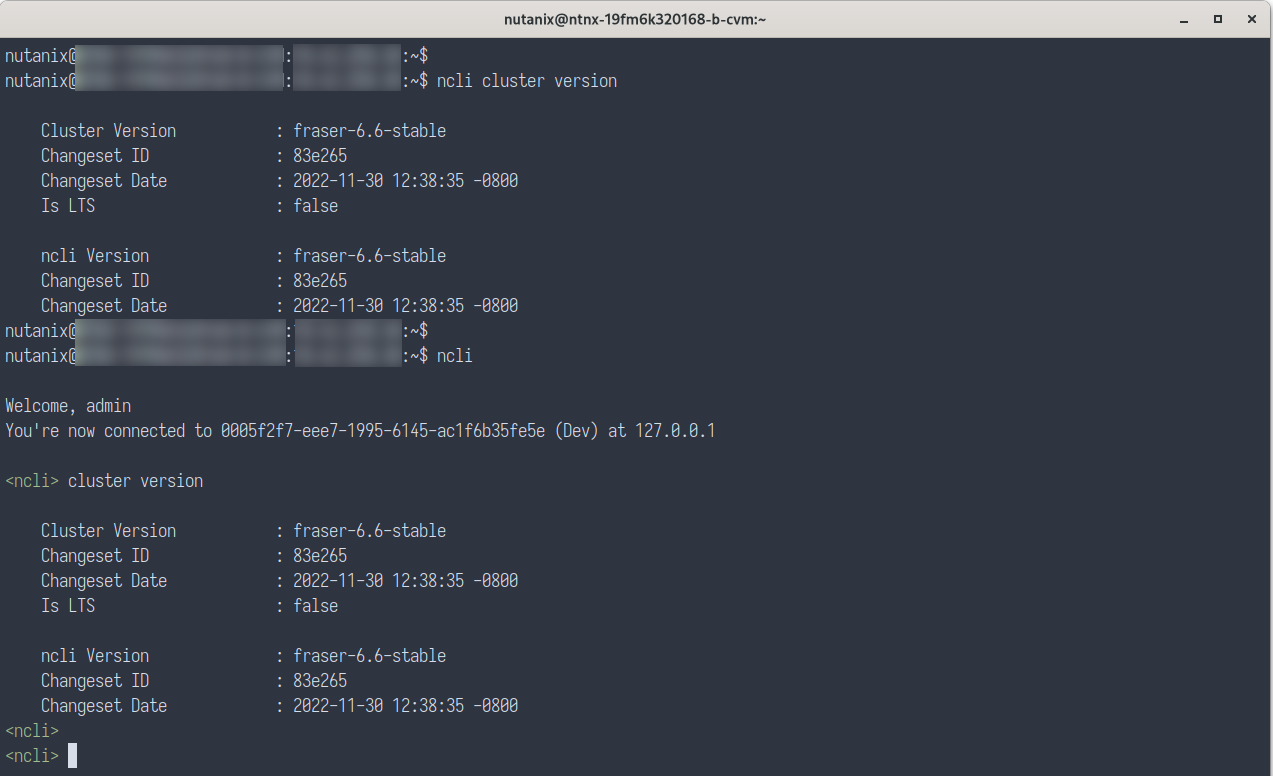

In ‘Nutanix Software Upgrade’ and ‘Hypervisor Upgrade’ within the Prism section, we highlighted the steps used to perform an upgrade of AOS and hypervisor versions. This section will cover the techniques allowing us to perform different types of upgrades in a non-disruptive manner.

AOS Upgrades

For an AOS upgrade there are a few core steps that are performed:

1 - Pre-upgrade Checks

During the pre-upgrade checks, the following items are verified. NOTE: This must complete successfully before an upgrade can continue.

- Check version compatibility between AOS and hypervisor versions

- Check cluster health (cluster status, free space, and component checks (e.g. Medusa, Stargate, Zookeeper, etc.))

- Check network connectivity between all CVMs and Hypervisors

2 - Upload upgrade software to 2 nodes

Once the pre-upgrade checks have been completed, the system will upload the upgrade software binaries to two nodes in the cluster. This is done for fault-tolerance and to ensure if one CVM is rebooting the other is available for others to pull the software from.

3 - Stage Upgrade Software

Once the software has been uploaded to two CVMs, all CVMs will stage the upgrade in parallel.

The CVMs have two partitions for AOS versions:

- Active partition (the currently running version)

- Passive partition (where upgrades are staged)

When an AOS upgrade occurs, we perform the upgrade on the non-active partition. When the upgrade token is received it will mark the upgraded partition as the active partition and reboot the CVM into the upgraded version. This is similar to a bootbank / altbootbank.

NOTE: the upgrade token is passed between nodes iteratively. This ensures only one CVM reboots at a time. Once the CVM reboots and is stable (check service status and communication) the token can be passed to the next CVM until all CVMs have been upgraded.
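The staging and token-passing steps above can be sketched as a small simulation. This is an illustrative model only: the `CVM` class, its `is_healthy` placeholder, and `rolling_upgrade` are hypothetical names, and the real upgrade involves far more than swapping two version strings.

```python
from dataclasses import dataclass


@dataclass
class CVM:
    name: str
    active: str        # version on the active partition
    passive: str = ""  # version staged on the passive partition

    def stage(self, version: str) -> None:
        """Stage the new version on the passive partition."""
        self.passive = version

    def reboot_into_staged(self) -> None:
        """Swap partitions so the staged version becomes the active one."""
        self.active, self.passive = self.passive, self.active

    def is_healthy(self) -> bool:
        """Placeholder for the post-reboot service and communication checks."""
        return True


def rolling_upgrade(cvms, version):
    """Pass a single upgrade token from CVM to CVM; stall if one is unhealthy."""
    for cvm in cvms:  # staging happens on every CVM before any reboot
        cvm.stage(version)
    history = []
    for cvm in cvms:  # the loop's current CVM is the one holding the token
        cvm.reboot_into_staged()
        if not cvm.is_healthy():
            break  # stall: the token is not passed to the next CVM
        history.append([c.active for c in cvms])
    return history


if __name__ == "__main__":
    cluster = [CVM(f"cvm-{i}", active="x.y.foo") for i in range(1, 5)]
    for step in rolling_upgrade(cluster, "x.y.bar"):
        print(step)
```

Each printed row is the cluster's version state after one CVM reboots, so only one CVM changes per step and the cluster runs in mixed mode until the token has visited every CVM.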

Note

Upgrade Error Handling

A common question is what happens if the upgrade is unsuccessful or has an issue partially through the process?

In the event some upgrade issue occurs we will stall the upgrade and not progress. NOTE: this is a very infrequent occurrence as pre-upgrade checks will find most issues before the upgrade actually begins. However, in the event the pre-upgrade checks succeed and some issue occurs during the actual upgrade, there will be no impact to workloads and user I/O running on the cluster.

The Nutanix software is designed to work indefinitely in a mixed mode between supported upgrade versions. For example, if the cluster is running x.y.foo and is upgrading to x.y.bar the system can run indefinitely with CVMs on both versions. This is actually what occurs during the upgrade process.

For example, if you have a 4 node cluster on x.y.foo and start the upgrade to x.y.bar, when the first node upgrades it will be running x.y.bar while the others are on x.y.foo. This process will continue and CVMs will reboot into x.y.bar as they receive the upgrade token.

Foundation Imaging Architecture

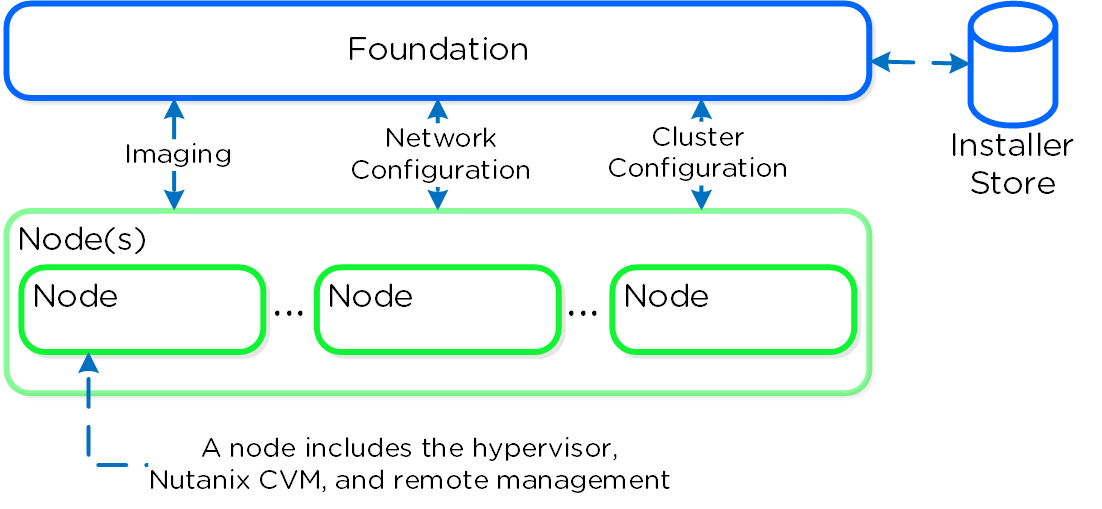

Foundation is a Nutanix provided tool leveraged for bootstrapping, imaging and deployment of Nutanix clusters. The imaging process will install the desired version of the AOS software as well as the hypervisor of choice.

By default Nutanix nodes ship with AHV pre-installed. To leverage a different hypervisor type you must use Foundation to re-image the nodes with the desired hypervisor. NOTE: Some OEMs will ship directly from the factory with the desired hypervisor.

The figure shows a high level view of the Foundation architecture:

Foundation - Architecture

As of 4.5, Foundation is built into the CVMs to simplify configuration. The installer store is a directory for storing uploaded images; these can be used for the initial imaging as well as cluster expansion when imaging is required.

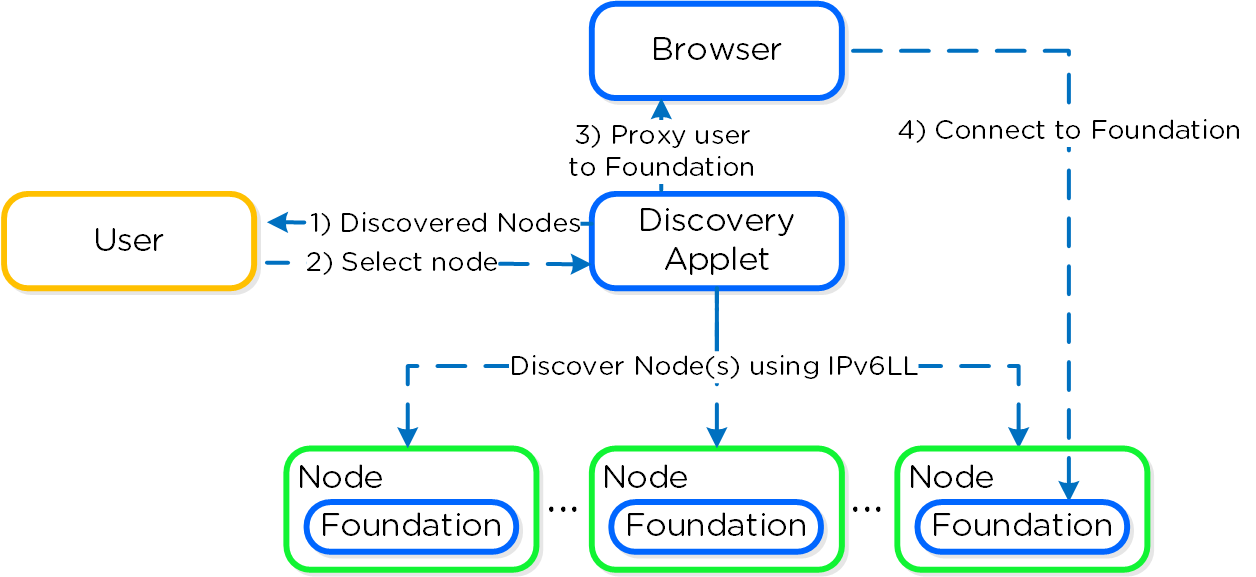

The Foundation Discovery Applet is responsible for discovering nodes and allowing the user to select a node to connect to. Once the user has selected a node, the applet will proxy localhost:9442 (IPv4) to the CVM’s IPv6 link-local address on port 8000.

The figure shows a high level view of the applet architecture:

Foundation - Applet Architecture

NOTE: the discovery applet is merely a means of discovery and proxy to the Foundation service which runs on the nodes. All of the imaging and configuration is handled by the Foundation service, not the applet.

Note

Pro tip

If you're on a different network (L2) than your target Nutanix nodes (e.g. over the WAN) you can connect directly to the Foundation service on the CVM if it has an IPv4 address assigned (instead of using the discovery applet).

To directly connect browse to <CVM_IP>:8000/gui/index.html

Inputs

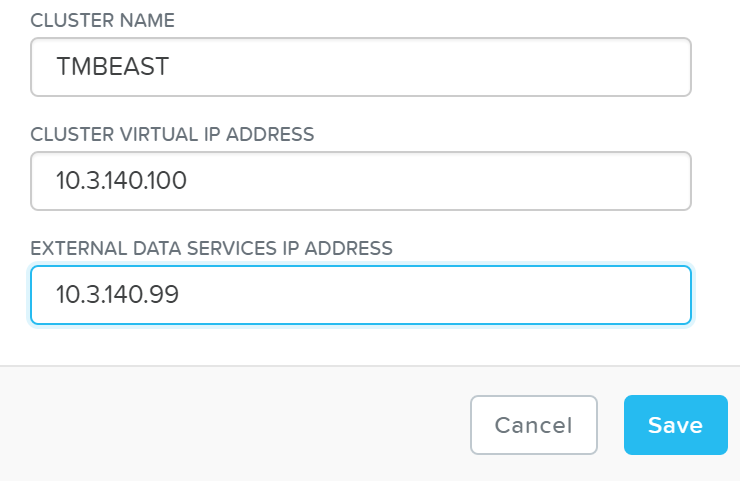

The Foundation tool has the following configuration inputs (below). A typical deployment requires 3 IP addresses per node (hypervisor, CVM, remote management (e.g. IPMI, iDRAC, etc.)). In addition to the per node addresses, it is recommended to set Cluster and Data Services IP addresses.

- Cluster

  - Name

  - IP*

  - NTP*

  - DNS*

- CVM

  - IP per CVM

  - Netmask

  - Gateway

  - Memory

- Hypervisor

  - IP per hypervisor host

  - Netmask

  - Gateway

  - DNS*

  - Hostname prefix

- IPMI*

  - IP per node

  - Netmask

  - Gateway

NOTE: Items marked with ‘*’ are optional but highly advisable
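As a rough planning aid, the address count described above (3 per node, plus the recommended Cluster and Data Services IPs) can be tallied with a few lines of Python. This is only a sketch: the function name `foundation_ip_count` and its parameters are illustrative and are not part of any Nutanix tool.

```python
def foundation_ip_count(num_nodes: int,
                        cluster_ip: bool = True,
                        data_services_ip: bool = True) -> int:
    """Count the IP addresses to reserve before running Foundation.

    Assumes the typical layout from the text: one hypervisor IP, one CVM IP
    and one remote management (e.g. IPMI) IP per node, plus the optional
    Cluster and Data Services IPs.
    """
    if num_nodes < 1:
        raise ValueError("num_nodes must be at least 1")
    per_node = 3  # hypervisor + CVM + remote management
    return num_nodes * per_node + int(cluster_ip) + int(data_services_ip)


if __name__ == "__main__":
    # A 4 node cluster with both recommended cluster-level addresses.
    print(foundation_ip_count(4))  # 14
```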

System Imaging and Deployment

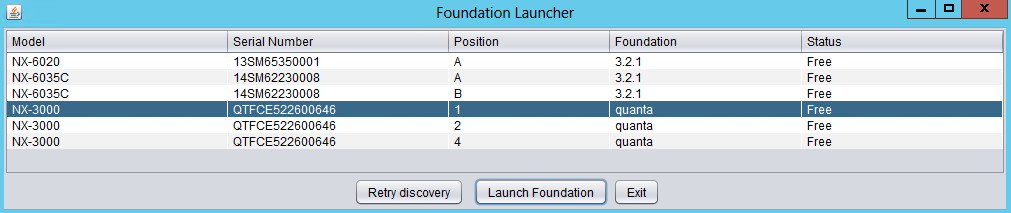

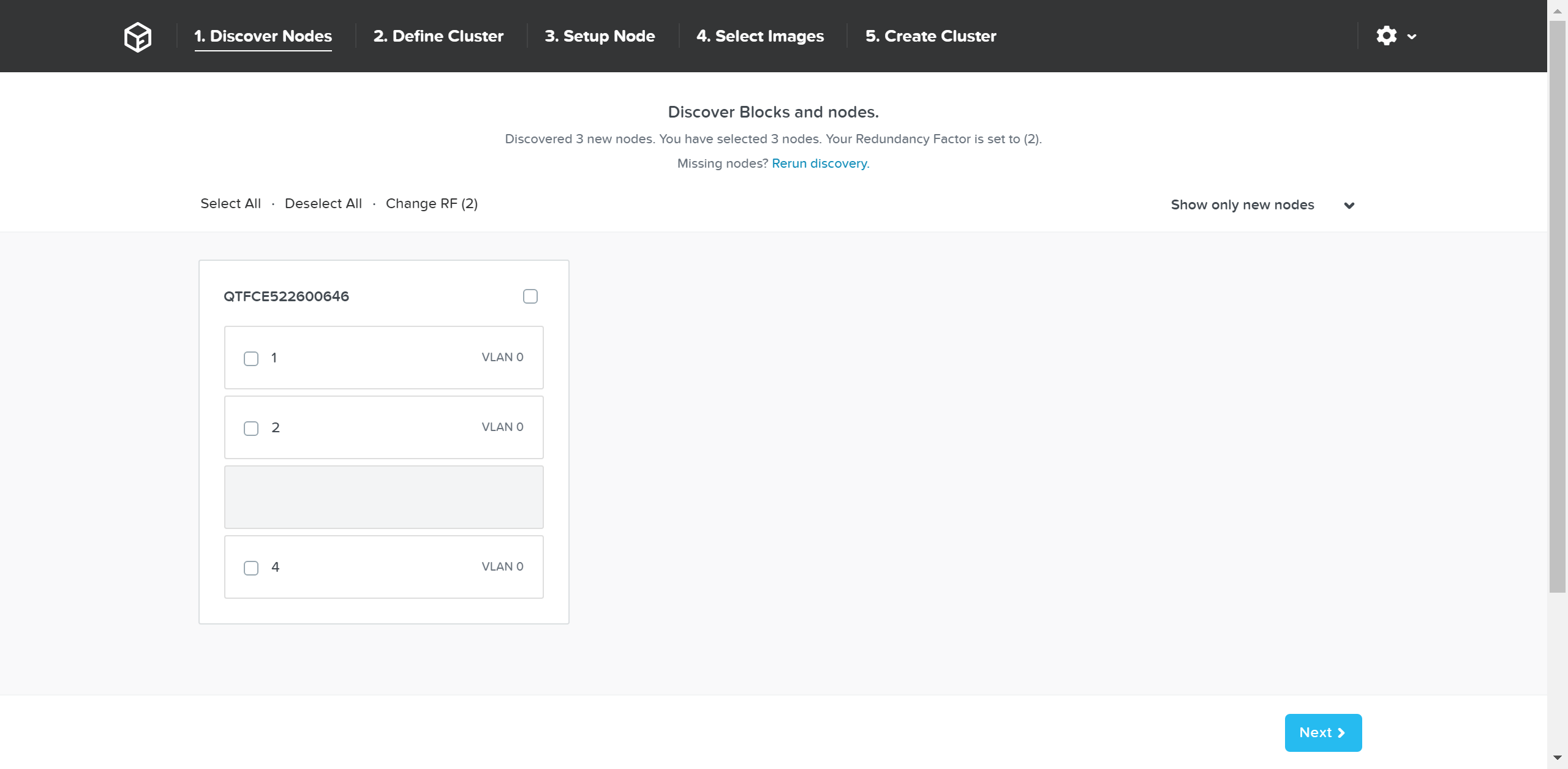

The first step is to connect to the Foundation UI, which can be done via the discovery applet (if on the same L2, node IPs are unnecessary):

Foundation - Discovery Applet

If you can’t find the desired node, make sure you’re on the same L2 network.

After connecting into the selected node’s Foundation instance the main Foundation UI will appear:

Foundation - Discovery Page

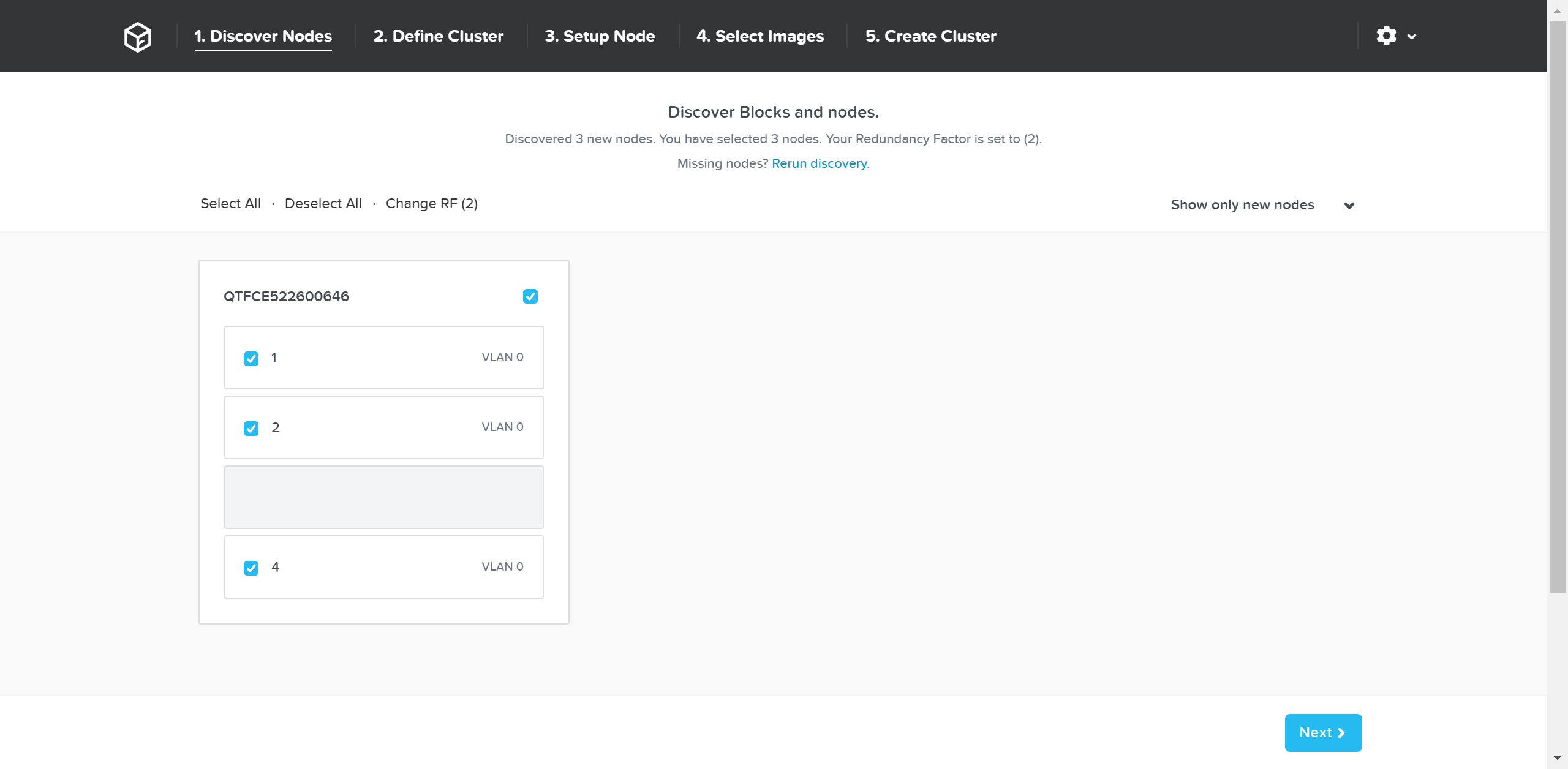

This will show all of the discovered nodes and their chassis. Select the desired nodes to form the cluster and click ‘Next’

Foundation - Node Selection

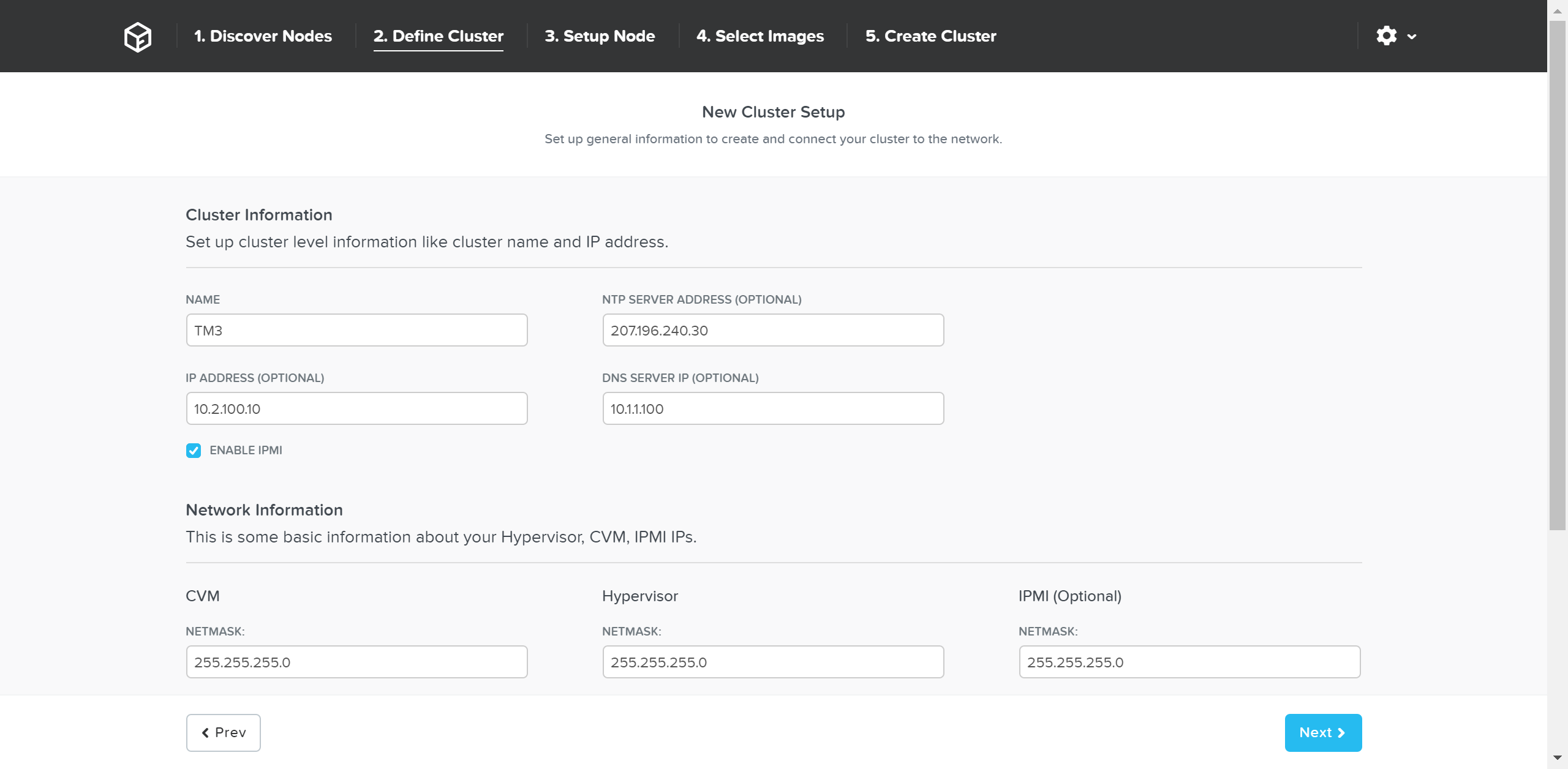

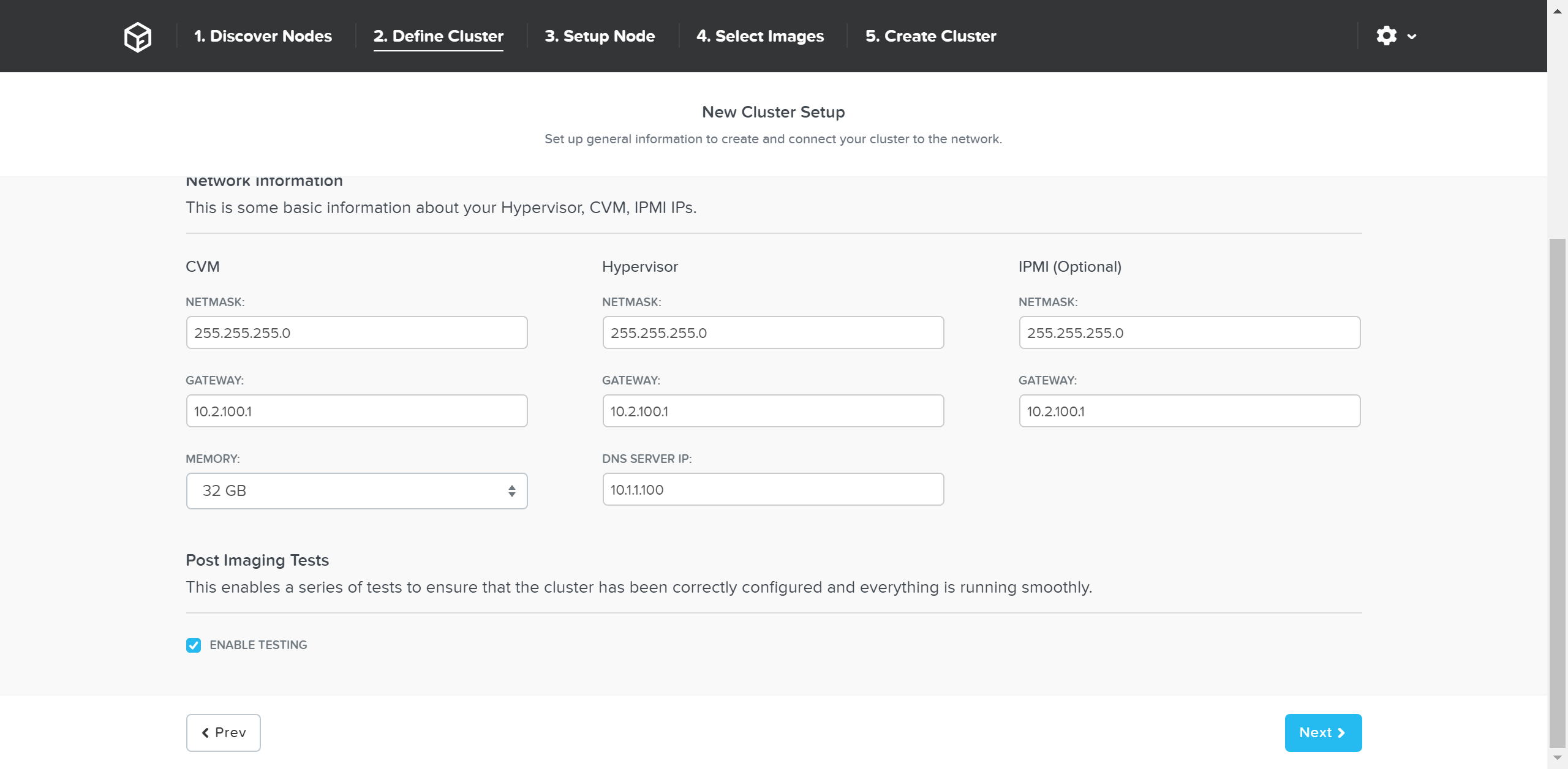

The next page prompts for the cluster and network inputs:

Foundation - Cluster Information

Foundation - Network Information

Once the details have been input, click ‘Next’

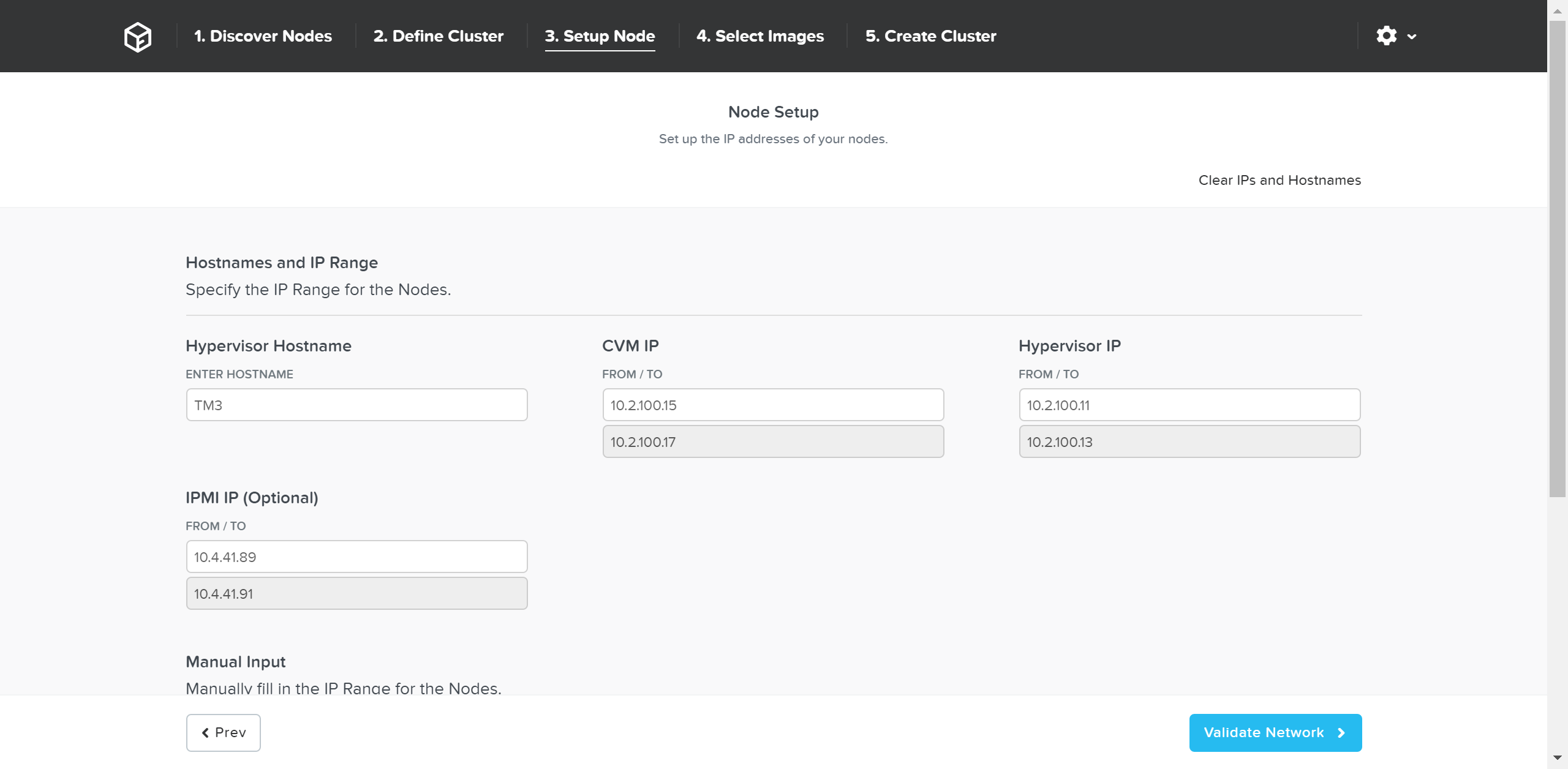

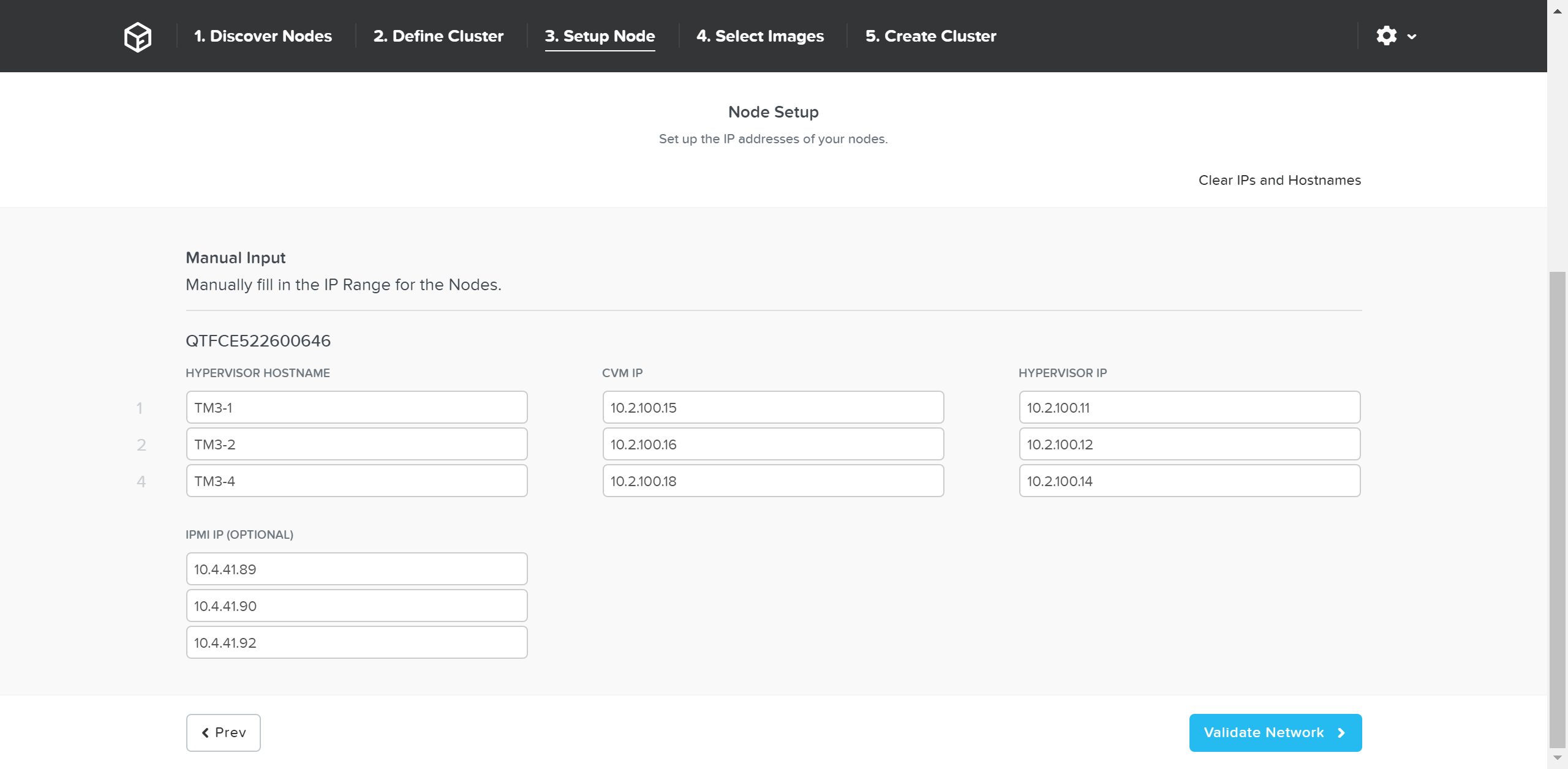

Next we’ll input the node details and IP addresses:

Foundation - Node Setup

You can manually override the hostname and IP addresses if necessary:

Foundation - Hostname and IP

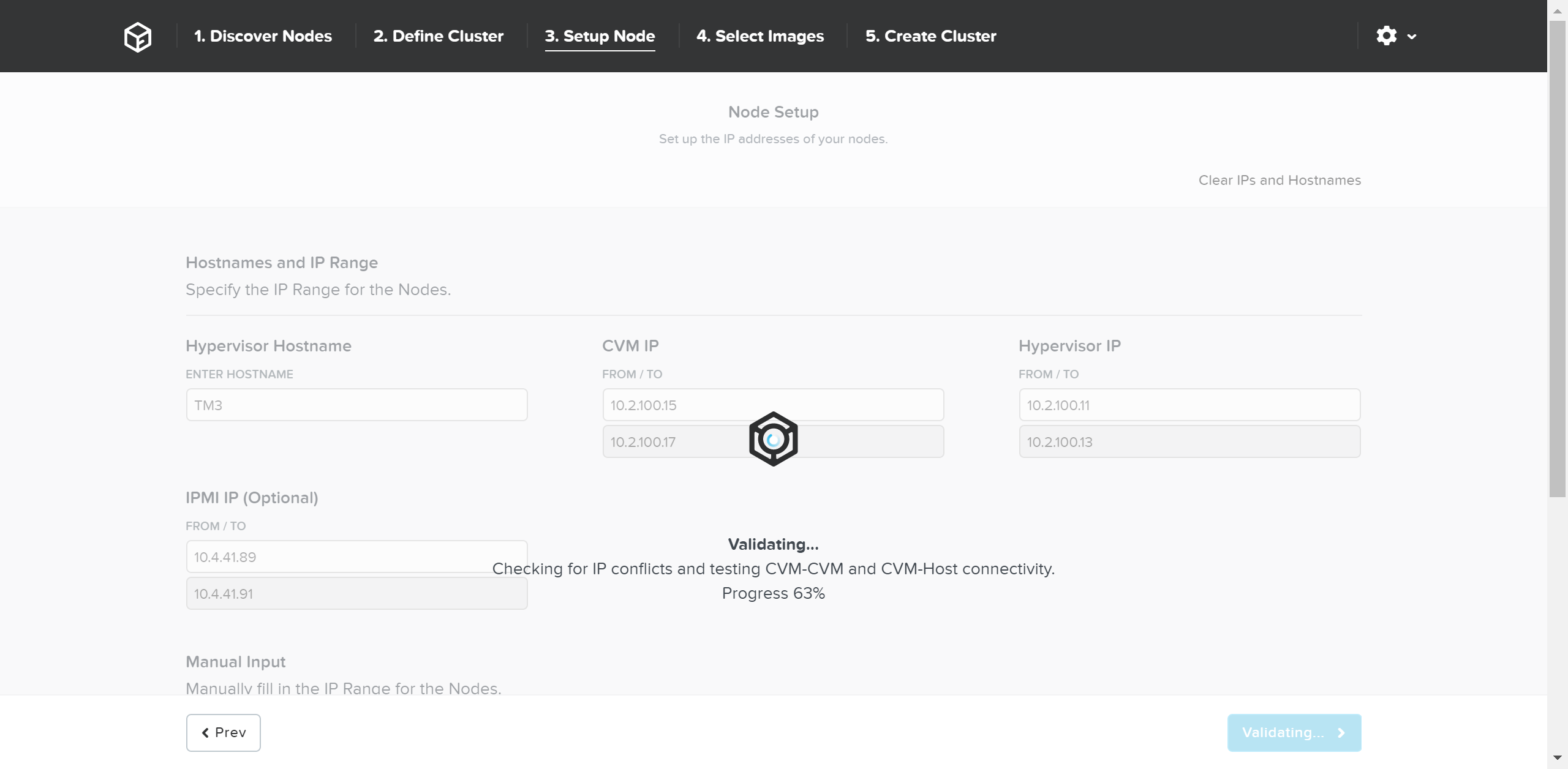

Click ‘Validate Network’ to validate network configuration and proceed. This will check for IP address conflicts and ensure connectivity.

Foundation - Network Validation

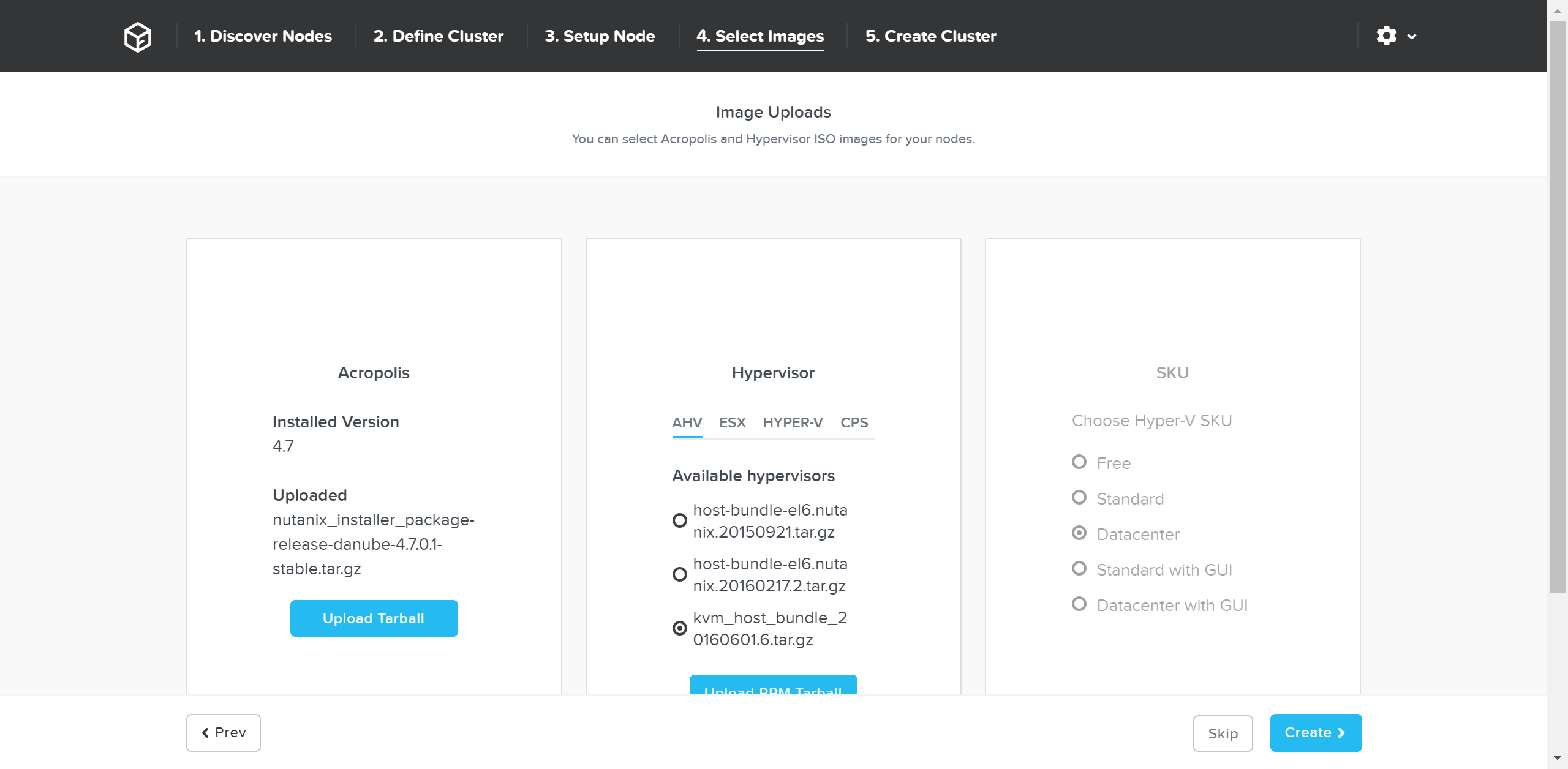

Once network validation has completed successfully we’ll now proceed to selecting the desired images.

To upgrade AOS to a newer version than the one currently on the CVM, download it from the portal and upload the tarball. Once we have the desired AOS image, we’ll select the hypervisor.

For AHV, the image is built into the AOS image. For other hypervisors, you must upload the desired hypervisor image. NOTE: make sure the AOS and hypervisor versions are on the compatibility matrix (LINK).

Once we have the desired images, click ‘Create’:

Foundation - Select Images

If imaging is not necessary you can also click ‘Skip’ to skip the imaging process. This will not re-image the hypervisor or Nutanix cluster, but just configure the cluster (e.g. IP addresses, etc.).

Foundation will then proceed with the imaging (if necessary) and cluster creation process.

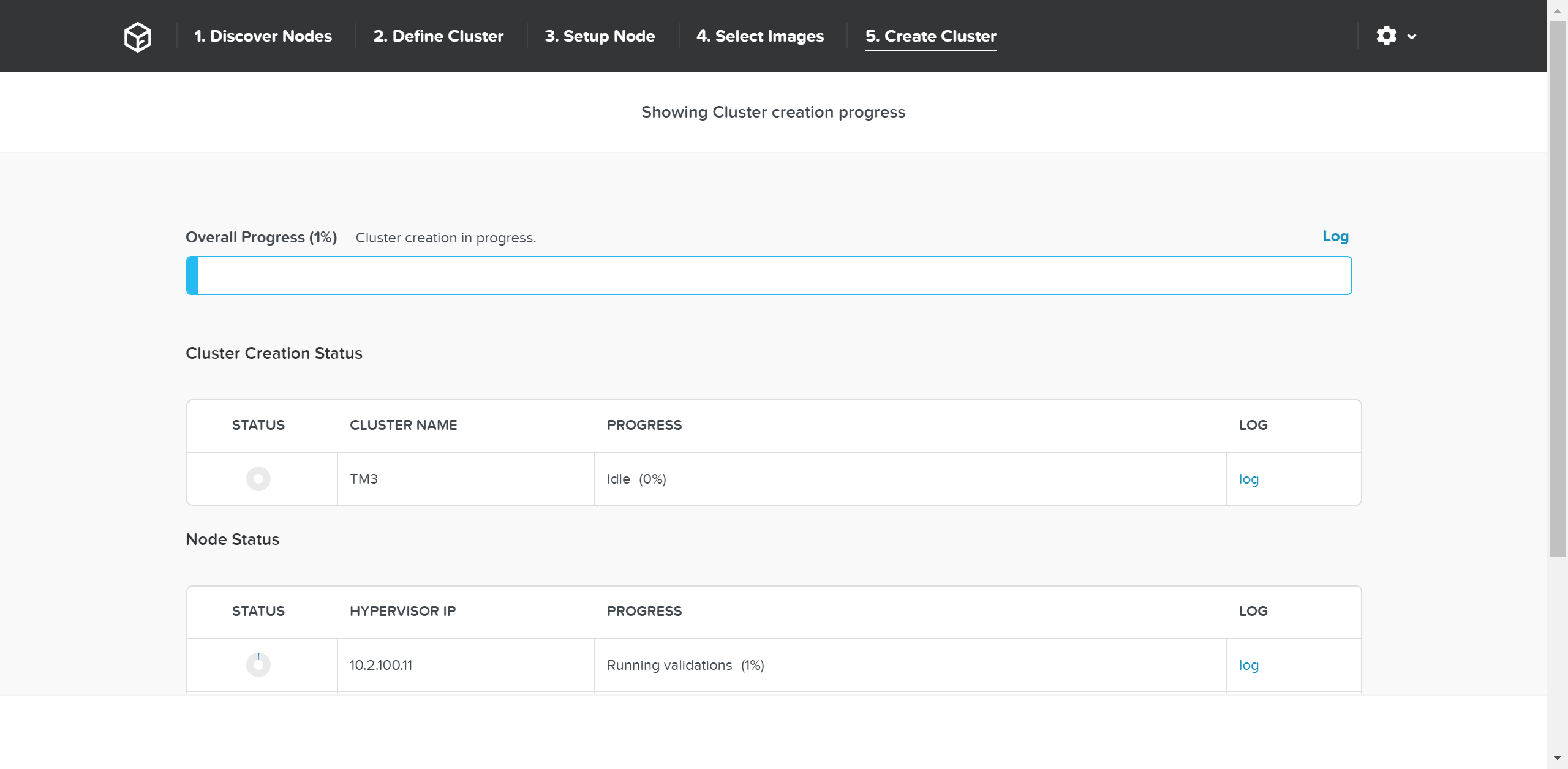

Foundation - Cluster Creation Process

Once the creation is successful you’ll get a completion screen:

Foundation - Cluster Creation Complete

At this point you can now log into any CVM or the Cluster IP and start using the Nutanix platform!

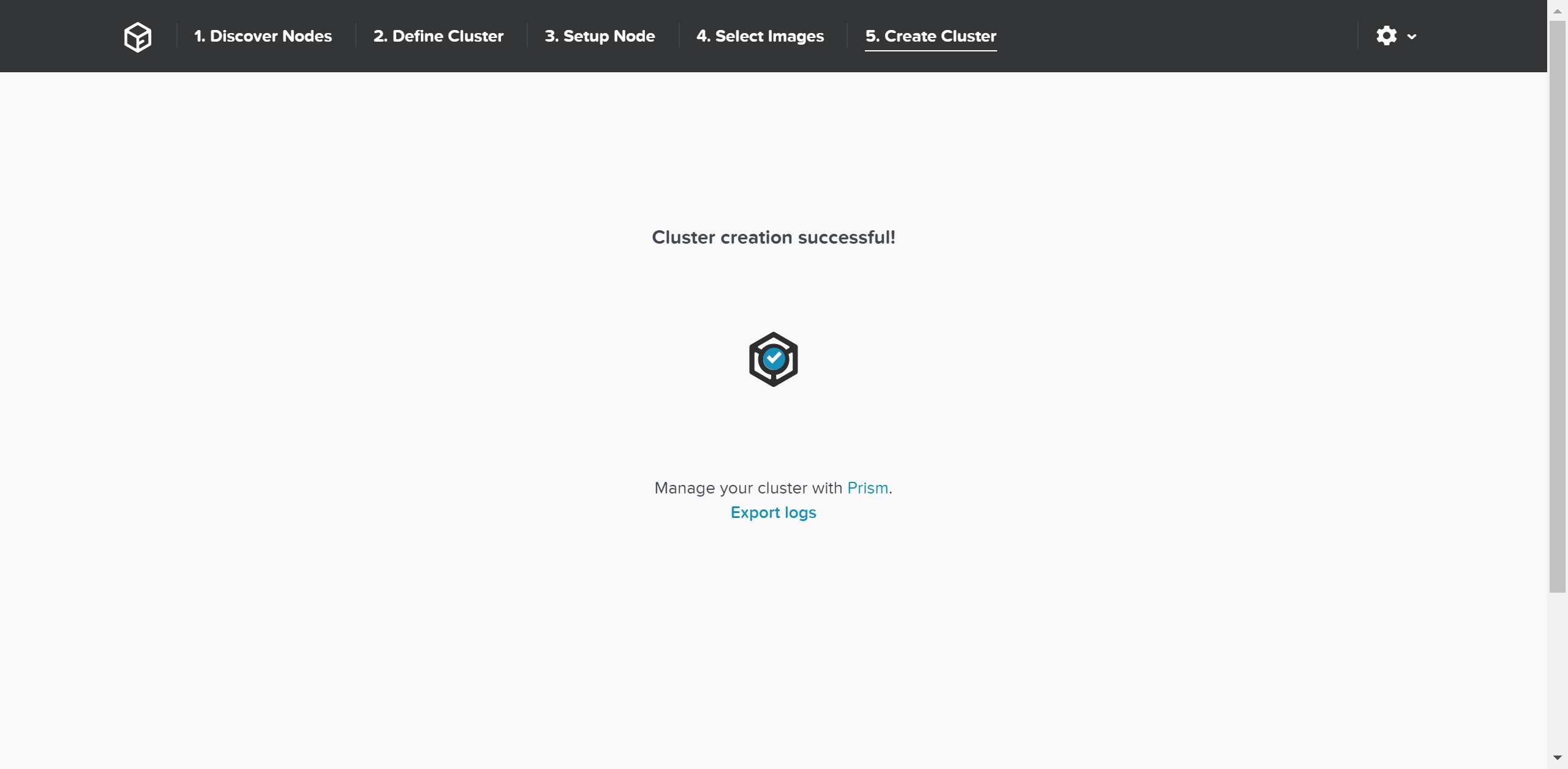

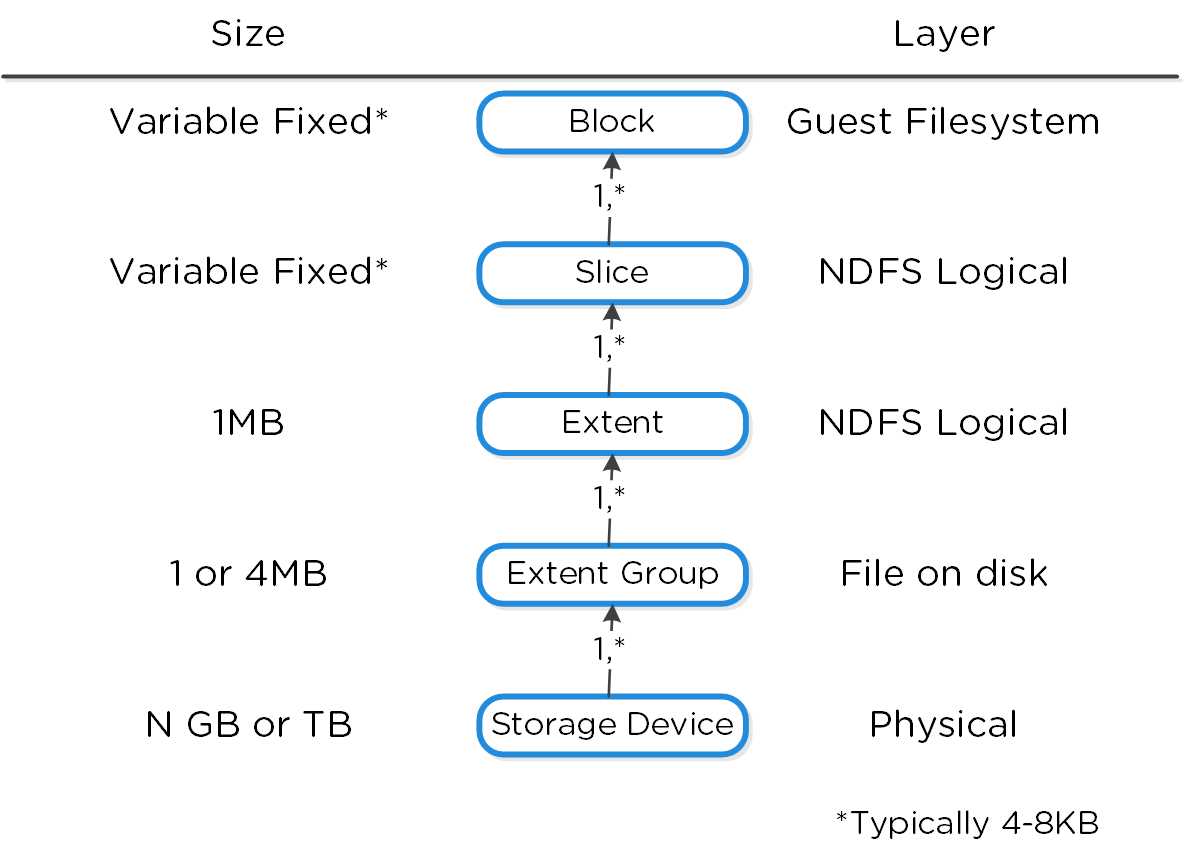

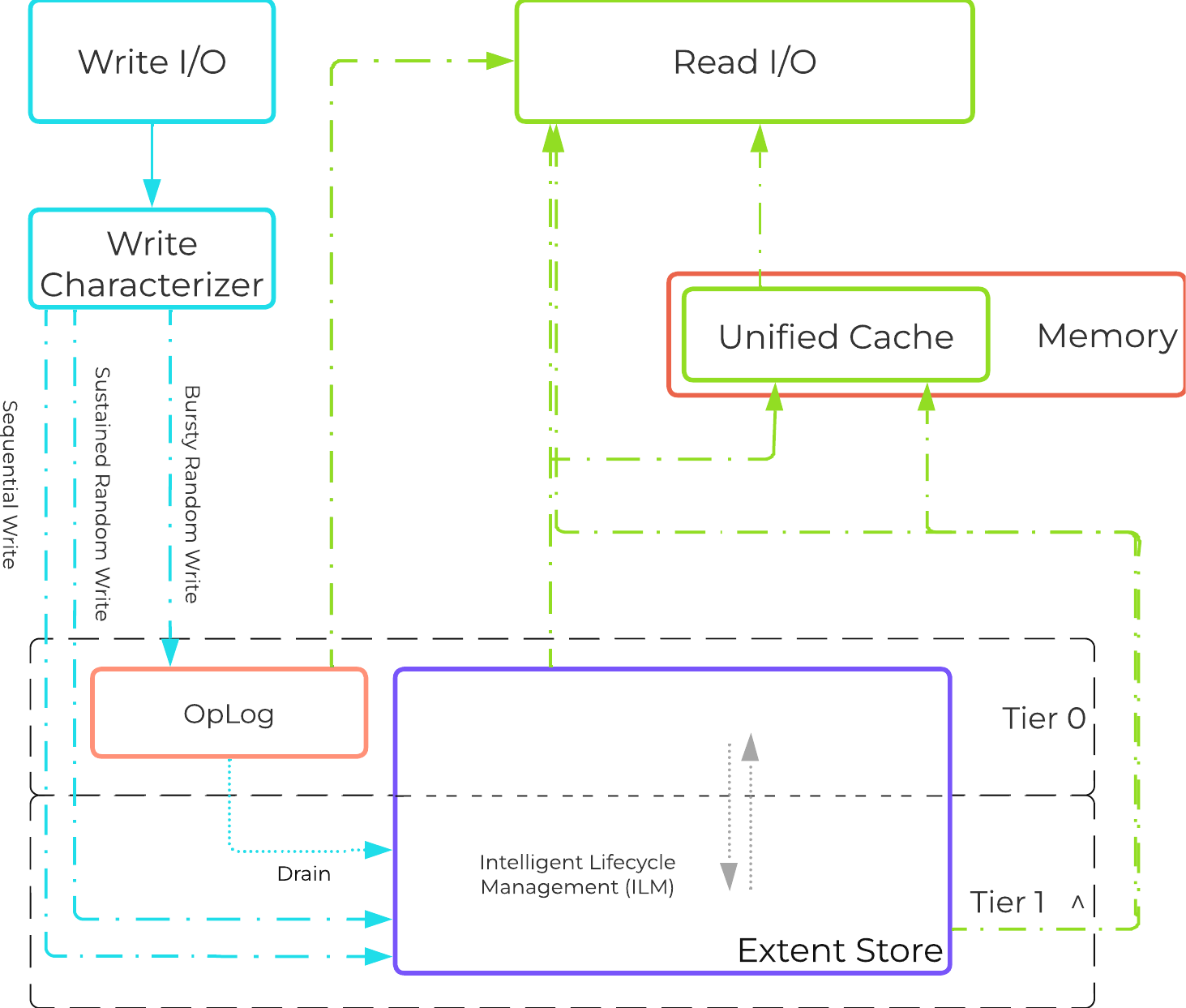

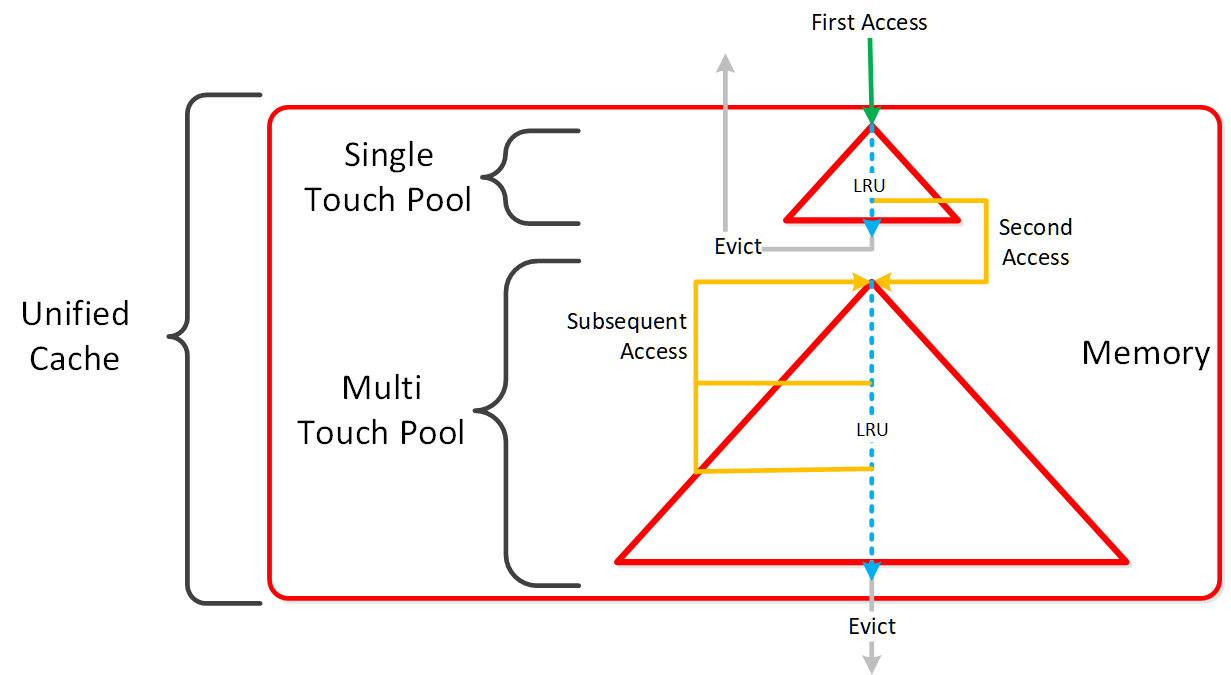

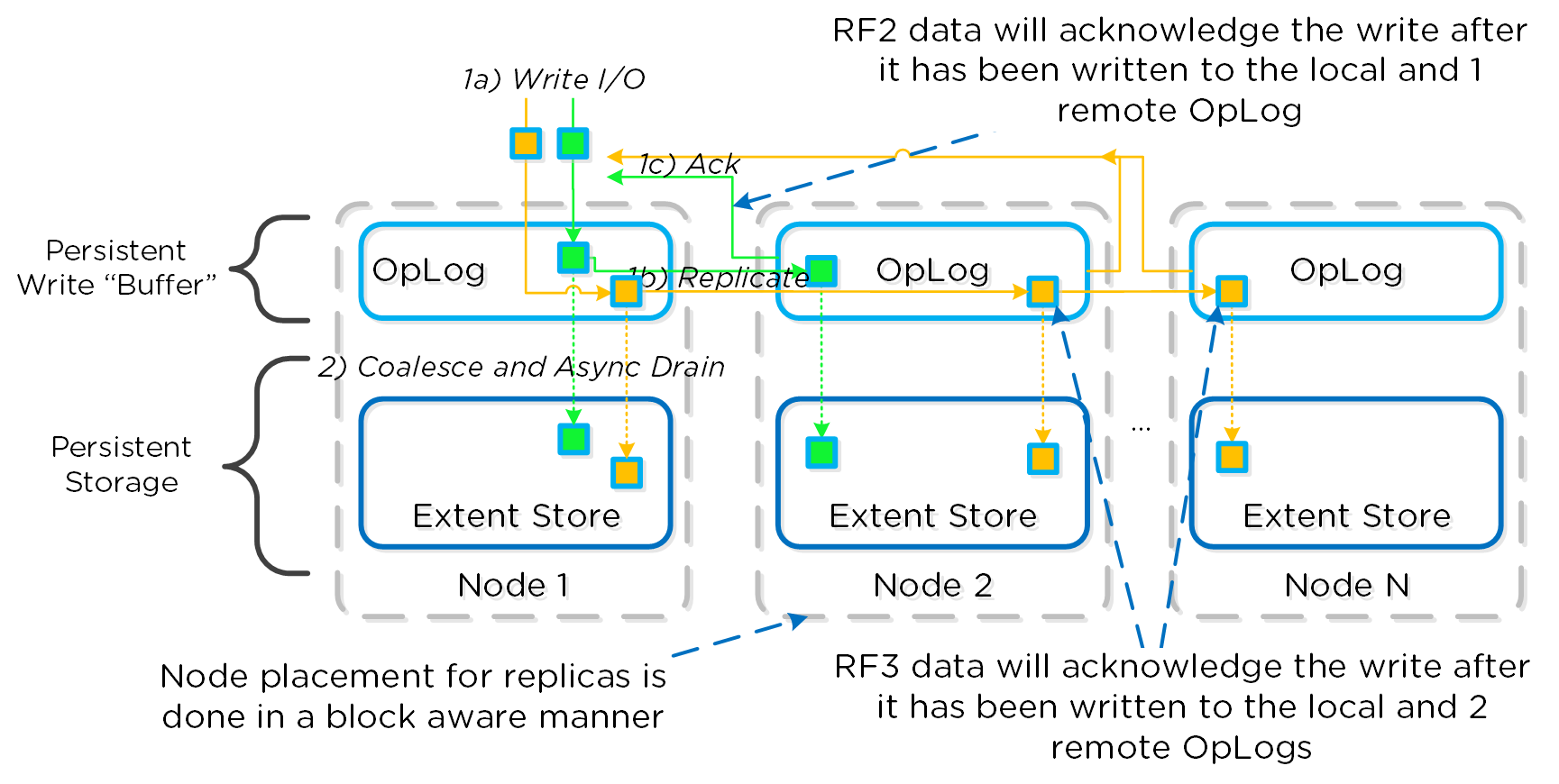

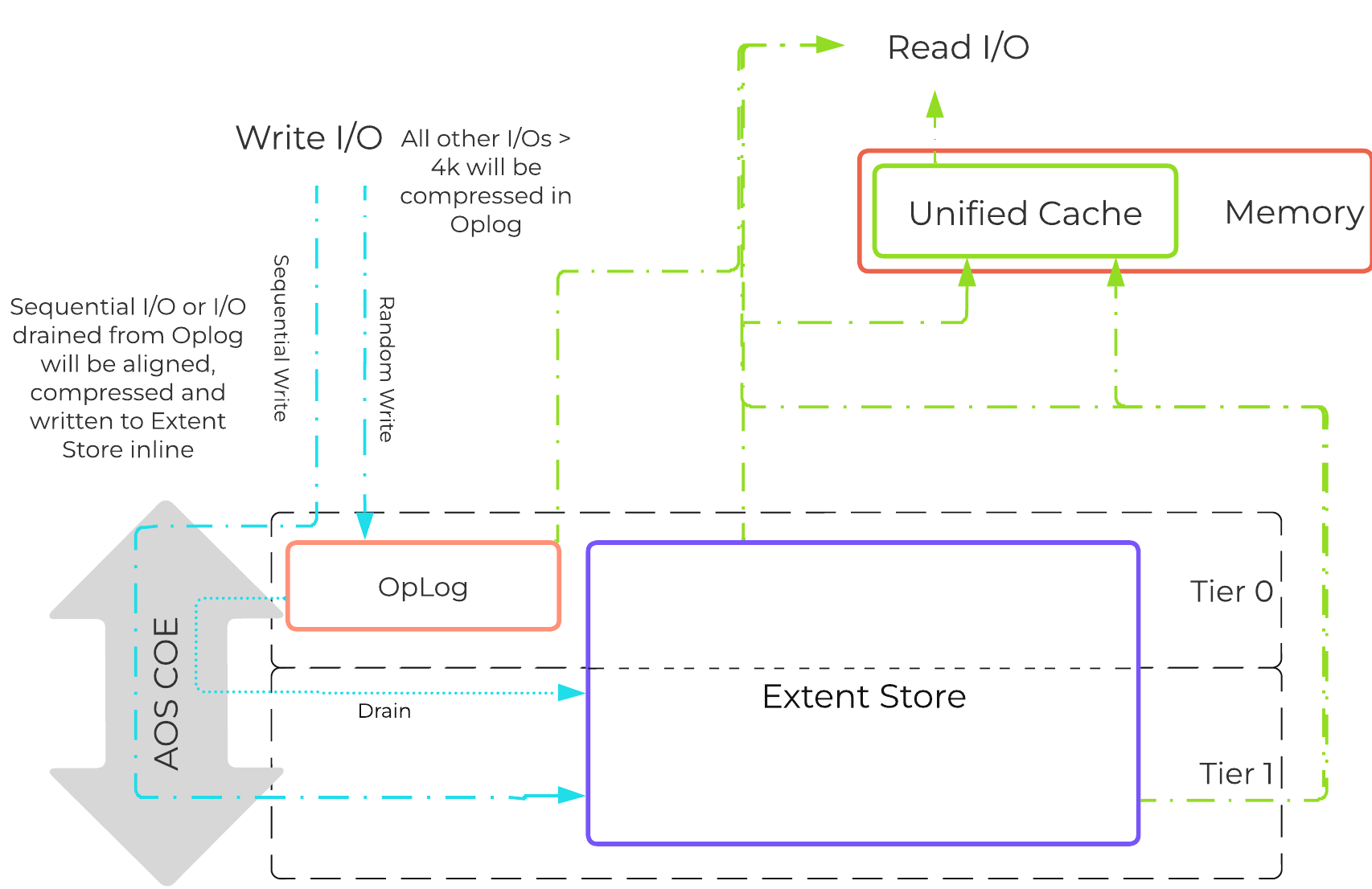

In this section, I’ll cover how the various storage devices (Performance (NVMe/SSD) / Capacity (SSD/HDD)) are broken down, partitioned, and utilized by the Nutanix platform. NOTE: All of the capacities used are in Base2 Gibibyte (GiB) instead of the Base10 Gigabyte (GB). Formatting of the drives with a filesystem and associated overheads has also been taken into account.

Performance Disk Devices

Performance devices are the highest performance devices in a node. These can be NVMe or a mix of NVMe and SSD devices. They store a few key items, as explained below:

- Nutanix Home (CVM core)

- Metadata (Cassandra / AES storage)

- OpLog (persistent write buffer)

- Extent Store (persistent storage)

The following figure shows an example of the storage breakdown for a Nutanix node’s performance device:

Performance Drive Breakdown

Graphics and proportions aren’t drawn to scale. When evaluating the Remaining GiB capacities, do so from the top down. For example, the Remaining GiB to be used for the OpLog calculation would be after Nutanix Home and Cassandra have been subtracted from the formatted SSD capacity.

Nutanix Home is mirrored across the first two SSDs to ensure availability and has a 60GiB reservation for two devices.

As of 5.0 Cassandra is sharded across multiple SSDs in the node (currently up to 4) with an initial reservation of 15GiB per SSD (can leverage some Stargate SSD if metadata usage increases). In dual SSD systems, metadata will be mirrored between the SSDs. The metadata reservation per SSD is 15 GiB (30GiB for dual SSD, 60GiB for 4+ SSD).

Prior to 5.0, Cassandra was on the first SSD by default; if that SSD fails, the CVM will be restarted and Cassandra storage will then be on the second SSD. In this case the metadata reservation per SSD is 30 GiB for the first two devices.

The OpLog is distributed among all SSD devices up to a max of 12 per node (Gflag: max_ssds_for_oplog). If NVMe devices are available, OpLog will be placed on those devices instead of SATA SSD.

The OpLog reservation per disk can be calculated using the following formula: MIN(((Max cluster RF / 2) x 400 GiB) / numDevForOplog, ((Max cluster RF / 2) x 25%) x Remaining GiB). NOTE: The sizing for OpLog is done dynamically as of release 4.0.1, which allows the extent store portion to grow dynamically. The values used assume a completely utilized OpLog.

For example, in a RF2 (FT1) cluster with 8 SSD devices that are 1TB the result would be:

- MIN(((2 / 2) x 400 GiB) / 8, ((2 / 2) x 25%) x ~900 GiB) == MIN(50, 225) == 50 GiB reserved for OpLog per device.

For a RF3 (FT2) cluster this would be:

- MIN(((3 / 2) x 400 GiB) / 8, ((3 / 2) x 25%) x ~900 GiB) == MIN(75, 337) == 75 GiB reserved for OpLog per device.

For a RF2 (FT1) cluster with 4 NVMe and 8 SSD devices that are 1TB the result would be:

- MIN(((2 / 2) x 400 GiB) / 4, ((2 / 2) x 25%) x ~900 GiB) == MIN(100, 225) == 100 GiB reserved for OpLog per device.
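The OpLog formula and the three worked examples above can be reproduced with a short Python sketch. The function name and parameter names are illustrative only. The cap of 12 devices mirrors the max_ssds_for_oplog value mentioned earlier, and applying it inside the function is an assumption made for convenience.

```python
MAX_OPLOG_DEVICES = 12  # from the text: "up to a max of 12 per node"


def oplog_reservation_gib(max_cluster_rf: int,
                          num_oplog_devices: int,
                          remaining_gib: float) -> float:
    """Per-device OpLog reservation in GiB, assuming a fully utilized OpLog.

    remaining_gib is the capacity left on a device after Nutanix Home and
    Cassandra have been subtracted from the formatted capacity.
    """
    devices = min(num_oplog_devices, MAX_OPLOG_DEVICES)
    rf_factor = max_cluster_rf / 2
    # First term: the RF-scaled 400 GiB budget spread across the devices.
    spread_budget = (rf_factor * 400) / devices
    # Second term: the RF-scaled 25% of what remains on the device.
    fraction_of_remaining = rf_factor * 0.25 * remaining_gib
    return min(spread_budget, fraction_of_remaining)


if __name__ == "__main__":
    print(oplog_reservation_gib(2, 8, 900))  # RF2, 8 SSDs
    print(oplog_reservation_gib(3, 8, 900))  # RF3, 8 SSDs
    print(oplog_reservation_gib(2, 4, 900))  # RF2, OpLog on the 4 NVMe devices
```

In the mixed NVMe and SSD case only the NVMe devices are counted, since the text states that OpLog is placed on NVMe when it is available.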

The Extent Store capacity would be the remaining capacity after all other reservations are accounted for.

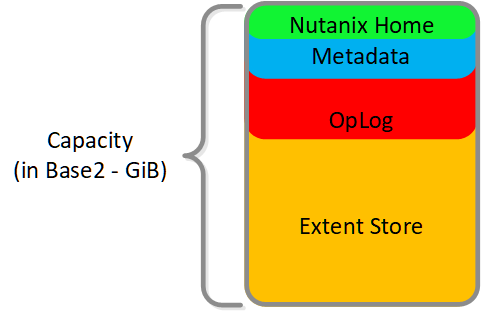

HDD Devices

Since HDD devices are primarily used for bulk storage, their breakdown is much simpler:

- Curator Reservation (Curator storage)

- Extent Store (persistent storage)

HDD Drive Breakdown

Compute

AHVLearn about the native Nutanix hypervisor, including the architecture, I/O path, and administration.

Node Architecture

In AHV deployments, the Controller VM (CVM) runs as a VM and disks are presented using PCI passthrough. This allows the full PCI controller (and attached devices) to be passed through directly to the CVM and bypass the hypervisor. AHV is based upon CentOS KVM. Full hardware virtualization is used for guest VMs (HVM).

AHV Node

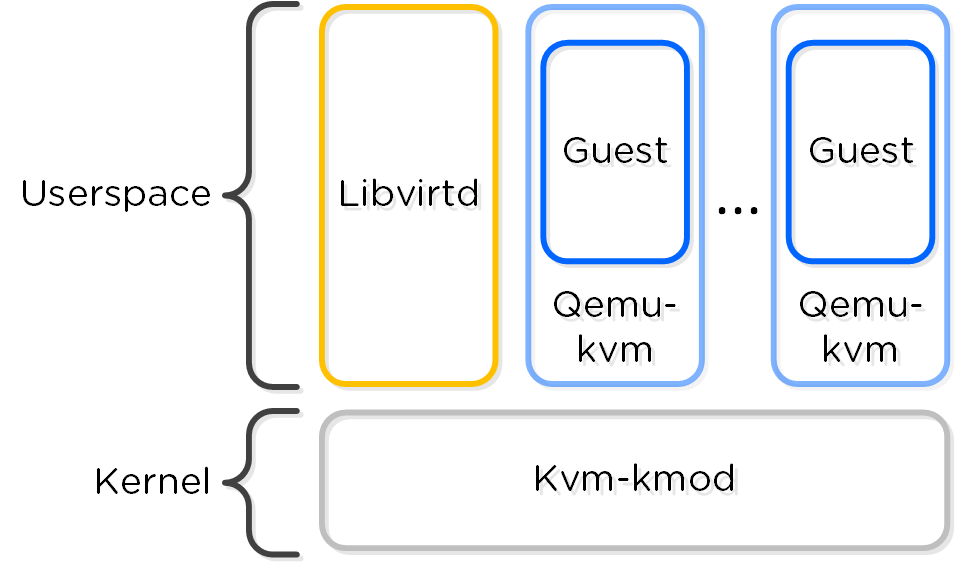

KVM Architecture

Within KVM there are a few main components:

- KVM-kmod

  - KVM kernel module

- Libvirtd

  - An API, daemon and management tool for managing KVM and QEMU. Communication between AOS and KVM / QEMU occurs through libvirtd.

- Qemu-kvm

  - A machine emulator and virtualizer that runs in userspace for every Virtual Machine (domain). In AHV it is used for hardware-assisted virtualization and VMs run as HVMs.

The following figure shows the relationship between the various components:

KVM Component Relationship

Communication between AOS and KVM occurs via Libvirt.

Note

Processor generation compatibility

Similar to VMware's Enhanced vMotion Capability (EVC), which allows VMs to move between different processor generations, AHV will determine the lowest processor generation in the cluster and constrain all QEMU domains to that level. This allows mixing of processor generations within an AHV cluster and ensures the ability to live migrate between hosts.

Configuration Maximums and Scalability

The following configuration maximums and scalability limits are applicable:

- Maximum cluster size: 32

- Maximum vCPUs per VM: Check Configuration Maximums page for relevant AHV version

- Maximum memory per VM: 4.5TB or available physical node memory

- Maximum virtual disk size: 64TB*

- Maximum VMs per host: Check Configuration Maximums page for relevant AHV version

- Maximum VMs per cluster: Check Configuration Maximums page for relevant AHV version

*The 64TB max vdisk size is the size that has been internally tested by Nutanix Engineering.

The above are true as of AHV 20230302.100173 and AOS 6.8. Refer to Configuration Maximums for other versions.

Compute

The following sections outline key capabilities of Nutanix AHV compute for workload management.

VM Templates

AHV has always had the image library, which focused on capturing the data within a single vDisk so that it could be easily cloned, but input from the admin was needed to complete the process of declaring the CPU, memory and network details. VM Templates take this concept to the next level of simplicity and provide a familiar construct for admins that have utilized templates on other hypervisors.

AHV VM Templates are created from existing virtual machines, inheriting the attributes of the defining VM such as the CPU, memory, vdisks, and networking details. The template can then be configured to customize the guest OS upon deployment and can optionally provide a Windows license key. Templates allow for multiple versions to be maintained, allowing for easy updates such as operating system and application patches to be applied without the need to create a new template. Admins can choose which version of the template is active, allowing the updates to be staged ahead of time or the ability to switch back to a previous version if needed.

Memory Overcommit

One of the central benefits of virtualization is the ability to overcommit compute resources, making it possible to provision more CPUs to VMs than are physically present on the server host. Most workloads don’t need all of their assigned CPUs 100% of the time, and the hypervisor can dynamically allocate CPU cycles to workloads that need them at each point in time.

Much like CPU or network resources, memory can also be overcommitted. At any given time, the VMs on the host may or may not use all their allocated memory, and the hypervisor can share that unused memory with other workloads. Memory overcommit makes it possible for administrators to provision a greater number of VMs per host by combining the unused memory and allocating it to VMs that need it.

AOS 6.1 brings memory overcommit to AHV as an option to allow administrators flexibility in environments such as test and development where additional memory and VM density are required. Overcommit is disabled by default and can be defined on a per-VM basis allowing sharing to be done on all or just a subset of the VMs on a cluster.

VM Affinity Policies

Different types of applications can have requirements that dictate whether the VMs should run on the same host or a different host. This is typically done for performance or availability benefits. Affinity controls enable you to govern where VMs run. AHV has two types of affinity controls:

- VM-host affinity

  - Strictly ties a VM to a host or group of hosts, so the VM only runs on that host or group. Affinity is particularly applicable for use cases that involve software licensing or VM appliances. In such cases, you often need to limit the number of hosts an application can run on or bind a VM appliance to a single host.

- Anti-affinity

  - AHV lets you declare that a given list of VMs shouldn’t run on the same hosts. Anti-affinity gives you a mechanism for allowing clustered VMs or VMs running a distributed application to run on different hosts, increasing the application’s availability and resiliency. To prefer VM availability over VM separation, the system overrides this type of rule when a cluster becomes constrained.

Virtual Trusted Platform Module (vTPM)

TPM technologies are designed to provide enhanced security and privacy in handling encryption operations. The purpose of the TPM is to ensure information storage is better protected from unauthorized access. The primary use case is storing secrets, making it difficult to access them without proper authorization.

The Trusted Computing Group outlines the TPM as a dedicated hardware chip that is soldered onto the motherboard in the computer, which works great in a bare metal deployment. In a virtualized environment using a hypervisor such as AHV or ESXi, the physical TPM chip approach does not scale to support multiple guest OSes running on a single hardware configuration due to the following limitations:

- Key Storage – The storage available in a physical TPM chip can hold roughly three transient keys.

- TPM Isolation – The TPM chip does not provide any separation or isolation between multiple guest OS running on the same physical device.

To address the scaling issues for a virtualized environment, the hypervisor vendors have implemented a hypervisor-level software called virtual TPM (vTPM), which conforms to the Trusted Computing Group’s TPM specification. vTPM emulates these TPM specifications in the same functional manner as a physical TPM chip creating a private TPM instance per VM guest within the hypervisor. vTPM allows each VM guest to have its own key storage, isolating it from the other guests running on the same physical server. To maintain this isolation, the vTPM does not use the hardware physical TPM chip on the server.

Each hypervisor vendor is responsible for protecting the vTPM instance from outside access. Nutanix AHV, for example, ensures isolation between VMs and encrypts vTPM data using a secure distributed secrets service called Mantle, preventing unauthorized access or tampering.

Live Migrations

Live migration allows the system to move VMs from one host to another while the VM is turned on without workload interruption, regardless of whether the administrator or an automatic process initiates the movement. Live migrations occur regularly in the cluster nodes, triggered by maintenance operations, ADS workload balancing, node expansion, or administrator-driven requests.

You can also use live migration to migrate VMs to another physical cluster in the same location or in a different location to rebalance workloads, run maintenance operations, or avoid planned interruptions. For cross-cluster live migrations, we recommend a network with 5ms of latency between clusters and support a maximum of 40ms.

There are several stages to a VM live migration:

1. Create a VM placeholder on the destination host

2. Iteratively copy the source VM’s memory to the destination VM placeholder

3. Pause the VM on the source host

4. Copy the final VM state from the source VM to the destination VM placeholder

5. Update the network switches to ensure continued connectivity

6. Resume the VM on the destination host

7. Delete the paused VM from the source

Step 2, the copying of the VM’s memory, can occur over and up to a specific number of iterations (50 at the time of writing). As the VM is still running, AHV will keep track of the VM’s memory which is actively being modified during the copy process in each iteration. Once an iteration is complete, AHV will analyze the amount of memory that has changed and still needs to be copied over to the destination, as well as the achieved rate of memory transfer over the network, to determine if another iteration is required or if the migration can proceed to the next step. The rate at which a VM is modifying memory is more important to a successful migration than the amount of memory in a VM, so AHV will proactively manage the speed of the VM to reduce the amount of memory needing to be sent in the next iteration.

Step 3, pausing of the VM on the source host, will only occur when AHV detects it can transfer the remaining memory in 300ms or less, ensuring that the VM will respond on the destination host after a very short period. This is the maximum stun window. If the remaining memory cannot be transferred within this window after the final iteration, then the migration can abort with a ‘failure to converge’ error. The migration may be automatically re-tried by ADS, host evacuation, or by the administrator manually triggering another migration.
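To make the convergence behavior of steps 2 and 3 concrete, the following Python sketch models the iterative memory copy with a deliberately simplified assumption: the VM dirties memory at a constant rate and the network delivers a constant bandwidth. It is not AHV's actual algorithm (for example, it does not model AHV slowing the VM down), and the function and parameter names are hypothetical. Only the 50-iteration limit and the 300ms stun window come from the text.

```python
def simulate_precopy(memory_mib: float,
                     dirty_rate_mib_s: float,
                     bandwidth_mib_s: float,
                     max_iterations: int = 50,
                     stun_window_s: float = 0.3) -> tuple[bool, int]:
    """Return (converged, iterations_used) for a simplified pre-copy model.

    Each iteration sends the memory that is currently outstanding. While it
    is being sent, the running VM dirties more memory, which becomes the
    amount to send in the next iteration.
    """
    outstanding = float(memory_mib)
    for iteration in range(1, max_iterations + 1):
        copy_time_s = outstanding / bandwidth_mib_s
        # Memory dirtied during this copy, never more than the VM's memory.
        outstanding = min(memory_mib, dirty_rate_mib_s * copy_time_s)
        # Pause the VM only if the rest fits inside the stun window.
        if outstanding / bandwidth_mib_s <= stun_window_s:
            return True, iteration
    # Corresponds to the "failure to converge" case in the text.
    return False, max_iterations


if __name__ == "__main__":
    # 8 GiB VM dirtying 100 MiB/s over a link moving 1000 MiB/s.
    print(simulate_precopy(8192, 100, 1000))
    # Dirty rate above the link rate: the copy can never catch up.
    print(simulate_precopy(8192, 1200, 1000))
```

Even in this toy model the point made above is visible: the ratio of dirty rate to transfer rate decides whether the migration converges, while the VM's memory size mainly affects how long the first iteration takes.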

Step 5, updating the network switches, is enacted by sending a RARP broadcast network packet to all devices on the subnet while the VM is being brought up. This ensures that external network switches are aware of where the VM is now running and can route packets appropriately, for example ensuring that TCP connections are not lost during the migration.

Generation ID

There are a range of applications that use the Generation ID to access a virtual machine identifier to validate if the VM was cloned or duplicated for licensing or functionality verification. Starting in AOS release 6.7, AHV creates a Generation ID for each VM that is created, which applications running inside of that VM have access to. Applications can then make decisions on how they want to behave based on whether the correct Generation ID is present. One notable example where this is important is in the case of Windows domain controllers, which can experience problems if accidentally cloned or rolled back without proper safeguards. Generation ID is a mechanism that provides this information to a VM, allowing these restrictions to be tested and enforced by the app.

Advanced Processor Compatibility

Advanced Processor Compatibility (APC) streamlines upgrades and helps the transition of clusters to the latest generation CPUs. Clusters are no longer limited by the lowest common denominator CPU. VMs can now accurately identify and utilize the CPU features available to them, improving migrations.

APC offers flexibility in a few different ways. First, clusters can be leveled for consistent CPU presentation to guest VMs, which is the default behavior. Also a baseline CPU model can be set on a per-VM basis for on-demand cross-cluster live migration to different generations of hardware.

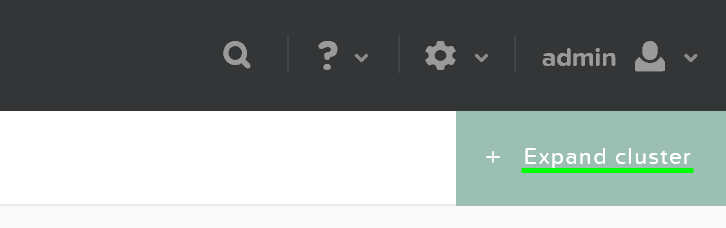

Automatic Cluster Selection

Automatic Cluster Selection makes it easy for Nutanix administrators to deliver a cloud-like experience with minimum management overhead. Many organizations have general-purpose environments with multiple clusters managed by a single Prism Central instance, but the utilization of the different clusters previously had to be manually managed by an administrator.

Automatic Cluster Selection intelligently determines the optimal cluster for VM deployment based on resource availability, host affinities, project specifications, and image distribution policies when a VM is created. This capability ensures that resources are automatically balanced across the clusters as new VMs are deployed. Automatic Cluster Selection also saves valuable time and helps evenly distribute workloads for maximum performance and utilization across the infrastructure.

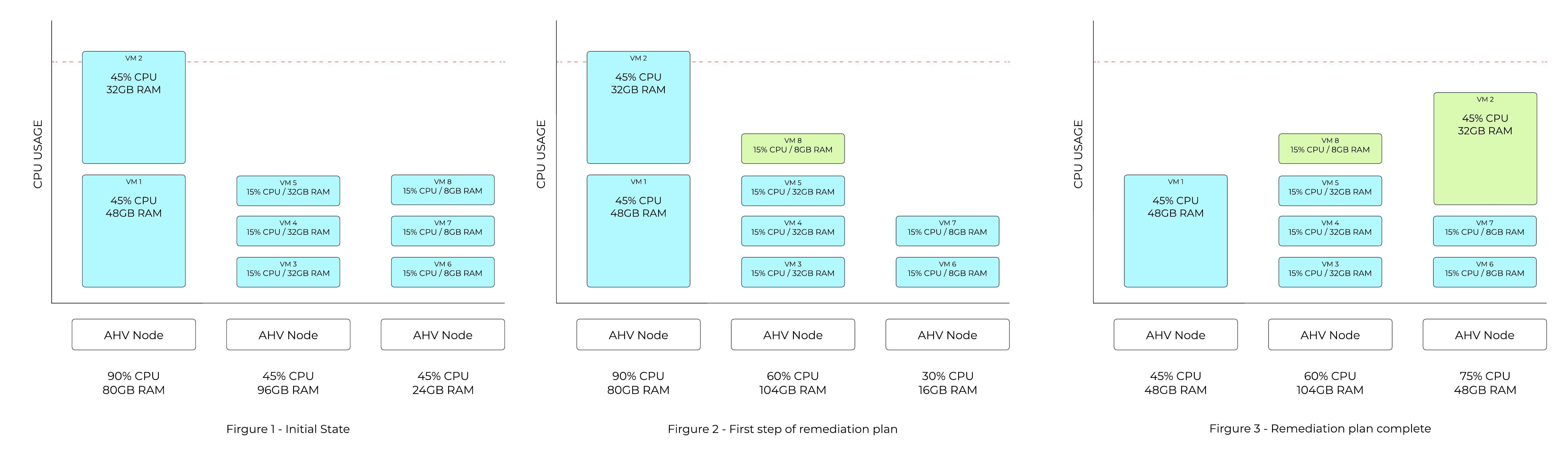

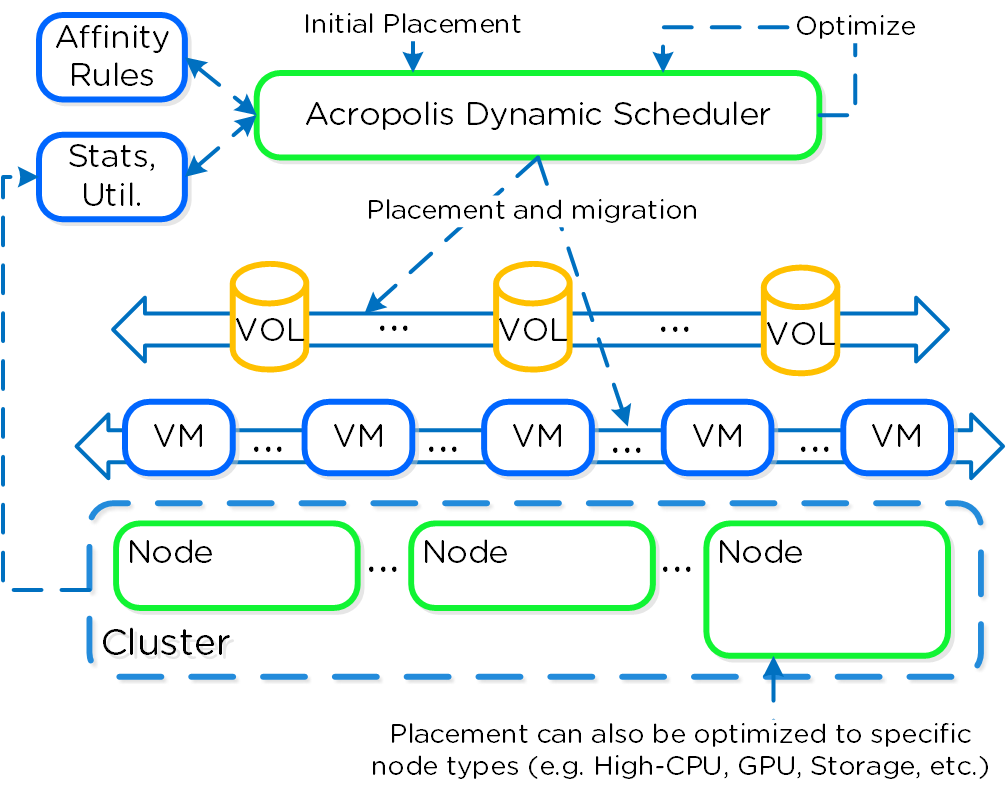

Acropolis Dynamic Scheduler (ADS)

ADS, the Acropolis Dynamic Scheduler, is a key component in the AHV stack. ADS is responsible for VM migrations and placements during many operations including resolving host hotspots (hosts with high CPU and storage usage), maintaining High Availability guarantees, optimizing VM placement to free up resources, and enforcing administrator-defined policies. ADS is always running in the background, continuously monitoring and optimizing the infrastructure.

Key features of ADS include:

- Initial VM placement - Choosing the AHV host for a VM and defragmenting the cluster if needed to ensure sufficient resources are available for the new VM

- Dynamic hotspot mitigation - Monitoring each host and, when a hotspot is detected, resolving that hotspot

- Background policy enforcement - for example moving VMs around to respect VM:Host affinity policies and VM:VM anti-affinity policies

- Enforcing High Availability guarantees - When HA guarantees are enabled, ADS will move VMs in the running system to ensure all VMs on each host can be recovered following the failure of an individual host.

- Dynamic GPU management - Supports NVIDIA GPUs to provide specific vGPU profiles based on the VMs running in the system

- Creating remediation plans based on the cost of movement to address each of the above cases

As ADS focuses on hotspot mitigation and has cost-minimizing remediation plans, this results in fewer VM movements than would be required for an active load-balancing scenario.

Hotspot Mitigation Example - Simple Plan

Figure 1 in the following example shows a host utilizing 90% of available CPU capacity. The CPU hotspot threshold is 85%, so ADS identifies that VM movements are required.

In Figure 2, ADS computes a plan that will move one of the 8GB VMs from the third host to the second host, making sufficient space for one of the VMs on the first host to move over.

The amount of memory on each of the VMs on the first host is taken into account when deciding which VM can be moved more easily. The final state in Figure 3 shows the smaller of the two VMs on the first host moving over to the third host as that is the lowest cost move.

ADS Mitigation Example

ADS plans are usually more complex than this, as multiple dimensions are considered both for hotspot detection and for cost-minimization of the remediation plans.
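The two decisions in the simple plan above (is a host a hotspot, and which VM is cheapest to move) can be sketched in Python. This is an illustration only and not how ADS is implemented: it uses the 85% CPU threshold from the example and treats VM memory size as the sole movement cost, whereas real plans weigh multiple dimensions. All names here are hypothetical.

```python
from dataclasses import dataclass

CPU_HOTSPOT_THRESHOLD = 0.85  # threshold used in the example above


@dataclass
class VM:
    name: str
    memory_gib: int


def find_hotspots(cpu_usage_by_host: dict[str, float]) -> list[str]:
    """Return the hosts whose CPU utilization exceeds the hotspot threshold."""
    return [host for host, usage in cpu_usage_by_host.items()
            if usage > CPU_HOTSPOT_THRESHOLD]


def cheapest_vm_to_move(vms: list[VM]) -> VM:
    """Pick the VM with the least memory, used here as a stand-in for cost."""
    return min(vms, key=lambda vm: vm.memory_gib)


if __name__ == "__main__":
    usage = {"host1": 0.90, "host2": 0.40, "host3": 0.60}
    host1_vms = [VM("vm-a", 16), VM("vm-b", 8)]
    for host in find_hotspots(usage):
        candidate = cheapest_vm_to_move(host1_vms)
        print(f"{host} is a hotspot; candidate to move: {candidate.name}")
```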

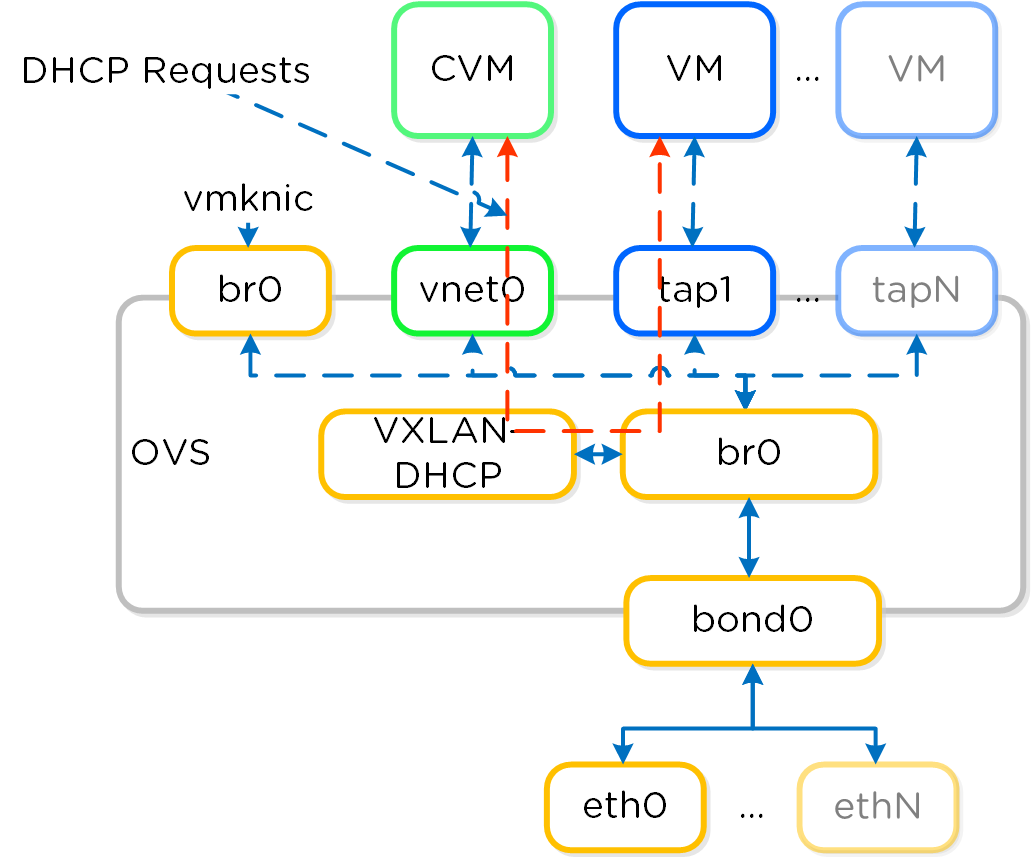

Networking

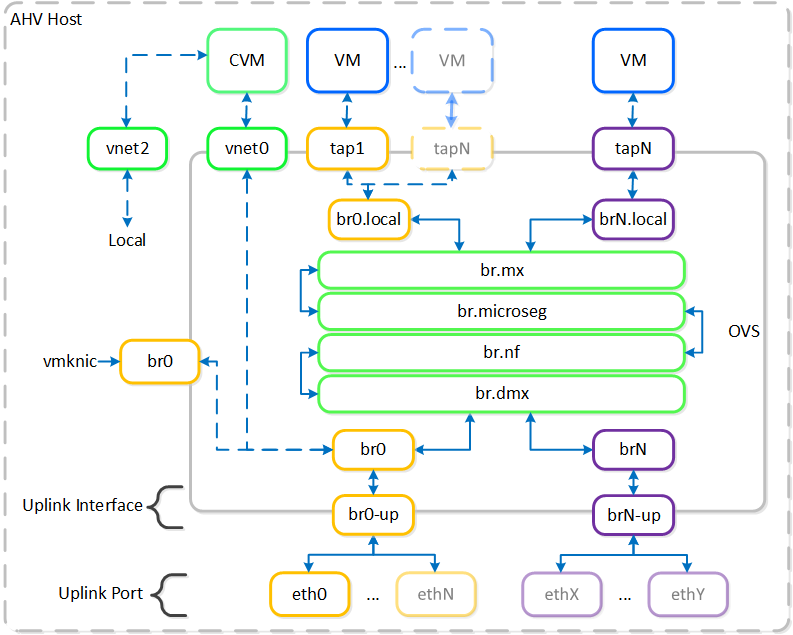

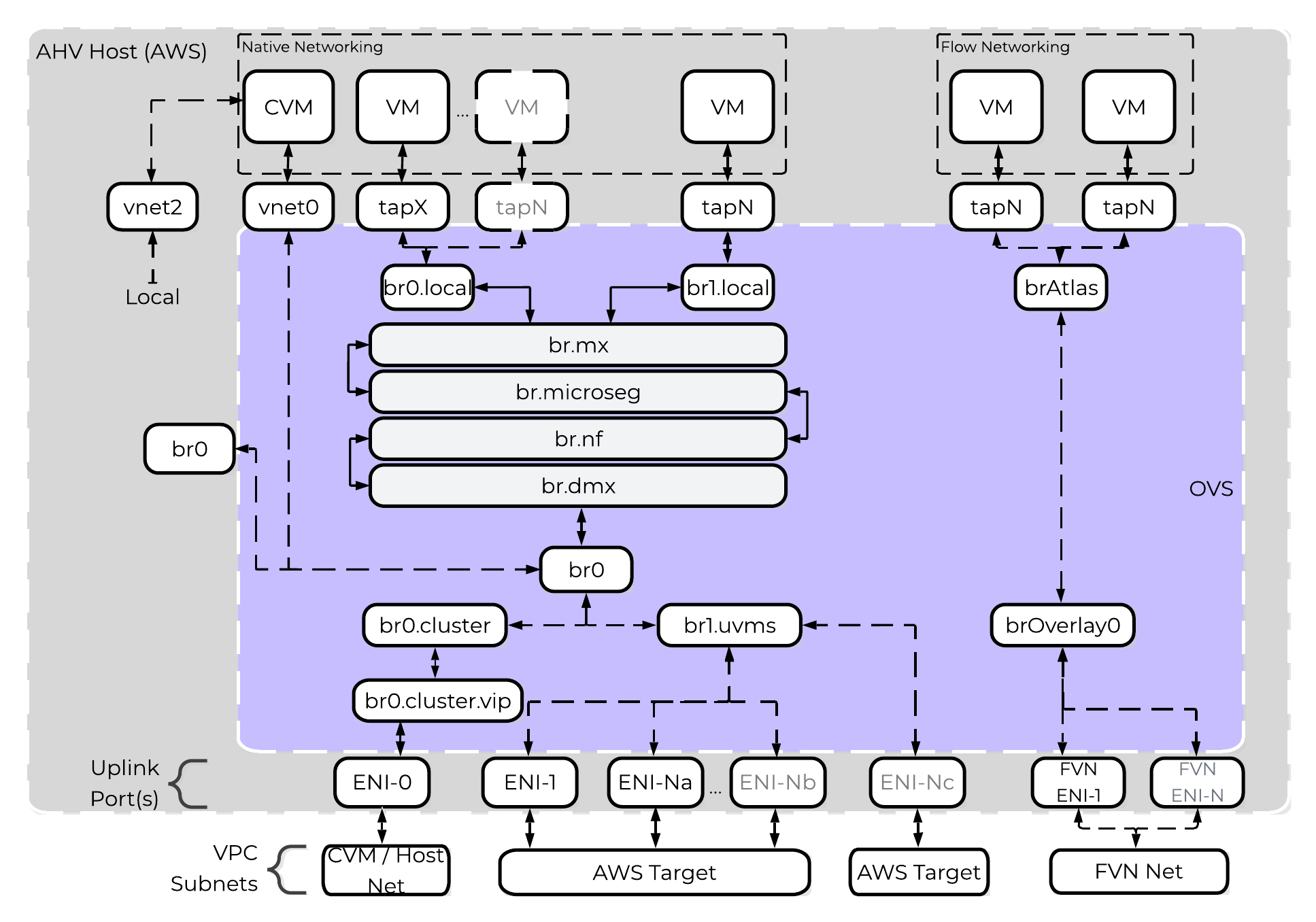

AHV leverages Open vSwitch (OVS) for all VM networking. VM networking is configured through Prism / ACLI and each VM nic is connected into a tap interface.

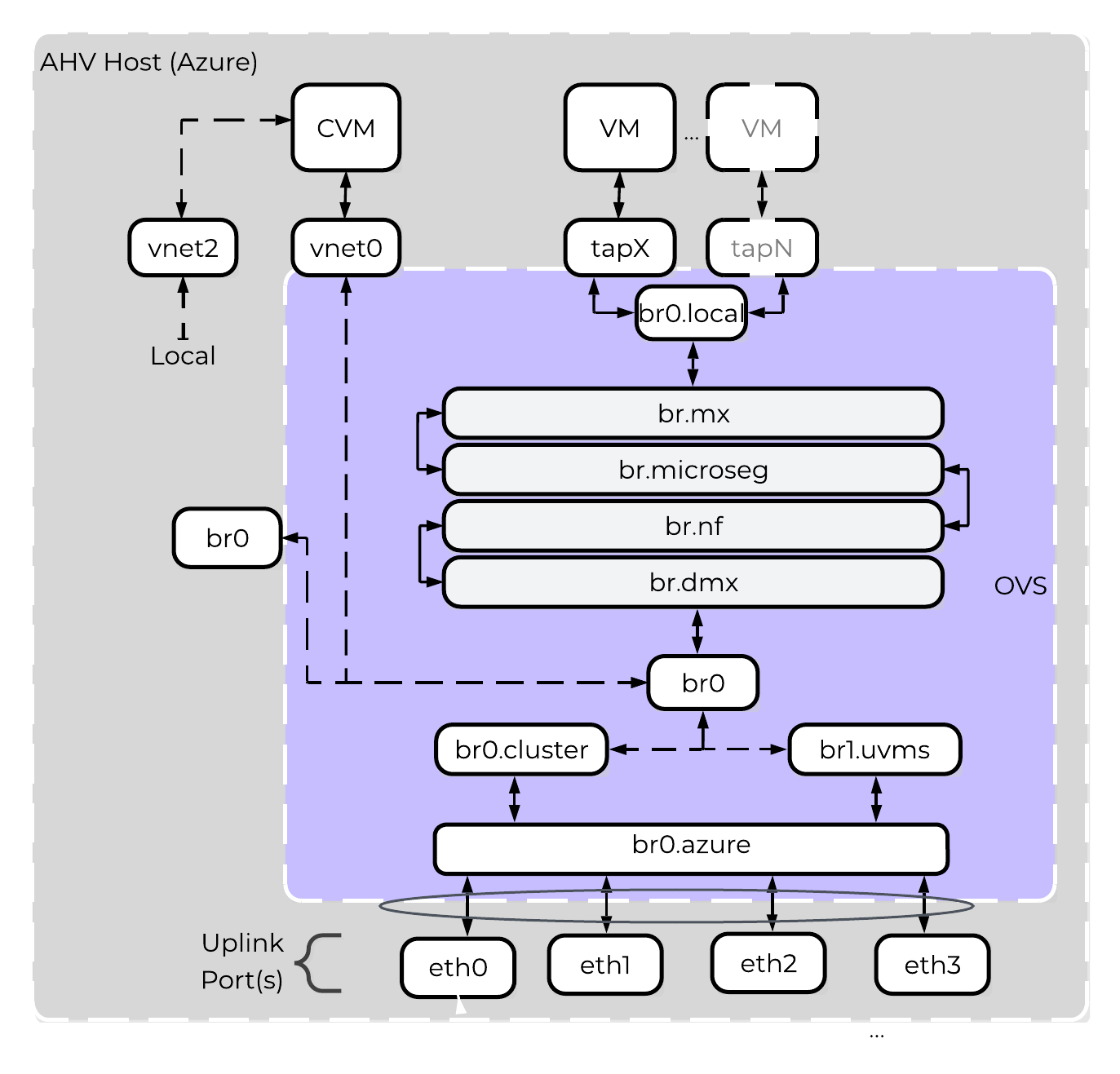

The following figure shows a conceptual diagram of the OVS architecture:

Open vSwitch Network Overview

In the prior image you see a few types of components:

Open vSwitch (OVS)

OVS is an open source software switch implemented in the Linux kernel and designed to work in a multiserver virtualization environment. By default, OVS behaves like a layer-2 learning switch that maintains a MAC address table. The hypervisor host and VMs connect to virtual ports on the switch.

OVS supports many popular switch features, including VLAN tagging, Link Aggregation Control Protocol (LACP), port mirroring, and quality of service (QoS), to name a few. Each AHV server maintains an OVS instance, and all OVS instances combine to form a single logical switch. Constructs called bridges manage the switch instances residing on the AHV hosts.

Bridge

Bridges act as virtual switches to manage network traffic between physical and virtual network interfaces. The default AHV configuration includes an OVS bridge called br0 and a native Linux bridge called virbr0. The virbr0 Linux bridge carries management and storage communication between the CVM and AHV host. All other storage, host, and VM network traffic flows through the br0 OVS bridge. The AHV host, VMs, and physical interfaces use “ports” for connectivity to the bridge.

Port

Ports are logical constructs created in a bridge that represent connectivity to the virtual switch. Nutanix uses several port types, including internal, tap, VXLAN, and bond:

- An internal port—with the same name as the default bridge (br0)—provides access for the AHV host.

- Tap ports act as bridge connections for virtual NICs presented to VMs.

- VXLAN ports are used for the IP address management functionality provided by Acropolis.

- Bonded ports provide NIC teaming for the physical interfaces of the AHV host.

Bond

Bonded ports aggregate the physical interfaces on the AHV host. By default, a bond named br0-up is created in bridge br0. After the node imaging process, all interfaces are placed within a single bond, which is a requirement for the Foundation imaging process. Changes to the default bond, br0-up, often rename this to bond0. Nutanix recommends using the name br0-up to quickly identify the interface as the bridge br0 uplink.

OVS bonds allow for several load-balancing modes, including active-backup, balance-slb and balance-tcp. LACP can also be activated for a bond. The “bond_mode” setting is not specified during installation and therefore defaults to active-backup, which is the recommended configuration.

Uplink Load Balancing

As briefly mentioned in the prior section, it is possible to balance traffic across bond uplinks.

The following bond modes are available:

- active-backup

  - Default configuration which transmits all traffic over a single active adapter. If the active adapter becomes unavailable, another adapter in the bond will become active. Limits throughput to a single nic’s bandwidth. (Recommended)

- balance-slb

  - Balances each VM’s nic across adapters in the bond (e.g. VM A nic 1 - eth0 / nic 2 - eth1). Limits VM per-nic throughput to a single nic’s bandwidth; however, a VM with x nics can leverage x * adapter bandwidth (assuming x is the same for the number of VM nics and physical uplink adapters in the bond). NOTE: has caveats for multicast traffic

- balance-tcp / LACP

  - Balances each VM nic’s TCP session across adapters in the bond. Limits per-nic throughput to the maximum bond bandwidth (number of physical uplink adapters * speed). Requires link aggregation and is used when LACP is required.
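The per-nic throughput ceilings described for each bond mode can be summarized in a small Python helper. This is a sketch of the arithmetic in the list above rather than anything OVS or AHV exposes. The function name is hypothetical, and it reports theoretical ceilings only, not achievable throughput.

```python
def max_vnic_throughput_gbps(bond_mode: str,
                             uplink_count: int,
                             uplink_speed_gbps: float) -> float:
    """Theoretical throughput ceiling for a single VM nic, per bond mode."""
    if bond_mode in ("active-backup", "balance-slb"):
        # Traffic from one VM nic is carried by a single physical adapter.
        return uplink_speed_gbps
    if bond_mode == "balance-tcp":
        # TCP sessions from one VM nic can spread over every adapter.
        return uplink_count * uplink_speed_gbps
    raise ValueError(f"unknown bond mode: {bond_mode}")


if __name__ == "__main__":
    for mode in ("active-backup", "balance-slb", "balance-tcp"):
        print(mode, max_vnic_throughput_gbps(mode, 2, 10.0))
```

With balance-slb, a VM with several nics can still exceed the single-adapter figure in aggregate, as noted above, because each nic may land on a different adapter.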

You can find additional information on bonds in the AHV networking guide.

VM NIC Types

AHV supports the following VM network interface types:

- Access (default)

- Trunk (4.6 and above)

By default VM nics will be created as Access interfaces (similar to what you’d see with a VM nic on a port group), however it is possible to expose a trunked interface up to the VM’s OS. Trunked NICs send the primary VLAN untagged, and all additional VLANs as tags to the same vNIC on the VM. This is useful to bring multiple networks to a VM without adding vNICs.

A trunked interface can be added with the following command:

vm.nic_create VM_NAME vlan_mode=kTrunked trunked_networks=ALLOWED_VLANS network=NATIVE_VLAN

Example:

vm.nic_create fooVM vlan_mode=kTrunked trunked_networks=10,20,30 network=vlan.10
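When scripting this for many VMs, the command string can be assembled programmatically. The Python helper below only formats the command using the syntax shown above; it does not run anything, and the function name `trunk_nic_command` is hypothetical.

```python
from typing import Iterable


def trunk_nic_command(vm_name: str,
                      native_network: str,
                      trunked_vlans: Iterable[int]) -> str:
    """Build the vm.nic_create command line for a trunked VM nic."""
    vlan_list = ",".join(str(vlan) for vlan in trunked_vlans)
    return (f"vm.nic_create {vm_name} vlan_mode=kTrunked "
            f"trunked_networks={vlan_list} network={native_network}")


if __name__ == "__main__":
    # Reproduces the example above.
    print(trunk_nic_command("fooVM", "vlan.10", [10, 20, 30]))
```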

Service Chaining

AHV service chaining allows us to intercept all traffic and forward it to a packet processor (NFV, appliance, virtual appliance, etc.) that functions transparently as part of the network path.

Common uses for service chaining:

- Firewall (e.g. Palo Alto, etc.)

- IDS/IPS/network monitors (e.g. packet capture)

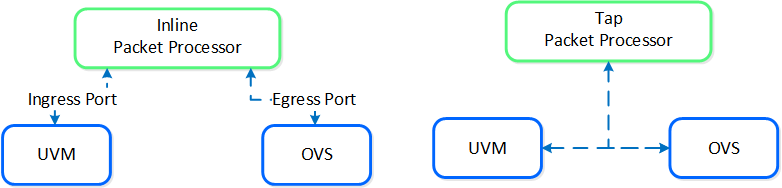

Within service chaining there are two types of packet processors:

Service chain - Packet Processors

- Inline packet processor

  - Intercepts packets inline as they flow through OVS

  - Can modify and allow/deny packet

  - Common use: firewalls

- Tap packet processor

  - Inspects packets as they flow, can only read as it’s a tap into the packet flow

  - Common uses: IDS/IPS/network monitor

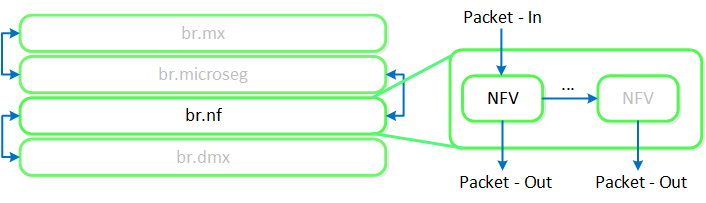

Any service chaining is done after the Flow - Microsegmentation rules are applied and before the packet leaves the local OVS. This occurs in the network function bridge (br.nf):

Service Chain - Flow

NOTE: it is possible to string together multiple NFV / packet processors in a single chain. Service chaining is only applicable when Acropolis controls the network stack. Service chaining is not currently supported for network controller-based VLANs or VPCs.

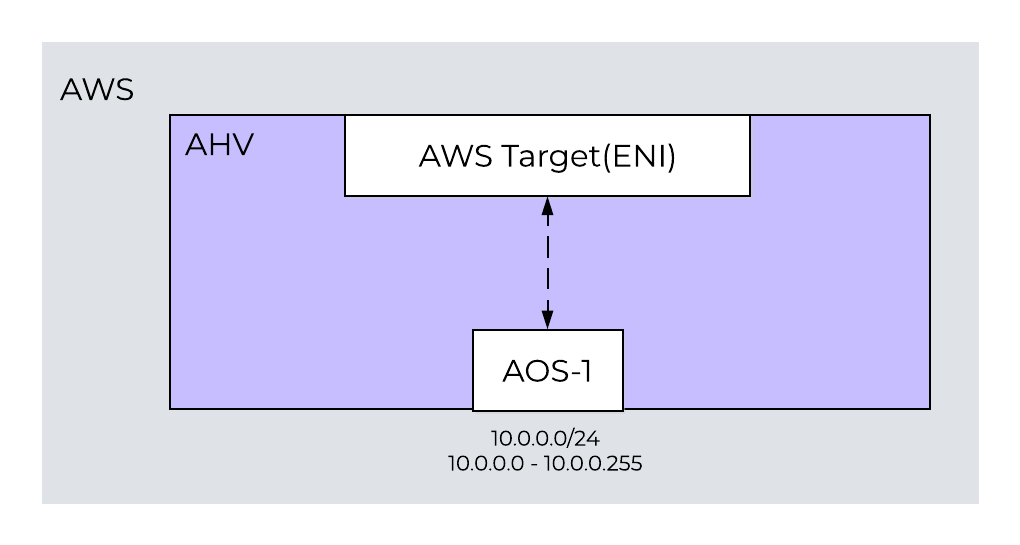

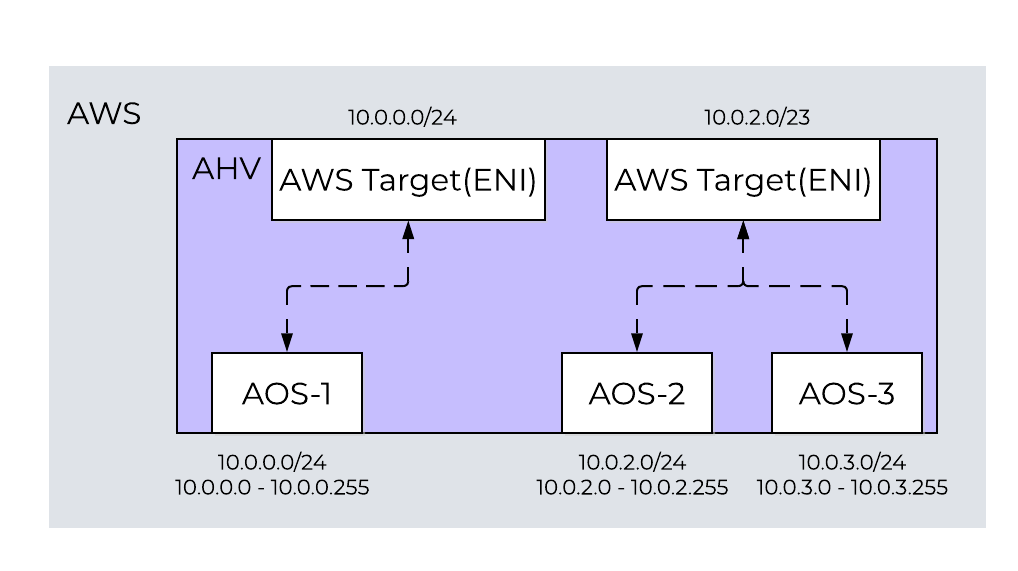

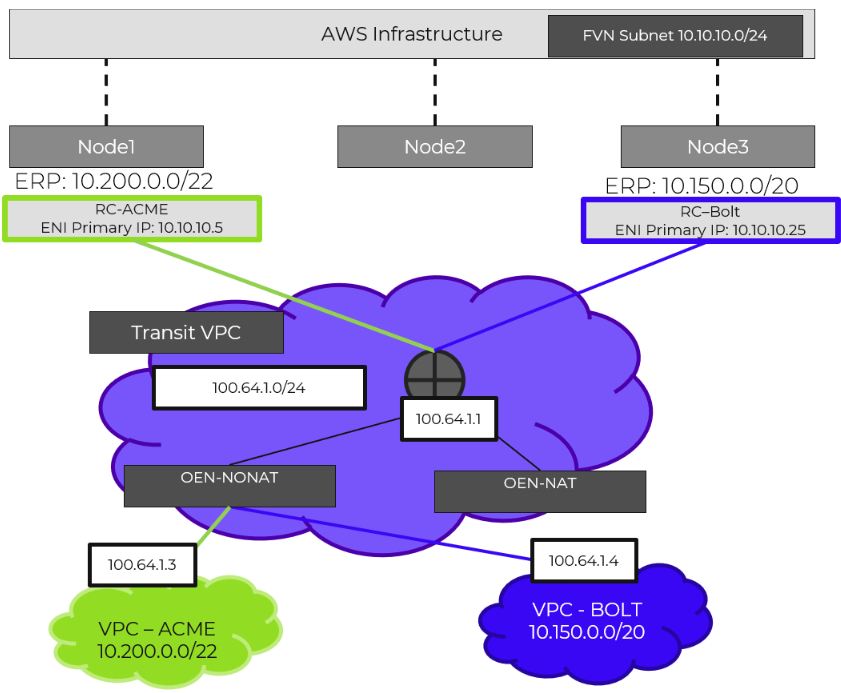

Network Controller

The network controller was released in AOS 6.0 to enable Flow Virtual Networking and VPC overlay subnets. With AOS release 6.7, Nutanix enhanced the network controller, adding support for guest VMs in VLAN-backed subnets. When the network controller is enabled, a new subnet label, VLAN basic, is created to describe the existing VLANs managed by the Acropolis leader. Network controller-managed subnets have no label and are simply called VLANs.

Supported Configurations

Core Use Cases:

- AHV networking with additional features in network controller-enabled VLAN subnets

- Flow Virtual Networking VPC overlay subnets

- Microsegmentation for network controller-enabled VLAN-backed subnets

- Microsegmentation within VPC overlay subnets

Management interface(s):

- Prism Central (PC)

Supported Environment(s):

- On-Premises:

  - AHV

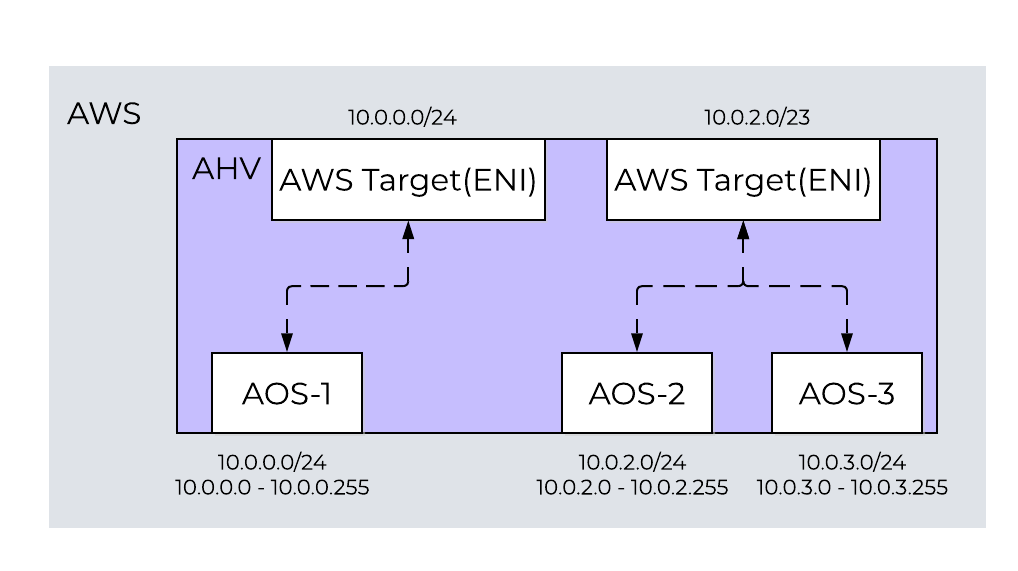

- Nutanix Cloud Clusters (NC2)

  - NC2 Azure

Prerequisites for network controller-enabled VLANs:

- Prism Central 2023.3

- AOS 6.7

- AHV 9

- MSP enabled

Upgrades:

- Included in LCM

Note

Pro tip

Deploy Prism Central as extra large for maximum network controller scalability.

Implementation Constructs

The network controller (previously Atlas Network Controller) controls the virtual networking stack used by Flow Virtual Networking VPCs and network controller-enabled VLAN-backed subnets in AHV. The network controller enables configurations at scale and allows for new features such as subnets that exist in multiple Prism Element clusters. This network stack will enable future networking and network security features. With the expanded capabilities, there are a few constructs that are different from what is used in the OVS-based architecture.

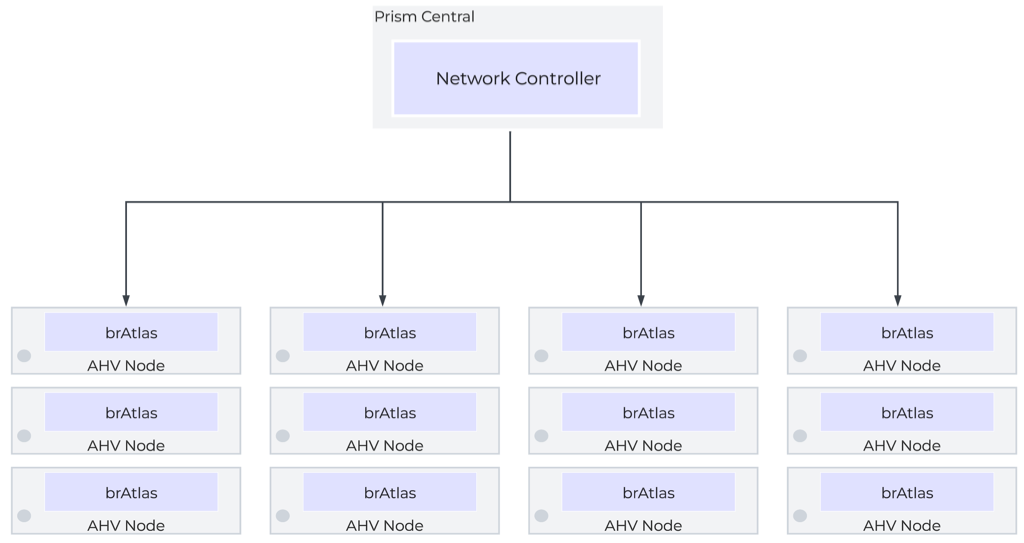

The network controller is used to centrally manage and administer VLANs, overlay subnets, IP address pools, and security policies from Prism Central. The network controller runs on Prism Central.

Network Control Plane

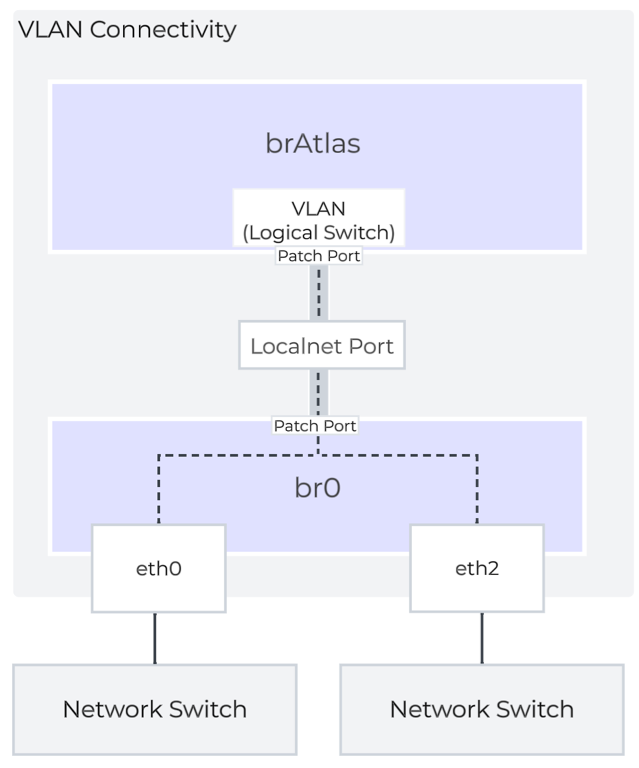

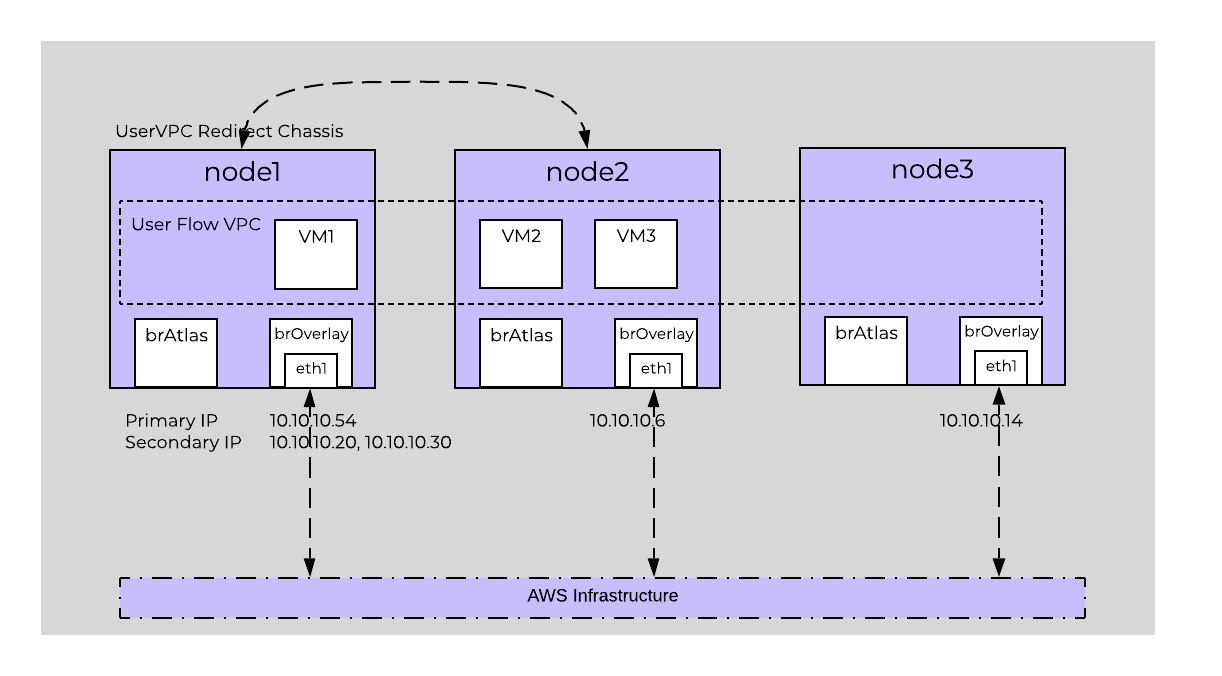

The network controller programs the network control plane and determines how packets are processed. This control plane uses Open Virtual Network (https://www.ovn.org/en/architecture/). A new virtual switch named brAtlas is applied to every AHV host.

Network Controller Control Plane

Network Bridges

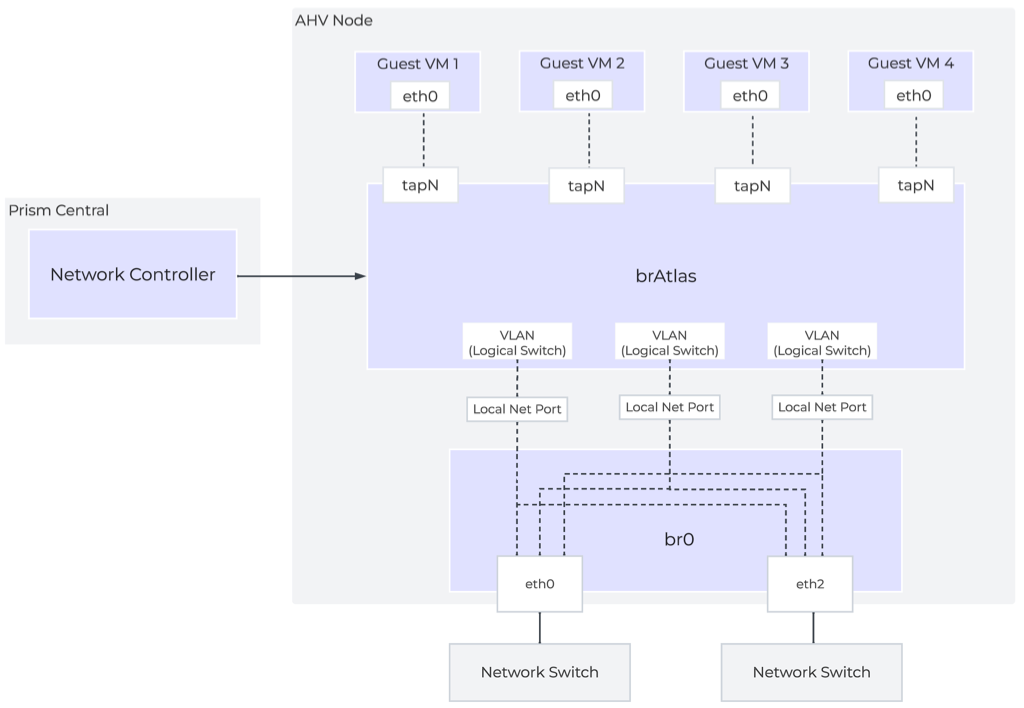

There are 2 bridge types used with the network controller, brAtlas and br0.

brAtlas

The bridge brAtlas is a virtual switch that is applied to every AHV host and managed by the network controller. Guest VMs in VPCs and network controller-backed VLANs connect to brAtlas via a tap interface.

Network Controller Architecture Overview

br0

The bridge br0 is an uplink bridge that functions as a layer 2 switch for brAtlas and connects the physical networks. There can be multiple uplink bridges if required. These additional uplink bridges would follow the same naming construct and be named br0, br1, br2, etc.

VLANs and Logical Switches

For guest VLAN tagging, every VLAN is mapped to a logical switch within brAtlas. Each logical switch will have an associated localnet port connecting to the uplink virtual switch, such as br0. A localnet port is the point of connectivity between logical switches and physical networks.

A localnet port is made up of a pair of patch ports between brAtlas and br0. A network controller-enabled VLAN-backed subnet maps to a logical switch in brAtlas.
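The relationship between VLANs, logical switches, and localnet ports can be sketched as a small data model. This is a minimal illustration only, not the network controller's actual schema: the class names, the `build_logical_switch` helper, and the patch port naming pattern are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class LocalnetPort:
    """Pair of patch ports joining brAtlas to an uplink bridge such as br0."""
    uplink_bridge: str
    patch_on_bratlas: str  # hypothetical port name
    patch_on_uplink: str   # hypothetical port name


@dataclass
class LogicalSwitch:
    """One logical switch inside brAtlas per guest VLAN."""
    vlan_id: int
    localnet: LocalnetPort
    tap_interfaces: List[str] = field(default_factory=list)


def build_logical_switch(vlan_id: int, uplink_bridge: str = "br0") -> LogicalSwitch:
    """Map a VLAN to a logical switch with its localnet port (illustrative naming)."""
    if not 0 <= vlan_id <= 4095:
        raise ValueError("VLAN ID must be between 0 and 4095")
    localnet = LocalnetPort(
        uplink_bridge=uplink_bridge,
        patch_on_bratlas=f"patch-brAtlas-to-{uplink_bridge}-{vlan_id}",
        patch_on_uplink=f"patch-{uplink_bridge}-to-brAtlas-{vlan_id}",
    )
    return LogicalSwitch(vlan_id=vlan_id, localnet=localnet)
```

Guest VM tap interfaces would then be appended to `tap_interfaces` on the logical switch that matches the VM's VLAN.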


Storage I/O Path

AHV does not leverage a traditional storage stack like ESXi or Hyper-V. All disk(s) are passed to the VM(s) as raw SCSI block devices. This keeps the I/O path lightweight and optimized.

Note


AOS abstracts kvm, virsh, qemu, libvirt, and iSCSI from the end-user and handles all backend configuration. This allows the user to focus higher up the stack on the VMs via Prism / ACLI. The following is for informational purposes only; manually modifying virsh, libvirt, etc. is not recommended.

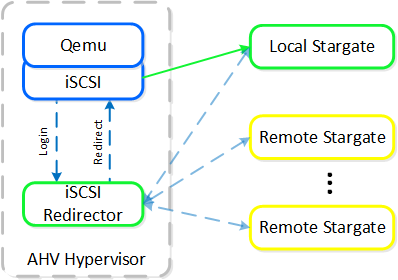

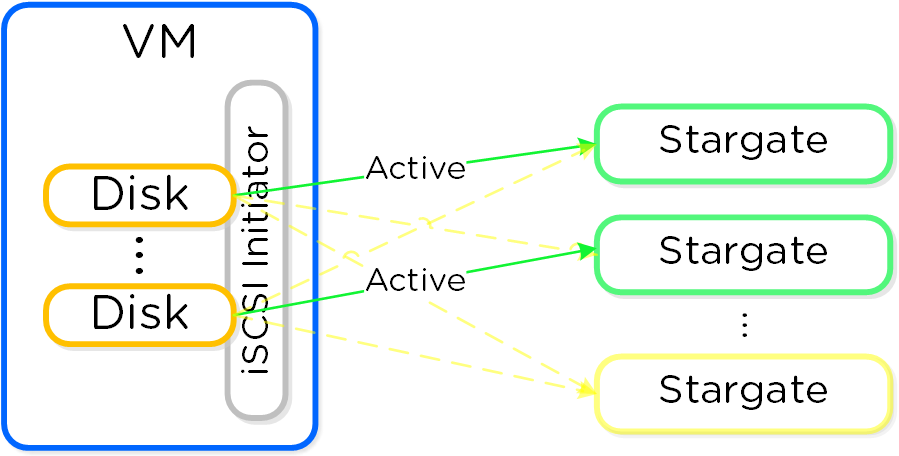

Each AHV host runs an iSCSI redirector which regularly checks the health of Stargates throughout the cluster using NOP commands.

In the iscsi_redirector log (located in /var/log/ on the AHV host), you can see each Stargate’s health:

2017-08-18 19:25:21,733 - INFO - Portal 192.168.5.254:3261 is up
...
2017-08-18 19:25:25,735 - INFO - Portal 10.3.140.158:3261 is up
2017-08-18 19:25:26,737 - INFO - Portal 10.3.140.153:3261 is up

NOTE: The local Stargate is shown via its 192.168.5.254 internal address.
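Log lines in this format can be summarized with a short script. The following is a minimal sketch that assumes every health entry follows the `Portal <ip:port> is <state>` pattern shown above; only the `up` state appears in the sample, so any other state string is an assumption. The `portal_states` function name is hypothetical.

```python
import re

# Matches lines such as:
# 2017-08-18 19:25:21,733 - INFO - Portal 192.168.5.254:3261 is up
PORTAL_LINE = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}) - (?P<level>\w+) - "
    r"Portal (?P<portal>\S+) is (?P<state>\w+)$"
)


def portal_states(log_lines):
    """Return {portal: state}, keeping the most recent state seen per portal."""
    states = {}
    for line in log_lines:
        match = PORTAL_LINE.match(line.strip())
        if match:
            # Later lines overwrite earlier ones, so the last entry wins.
            states[match.group("portal")] = match.group("state")
    return states
```

Lines that do not match the pattern (for example the elided `...` entries) are skipped.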

In the following you can see the iscsi_redirector is listening on 127.0.0.1:3261:

[root@NTNX-BEAST-1 ~]# netstat -tnlp | egrep tcp.*3261
Proto ... Local Address Foreign Address State PID/Program name
...
tcp ... 127.0.0.1:3261 0.0.0.0:* LISTEN 8044/python
...

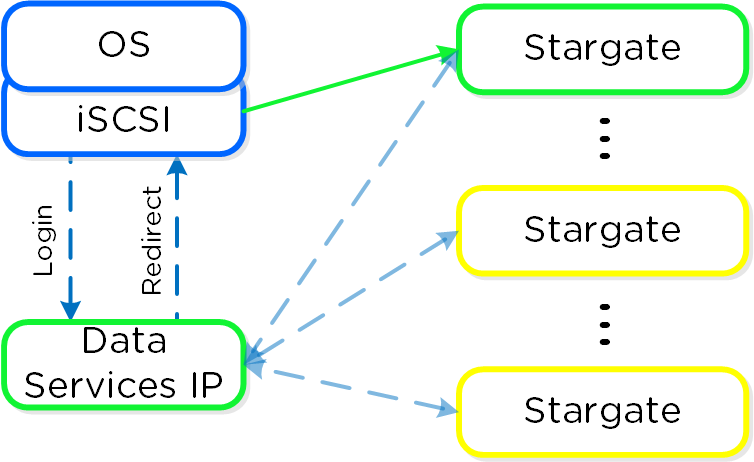

QEMU is configured with the iSCSI redirector as the iSCSI target portal. Upon a login request, the redirector will perform an iSCSI login redirect to a healthy Stargate (preferably the local one).

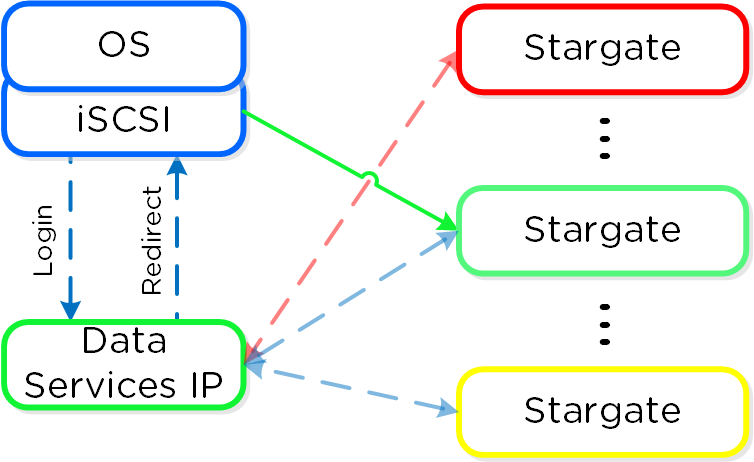

iSCSI Multi-pathing - Normal State


The preferred controller type is virtio-scsi (default for SCSI devices). IDE devices, while possible, are not recommended for most scenarios. For virtio to be used with Windows, the virtio drivers, Nutanix mobility drivers, or Nutanix guest tools must be installed. Modern Linux distros ship with virtio pre-installed.

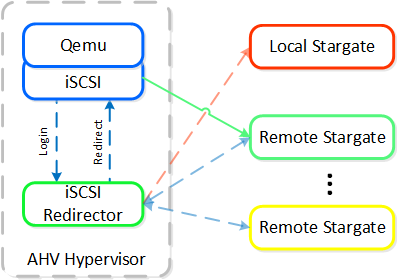

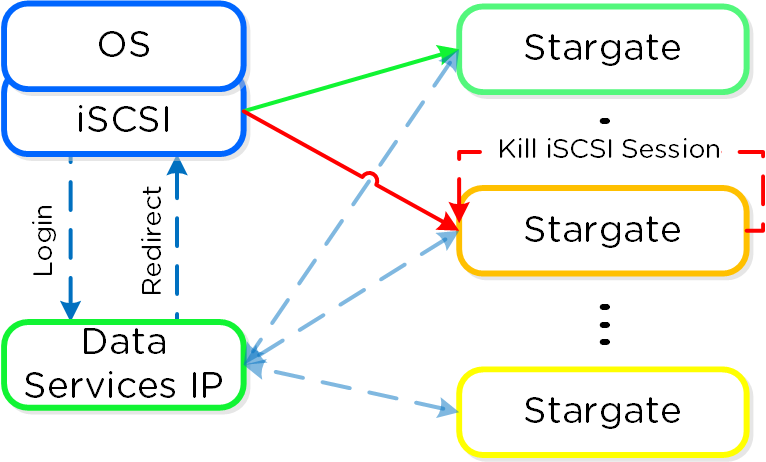

In the event that the active Stargate goes down (thus failing to respond to the NOP OUT command), the iSCSI redirector will mark the local Stargate as unhealthy. When QEMU retries the iSCSI login, the redirector will redirect the login to another healthy Stargate.

iSCSI Multi-pathing - Local CVM Down


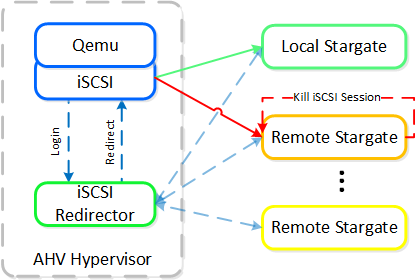

Once the local CVM’s Stargate comes back up (and begins responding to the NOP OUT commands), the remote Stargate will quiesce then kill all connections to remote iSCSI sessions. QEMU will then attempt an iSCSI login again and will be redirected to the local Stargate.

iSCSI Multi-pathing - Local CVM Back Up
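The redirect decision described in the three states above (normal, local CVM down, local CVM back up) can be sketched as a single selection function. This is an illustration under stated assumptions, not the redirector's actual code: the `pick_stargate` name is hypothetical, and choosing the first healthy remote portal in sorted order is an arbitrary policy picked here only to keep the example deterministic.

```python
LOCAL_PORTAL = "192.168.5.254:3261"  # local Stargate's internal address


def pick_stargate(health, local=LOCAL_PORTAL):
    """Choose a login target from {portal: is_healthy}.

    The local Stargate is preferred whenever it is healthy; otherwise
    a healthy remote Stargate is used. Returns None if nothing is healthy.
    """
    if health.get(local):
        return local
    for portal in sorted(health):
        # Assumption: any healthy remote is acceptable; sorted order is arbitrary.
        if portal != local and health[portal]:
            return portal
    return None
```

Calling the function again after the local portal's health flips back to `True` returns the local address, which mirrors the fail-back behavior.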


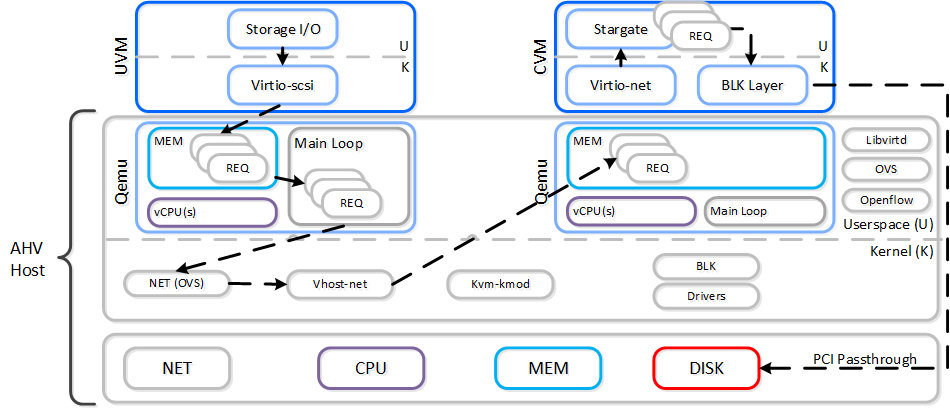

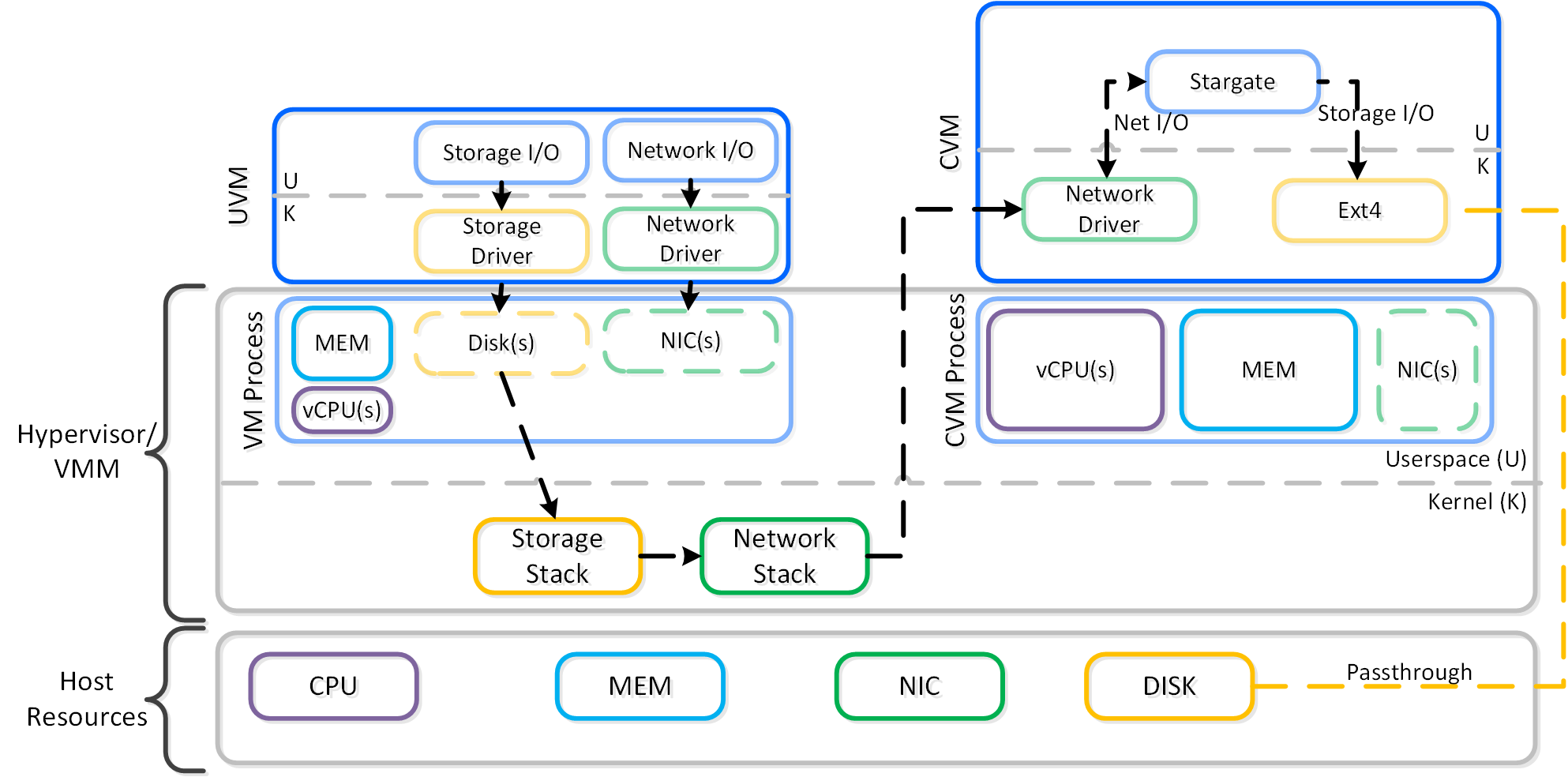

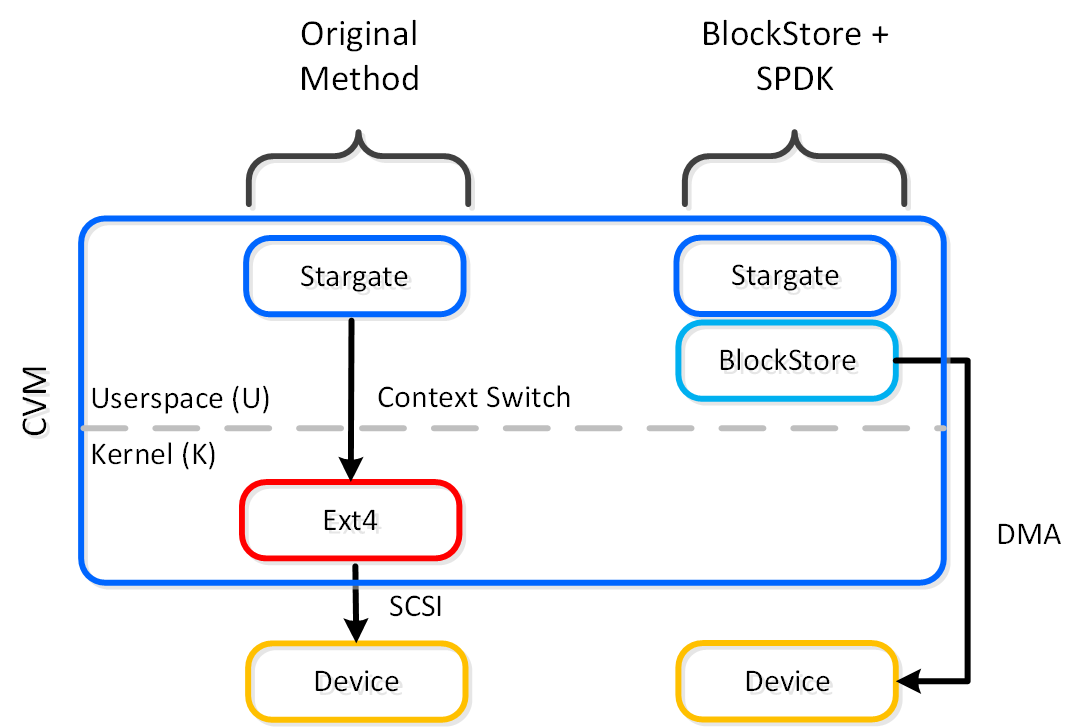

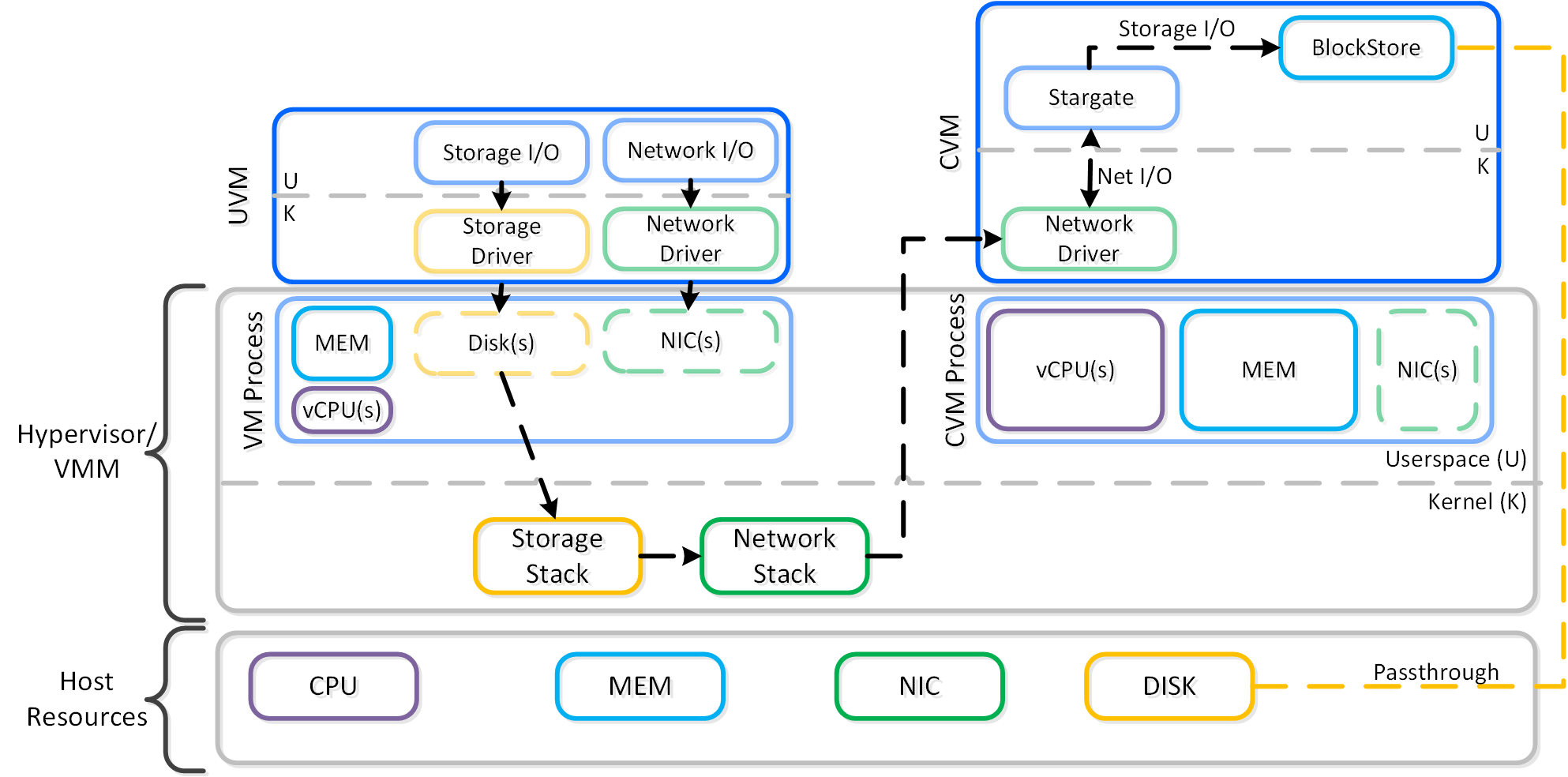

Traditional I/O Path

Like every hypervisor and OS, there is a mix of user and kernel space components which interact to perform a common activity. Prior to reading the following, it is recommended to read the ‘User vs. Kernel Space’ section to learn more about how they interact with each other.

When a VM performs an I/O it will perform the following (some steps have been excluded for clarity):

- VM’s OS performs SCSI command(s) to virtual device(s)

- Virtio-scsi takes those requests and places them in the guest’s memory

- Requests are handled by the QEMU main loop

- Libiscsi inspects each request and forwards it

- Network layer forwards requests to local CVM (or externally if local is unavailable)

- Stargate handles request(s)

The following shows this sample flow:

AHV VirtIO Data Path - Classic


Looking at an AHV host, you can see qemu-kvm has established sessions with a healthy Stargate using the local bridge and IPs. For external communication, the external host and Stargate IPs will be used. NOTE: There will be one session per disk device (look at PID 24845).

[root@NTNX-BEAST-1 log]# netstat -np | egrep tcp.*qemu
Proto ... Local Address Foreign Address State PID/Program name
tcp ... 192.168.5.1:50410 192.168.5.254:3261 ESTABLISHED 25293/qemu-kvm
tcp ... 192.168.5.1:50434 192.168.5.254:3261 ESTABLISHED 23198/qemu-kvm
tcp ... 192.168.5.1:50464 192.168.5.254:3261 ESTABLISHED 24845/qemu-kvm
tcp ... 192.168.5.1:50465 192.168.5.254:3261 ESTABLISHED 24845/qemu-kvm
...
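To check the one-session-per-disk relationship, the netstat output can be tallied per process. The following is a minimal sketch that assumes the last whitespace-separated field of each row is `PID/Program name`, as in the output above; the `sessions_per_process` function name is hypothetical.

```python
from collections import Counter


def sessions_per_process(netstat_lines, program="qemu-kvm"):
    """Count ESTABLISHED connections per PID for the given program name."""
    counts = Counter()
    for line in netstat_lines:
        fields = line.split()
        if not fields or "ESTABLISHED" not in fields:
            continue  # skip headers, elided rows, and other socket states
        pid, _, prog = fields[-1].partition("/")
        if prog == program and pid.isdigit():
            counts[int(pid)] += 1
    return dict(counts)
```

A PID with a count of two, such as 24845 in the sample, would correspond to a VM with two disk devices.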

Now, in this path there are a few inefficiencies, as the main loop is single-threaded and libiscsi inspects every SCSI command.

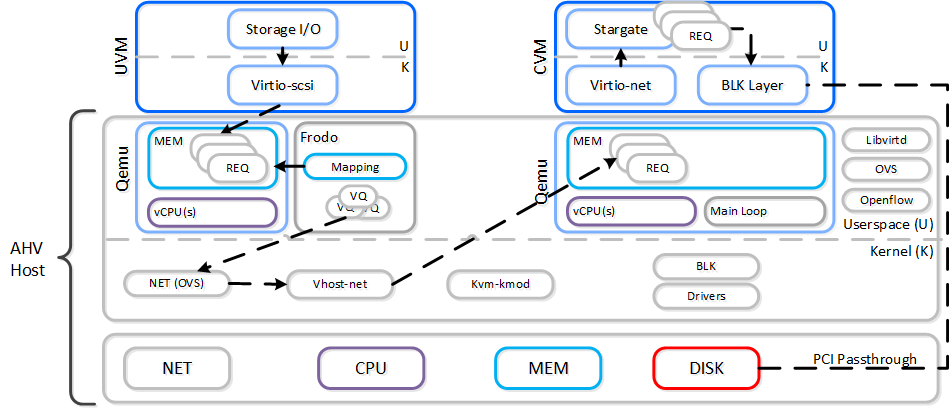

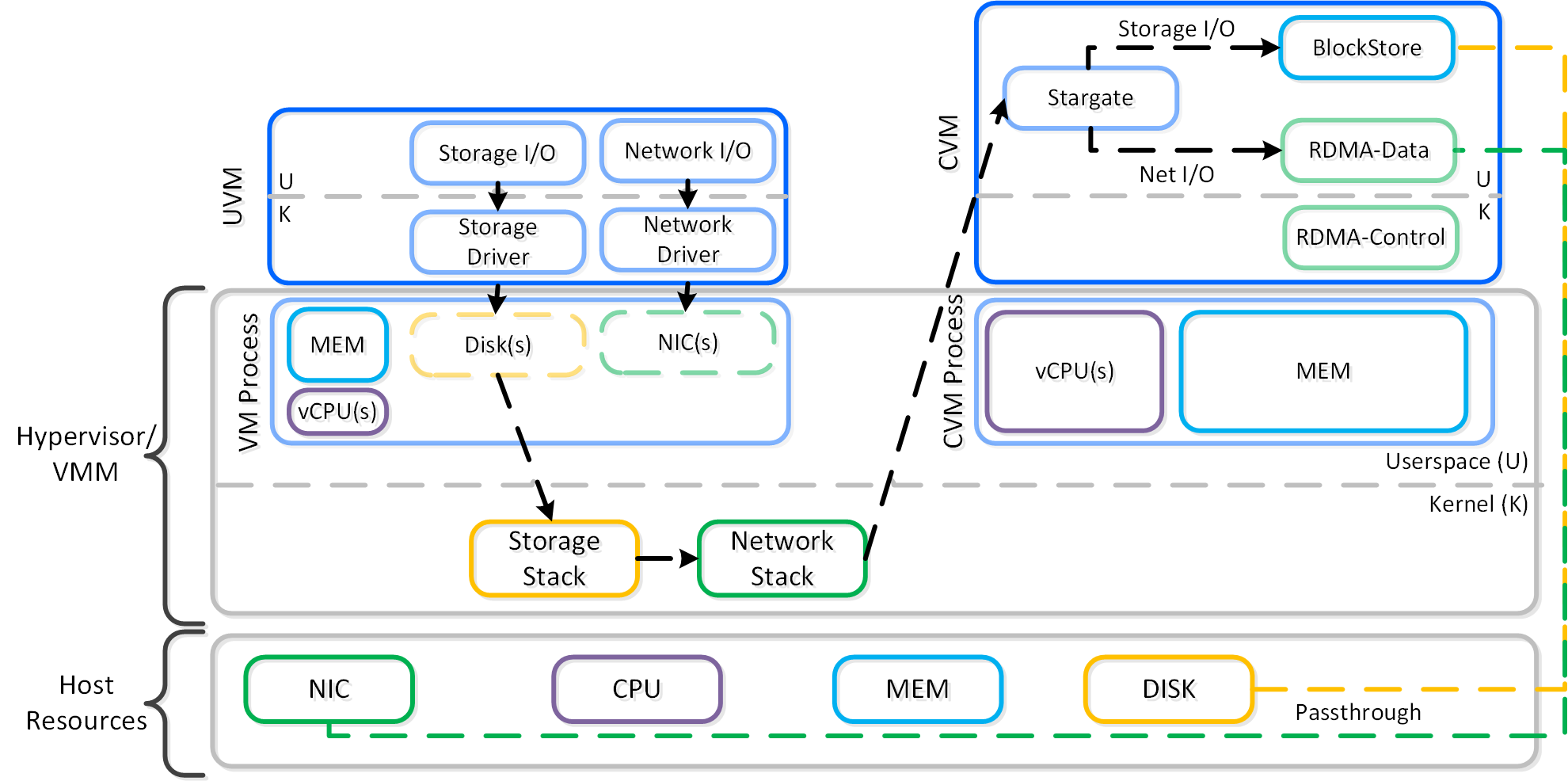

Frodo I/O Path (aka AHV Turbo Mode)

As storage technologies continue to evolve and become more efficient, so must we. Given the fact that we fully control AHV and the Nutanix stack, this was an area of opportunity.

In short, Frodo is a heavily optimized I/O path for AHV that allows for higher throughput, lower latency and less CPU overhead.

Note

Pro tip

Frodo is enabled by default on VMs powered on after AOS 5.5.X.

When a VM performs an I/O it will perform the following (some steps have been excluded for clarity):

- VM’s OS performs SCSI command(s) to virtual device(s)

- Virtio-scsi takes those requests and places them in the guest’s memory

- Requests are handled by Frodo

- Custom libiscsi appends the iSCSI header and forwards the request

- Network layer forwards requests to local CVM (or externally if local is unavailable)

- Stargate handles request(s)

The following shows this sample flow:

AHV VirtIO Data Path - Frodo


This path looks similar to the traditional I/O path except for a few key differences:

- Qemu main loop is replaced by Frodo (vhost-user-scsi)

- Frodo exposes multiple virtual queues (VQs) to the guest (one per vCPU)

- Leverages multiple threads for multi-vCPU VMs

- Libiscsi is replaced by our own much more lightweight version

The guest will notice that it now has multiple queues for the disk device(s); other than that, it will simply see the performance improvements. In some cases we’ve seen a CPU overhead reduction of 25% to perform the I/O and performance increases of up to 3x compared to Qemu! Compared to another hypervisor, we’ve seen CPU overhead to perform I/Os drop by up to 3x.

Looking at an AHV host, you will see a frodo process for each VM (qemu-kvm process) running:

[root@drt-itppc03-1 ~]# ps aux | egrep frodo
... /usr/libexec/qemu-kvm ... -chardev socket,id=frodo0,fd=3 \
 -device vhost-user-scsi-pci,chardev=frodo0,num_queues=16...
... /usr/libexec/frodo ... 127.0.0.1:3261 -t iqn.2010-06.com.nutanix:vmdisk...
...

Note

Pro tip

To take advantage of Frodo's multiple threads / connections, you must have >= 2 vCPU for a VM when it is powered on.

It can be characterized by the following:

- 1 vCPU UVM:

- 1 Frodo thread / session per disk device

- >= 2 vCPU UVM:

- 2 Frodo threads / sessions per disk device
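The rule above reduces to a small calculation. This is a minimal sketch of that arithmetic only; the `frodo_sessions` function name is hypothetical and the vCPU count is assumed to be the value at power-on.

```python
def frodo_sessions(vcpus: int, disks: int) -> int:
    """Expected number of Frodo threads / sessions for a VM.

    1 vCPU     -> 1 thread / session per disk device
    >= 2 vCPUs -> 2 threads / sessions per disk device
    """
    if vcpus < 1:
        raise ValueError("a VM needs at least one vCPU")
    if disks < 0:
        raise ValueError("disk count cannot be negative")
    per_disk = 1 if vcpus == 1 else 2
    return per_disk * disks
```

For example, `frodo_sessions(4, 2)` evaluates to 4, while `frodo_sessions(1, 2)` evaluates to 2.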

In the following, you can see Frodo has established sessions with a healthy Stargate using the local bridge and IPs. For external communication, the external host and Stargate IPs will be used.

[root@NTNX-BEAST-1 log]# netstat -np | egrep tcp.*frodo
Proto ... Local Address Foreign Address State PID/Program name
tcp ... 192.168.5.1:39568 192.168.5.254:3261 ESTABLISHED 42957/frodo
tcp ... 192.168.5.1:39538 192.168.5.254:3261 ESTABLISHED 42957/frodo
tcp ... 192.168.5.1:39580 192.168.5.254:3261 ESTABLISHED 42957/frodo
tcp ... 192.168.5.1:39592 192.168.5.254:3261 ESTABLISHED 42957/frodo
...

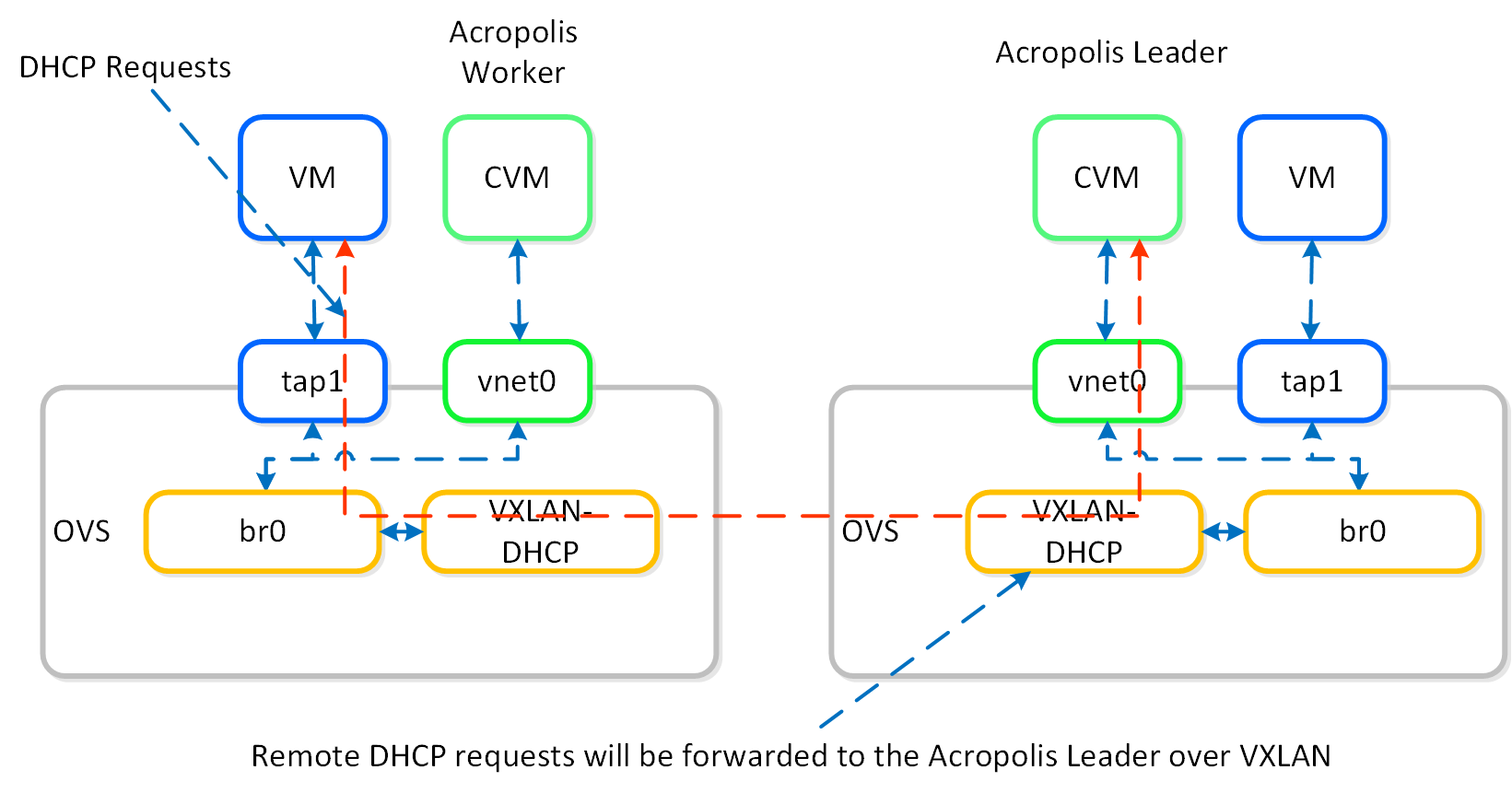

IP Address Management

The Acropolis IP address management (IPAM) solution provides the ability to establish a DHCP scope and assign addresses to VMs. This leverages VXLAN and OpenFlow rules to intercept the DHCP request and respond with a DHCP response.

Here we show an example DHCP request using the Nutanix IPAM solution where the Acropolis Leader is running locally:

IPAM - Local Acropolis Leader


If the Acropolis Leader is running remotely, the same VXLAN tunnel will be leveraged to handle the request over the network.

IPAM - Remote Acropolis Leader


Traditional DHCP / IPAM solutions can also be leveraged in an ‘unmanaged’ network scenario.
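The address assignment side of a managed network can be illustrated with a toy pool allocator. This is a generic sketch of DHCP-style leasing and makes no claim about how Acropolis implements IPAM; it does not model the VXLAN or OpenFlow interception, and the `DhcpPool` class and its methods are hypothetical.

```python
import ipaddress


class DhcpPool:
    """Toy DHCP scope: hands out the lowest free address per MAC."""

    def __init__(self, start: str, end: str):
        self._start = ipaddress.ip_address(start)
        self._end = ipaddress.ip_address(end)
        if self._end < self._start:
            raise ValueError("pool end must not precede pool start")
        self._leases = {}  # MAC address -> leased IP

    def request(self, mac: str):
        """Return the lease for this MAC, allocating one if needed."""
        if mac in self._leases:
            return str(self._leases[mac])  # existing lease is reused
        used = set(self._leases.values())
        addr = self._start
        while addr <= self._end:
            if addr not in used:
                self._leases[mac] = addr
                return str(addr)
            addr += 1
        return None  # pool exhausted
```

A VM that asks again with the same MAC receives the same address, and a request against an exhausted pool returns `None`.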

VM High Availability (HA)

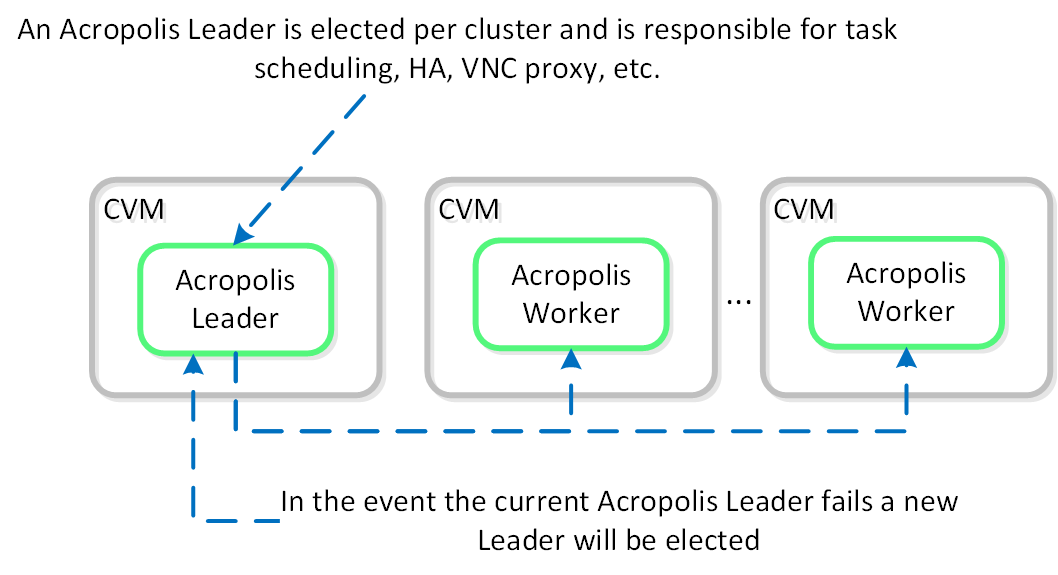

AHV VM HA is a feature built to ensure VM availability in the event of a host or block outage. In the event of a host failure, the VMs previously running on that host will be restarted on other healthy nodes throughout the cluster. The Acropolis Leader is responsible for restarting the VM(s) on the healthy host(s).

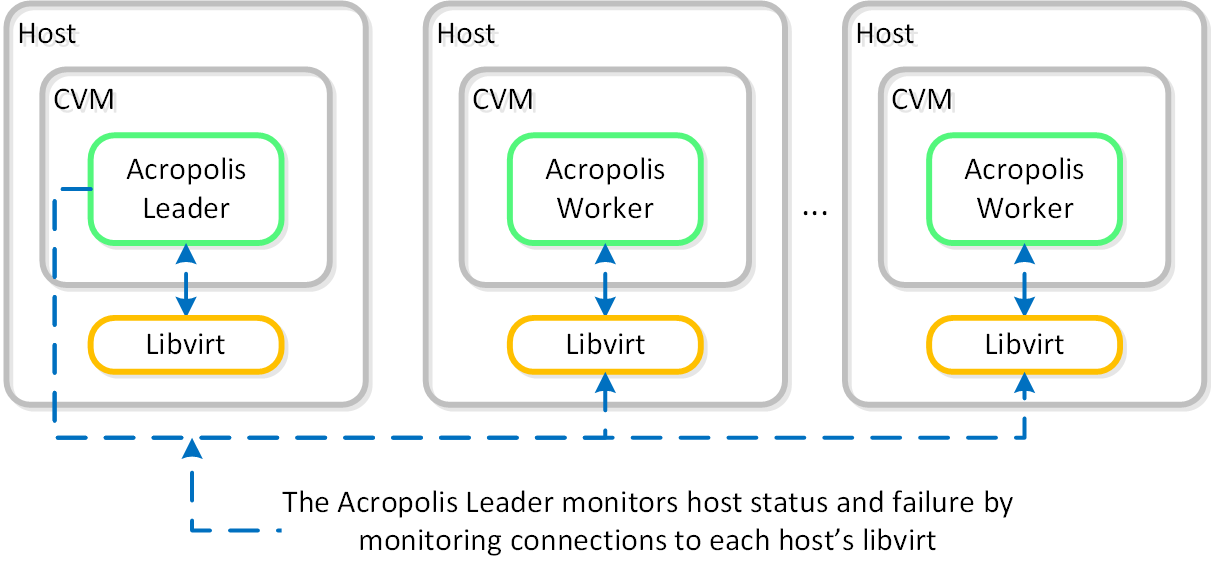

The Acropolis Leader tracks host health by monitoring its connections to libvirt on all cluster hosts:

HA - Host Monitoring


Once the libvirt connection goes down, the countdown to the HA restart is initiated. Should the libvirt connection fail to be re-established within the timeout, Acropolis will restart VMs that were running on the disconnected host. When this occurs, VMs should be restarted within 120 seconds.

In the event the Acropolis Leader becomes partitioned, isolated, or fails, a new Acropolis Leader will be elected on the healthy portion of the cluster. If a cluster becomes partitioned (e.g., X nodes can’t talk to the other Y nodes), the side with quorum will remain up and VM(s) will be restarted on those hosts.

There are two main modes for VM HA:

- Default

- This mode requires no configuration and is included by default when installing an AHV-based Nutanix cluster. When an AHV host becomes unavailable, the VMs that were running on the failed AHV host restart on the remaining hosts, depending on the available resources. Not all of the failed VMs restart if the remaining hosts do not have sufficient resources.

- Guarantee

- This nondefault configuration reserves space throughout the AHV hosts in the cluster to guarantee that all failed VMs can restart on other hosts in the AHV cluster during a host failure. To enable Guarantee mode, select the Enable HA check box, as shown in the figure below. A message then appears displaying the amount of memory reserved and how many AHV host failures can be tolerated.

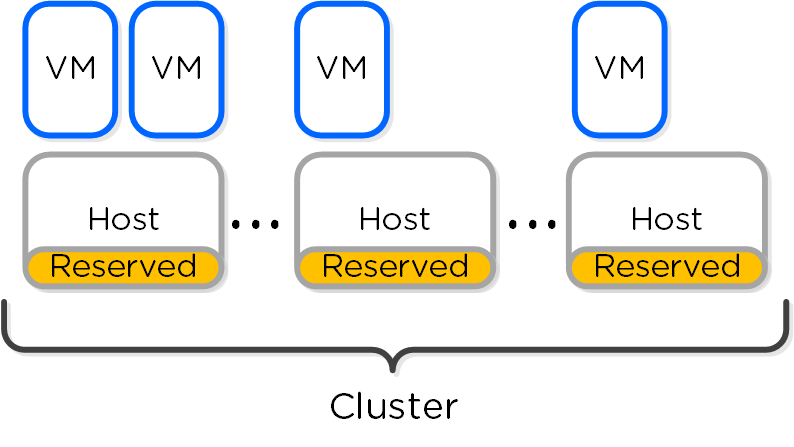

Resource Reservations

When using the Guarantee mode for VM HA, the system will reserve host resources for VMs. The amount of resources which are reserved is summarized by the following:

- If all containers are RF2 (FT1)

- One “host” worth of resources

- If any containers are RF3 (FT2)

- Two “hosts” worth of resources

When hosts have uneven memory capacities the system will use the largest host’s memory capacity when determining how much to reserve per host.
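The reservation rule above can be expressed as a short calculation. This is a sketch under one interpretation of the text, namely that each reserved "host" is sized at the largest host's memory capacity; the `ha_reserved_memory_gib` function name and the GiB unit are assumptions made for illustration.

```python
def ha_reserved_memory_gib(host_memory_gib, container_rfs):
    """Estimate total memory reserved in Guarantee mode.

    host_memory_gib: memory capacity of every host in the cluster
    container_rfs:   replication factor (2 or 3) of every container
    """
    if not host_memory_gib:
        raise ValueError("at least one host is required")
    # One host's worth if all containers are RF2, two if any is RF3.
    hosts_to_reserve = 2 if any(rf == 3 for rf in container_rfs) else 1
    # Uneven clusters are sized using the largest host (interpretation).
    return hosts_to_reserve * max(host_memory_gib)
```

For a cluster with hosts of 256, 512, 512, and 384 GiB and only RF2 containers, the function returns 512; adding a single RF3 container doubles that to 1024.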

Note

Post 5.0 Resource Reservations

Prior to 5.0, we supported both host and segment based reservations. With 5.0 and later, we only support a segment based reservation, which is automatically implemented when the Guarantee HA mode is selected.

Reserved segments distribute the resource reservation across all hosts in a cluster. In this scenario, each host will share a portion of the reservation for HA. This ensures the overall cluster has enough failover capacity to restart VM(s) in the event of a host failure.

The figure shows an example scenario with reserved segments:

HA - Reserved Segment


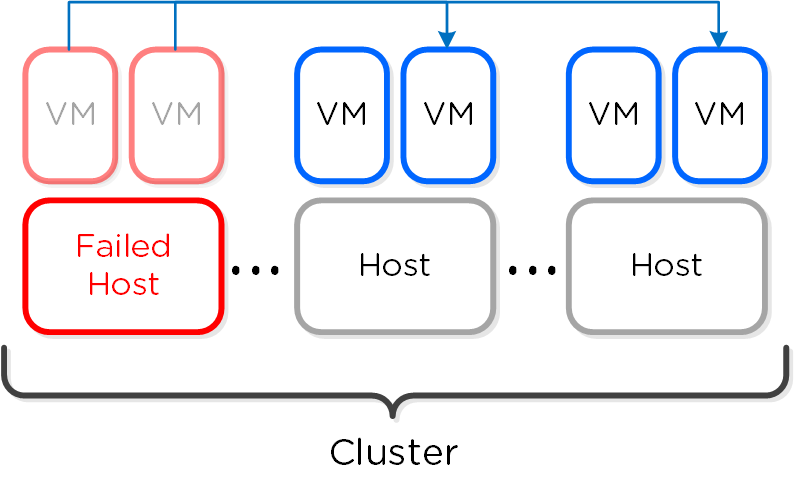

In the event of a host failure, VM(s) will be restarted throughout the cluster on the remaining healthy hosts:

HA - Reserved Segment - Fail Over


Note

Reserved segment(s) calculation

The system will automatically calculate the total number of reserved segments and per host reservation.

Finding reservations reduces to a well-known class of problems called Knapsack. Finding the optimal solution is NP-hard (exponential), but heuristic solutions can come close to optimal for the common case. We implement one such algorithm called MTHM. Nutanix will continue improving its placement algorithms.
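To give a feel for how a heuristic approaches this kind of packing problem, the following sketch places VMs on hosts with a simple greedy first-fit-decreasing strategy. It is explicitly not MTHM and not the Nutanix placement algorithm; it is a much simpler stand-in technique, and the `place_vms` function name and its inputs are hypothetical.

```python
def place_vms(vm_memory, host_free):
    """Greedy first-fit-decreasing placement.

    vm_memory: {vm_name: memory needed}
    host_free: {host_name: free memory}
    Returns {vm_name: host_name or None if the VM could not be placed}.
    """
    free = dict(host_free)  # work on a copy so the input is not mutated
    placement = {}
    # Largest VMs first; ties are broken by name for repeatable results.
    for vm, need in sorted(vm_memory.items(), key=lambda kv: (-kv[1], kv[0])):
        target = next((h for h in sorted(free) if free[h] >= need), None)
        placement[vm] = target
        if target is not None:
            free[target] -= need
    return placement
```

Placing the largest VMs first tends to leave fewer unusable gaps than arbitrary ordering, which is the intuition behind many Knapsack-style heuristics, but it does not guarantee an optimal packing.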


Command Reference

Show OVS uplinks

Description: Show ovs uplinks for local host

manage_ovs show_uplinks

Description: Show ovs uplinks for full cluster

allssh "manage_ovs show_uplinks"

Show OVS interfaces

Description: Show ovs interfaces for local host

manage_ovs show_interfaces

Description: Show ovs interfaces for full cluster

allssh "manage_ovs show_interfaces"

Show OVS switch information

Description: Show switch information

ovs-vsctl show

List OVS bridges

Description: List bridges

ovs-vsctl list br

Show OVS bridge information

Description: Show OVS port information

ovs-vsctl list port br0
ovs-vsctl list port bond

Show OVS interface information

Description: Show interface information

ovs-vsctl list interface br0

Show ports / interfaces on bridge

Description: Show ports on a bridge

ovs-vsctl list-ports br0

Description: Show ifaces on a bridge

ovs-vsctl list-ifaces br0

Add ports to bridge

Description: Add port to bridge

ovs-vsctl add-port bridge port

Description: Add bond port to bridge

ovs-vsctl add-bond bridge port iface

Show OVS bond details

Description: Show bond details

ovs-appctl bond/show bond

Example:

ovs-appctl bond/show bond0

Set bond mode and configure LACP on bond

Description: Enable LACP on ports

ovs-vsctl set port bond lacp=<active|passive>

Description: Enable on all hosts for bond0

for i in `hostips`; do echo $i; ssh $i "source /etc/profile > /dev/null 2>&1; ovs-vsctl set port bond0 lacp=active"; done

Show LACP details on bond

Description: Show LACP details

ovs-appctl lacp/show bond

Set bond mode

Description: Set bond mode on ports

ovs-vsctl set port bond bond_mode=<active-backup|balance-slb|balance-tcp>

Show OpenFlow information

Description: Show OVS openflow details

ovs-ofctl show br0

Description: Show OpenFlow rules

ovs-ofctl dump-flows br0

Get QEMU PIDs and top information

Description: Get QEMU PIDs

ps aux | grep qemu | awk '{print $2}'

Description: Get top metrics for specific PID

top -p PID

Get active Stargate for QEMU processes

Description: Get active Stargates for storage I/O for each QEMU process

netstat -np | egrep tcp.*qemu

Metrics and Thresholds

More coming soon!

Troubleshooting & Advanced Administration

Check iSCSI Redirector Logs

Description: Check iSCSI Redirector Logs for all hosts

for i in `hostips`; do echo $i; ssh root@$i cat /var/log/iscsi_redirector;done

Example for single host

ssh root@HOSTIP
cat /var/log/iscsi_redirector

Monitor CPU steal (stolen CPU)

Description: Monitor CPU steal time (stolen CPU)

Launch top and look for %st (bold below)

Cpu(s): 0.0%us, 0.0%sy, 0.0%ni, 96.4%id, 0.0%wa, 0.0%hi, 0.1%si, **0.0%st**
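When steal time needs to be tracked over many samples, the `%st` value can be pulled out of the summary line programmatically. This is a minimal sketch that assumes the line keeps a `<number>%st` or `<number> st` field, as in the sample above; the `cpu_steal_percent` function name is hypothetical.

```python
import re

# Captures the number immediately preceding the "st" (steal) field.
STEAL_FIELD = re.compile(r"(\d+(?:\.\d+)?)\s*%?\s*st\b")


def cpu_steal_percent(top_cpu_line):
    """Return the steal percentage from a top CPU summary line, or None."""
    match = STEAL_FIELD.search(top_cpu_line)
    return float(match.group(1)) if match else None
```

A sustained non-zero value indicates the vCPU was ready to run but had to wait for a physical CPU.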

Monitor VM network resource stats

Description: Monitor VM resource stats

Launch virt-top

virt-top

vSphere

Learn how VMware ESXi works on Nutanix.

Node Architecture

In ESXi deployments, the Controller VM (CVM) runs as a VM and disks are presented using VMDirectPath I/O. This allows the full PCI controller (and attached devices) to be passed through directly to the CVM and bypass the hypervisor.

ESXi Node Architecture


Configuration Maximums and Scalability

The following configuration maximums and scalability limits are applicable:

- Maximum cluster size: 48

- Maximum vCPUs per VM: 256

- Maximum memory per VM: 6TB

- Maximum virtual disk size: 62TB

- Maximum VMs per host: 1,024

- Maximum VMs per cluster: 8,000 (2,048 per datastore if HA is enabled)

NOTE: As of vSphere 7.0 and AOS 6.8. Refer to AOS Configuration Maximums and ESX Configuration Maximums for other versions.
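A planned VM configuration can be compared against the per-VM limits listed above with a simple lookup. This sketch hard-codes the values from this list (vSphere 7.0 and AOS 6.8) and should be adjusted for other versions; the dictionary keys and the `violations` function name are hypothetical.

```python
# Per-VM limits taken from the list above (vSphere 7.0 / AOS 6.8).
ESXI_VM_MAXIMUMS = {
    "vcpus": 256,      # maximum vCPUs per VM
    "memory_tb": 6,    # maximum memory per VM, in TB
    "disk_tb": 62,     # maximum virtual disk size, in TB
}


def violations(vm_config):
    """Return the sorted names of settings that exceed a documented maximum."""
    return sorted(
        key
        for key, limit in ESXI_VM_MAXIMUMS.items()
        if vm_config.get(key, 0) > limit
    )
```

An empty list means the configuration is within the documented per-VM limits.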

Note

Pro tip

When benchmarking on ESXi hosts, always test with the ESXi host power policy set to 'High performance'. This will disable P- and C-states and ensure the test results aren't artificially limited.

Networking

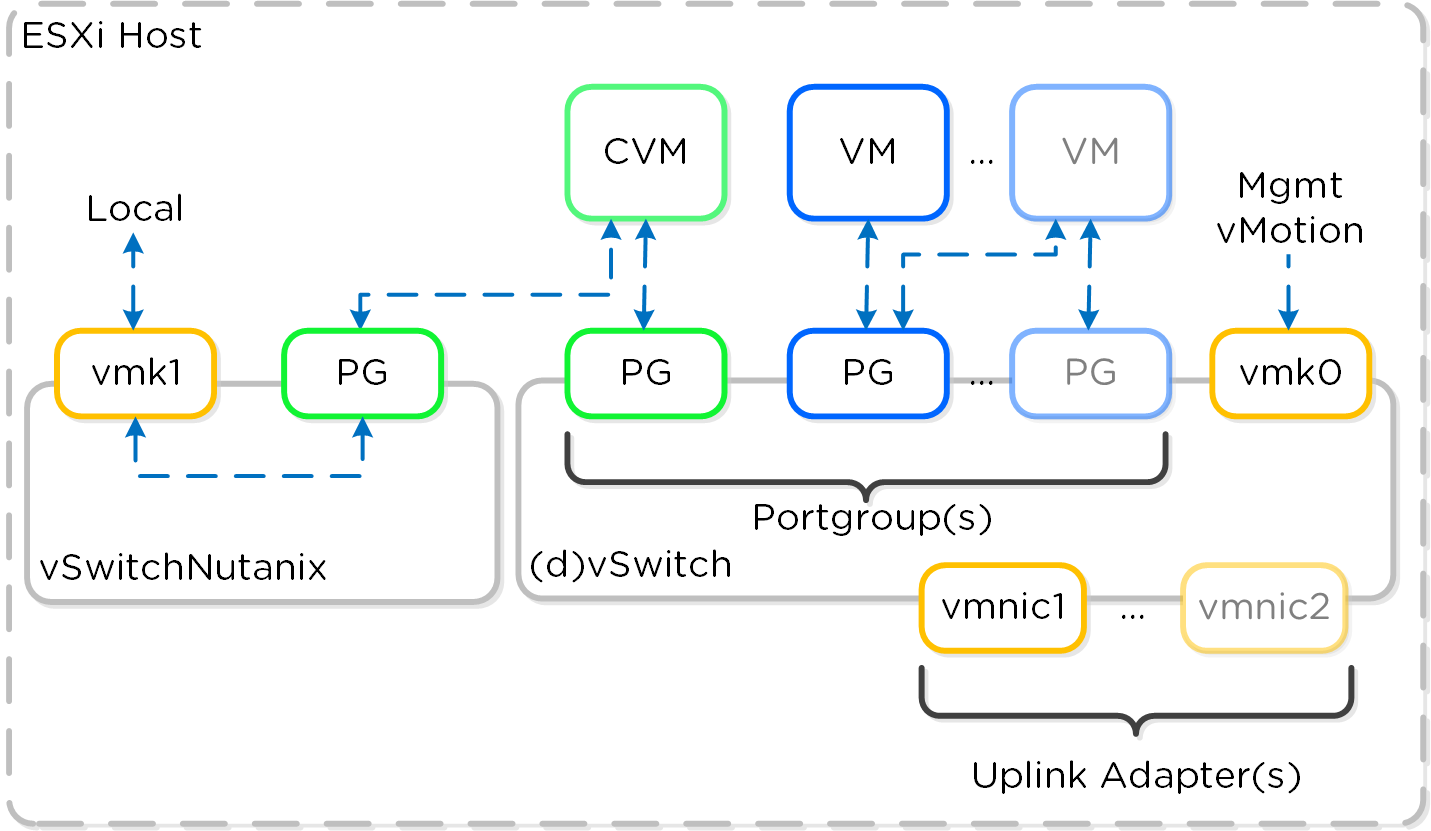

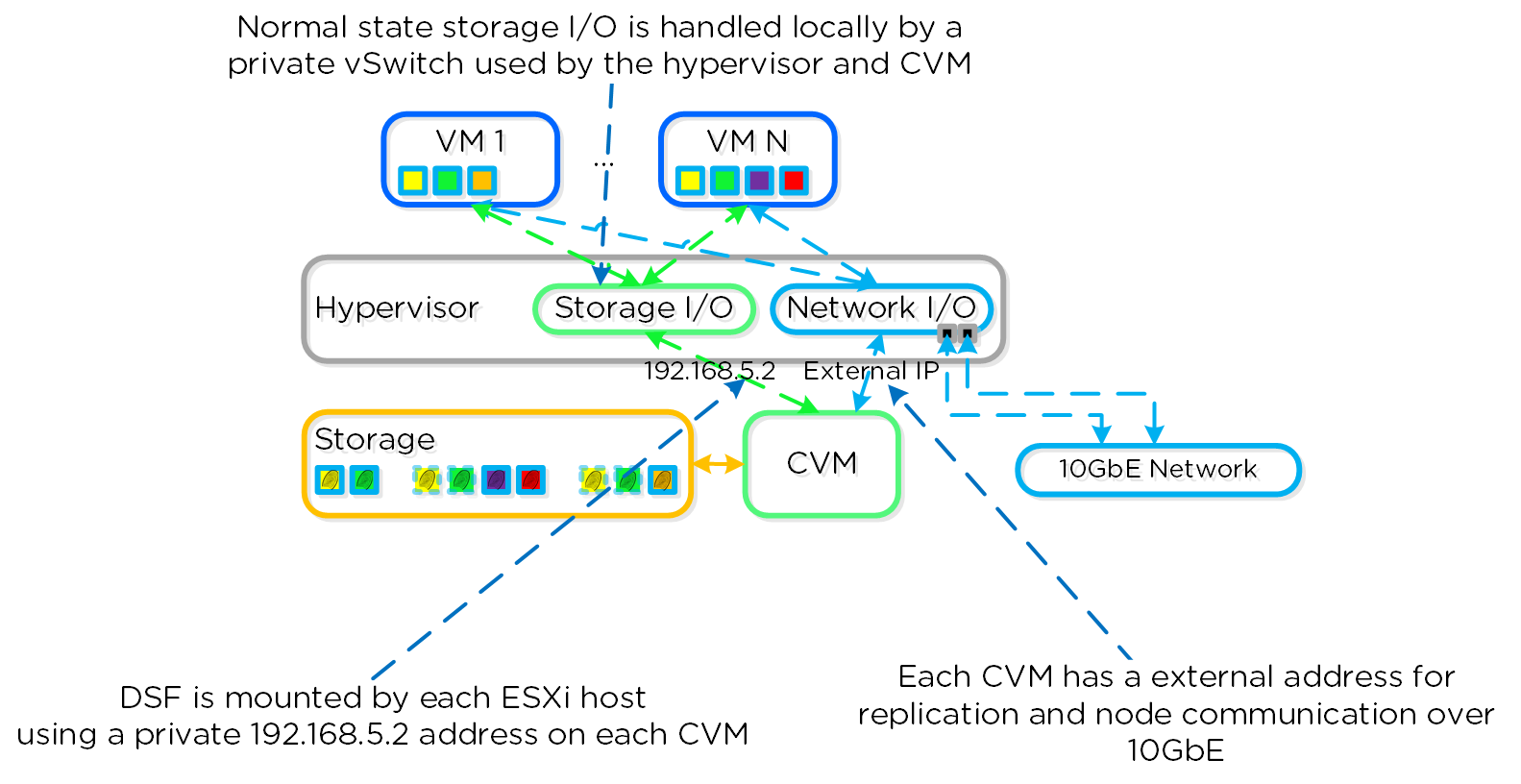

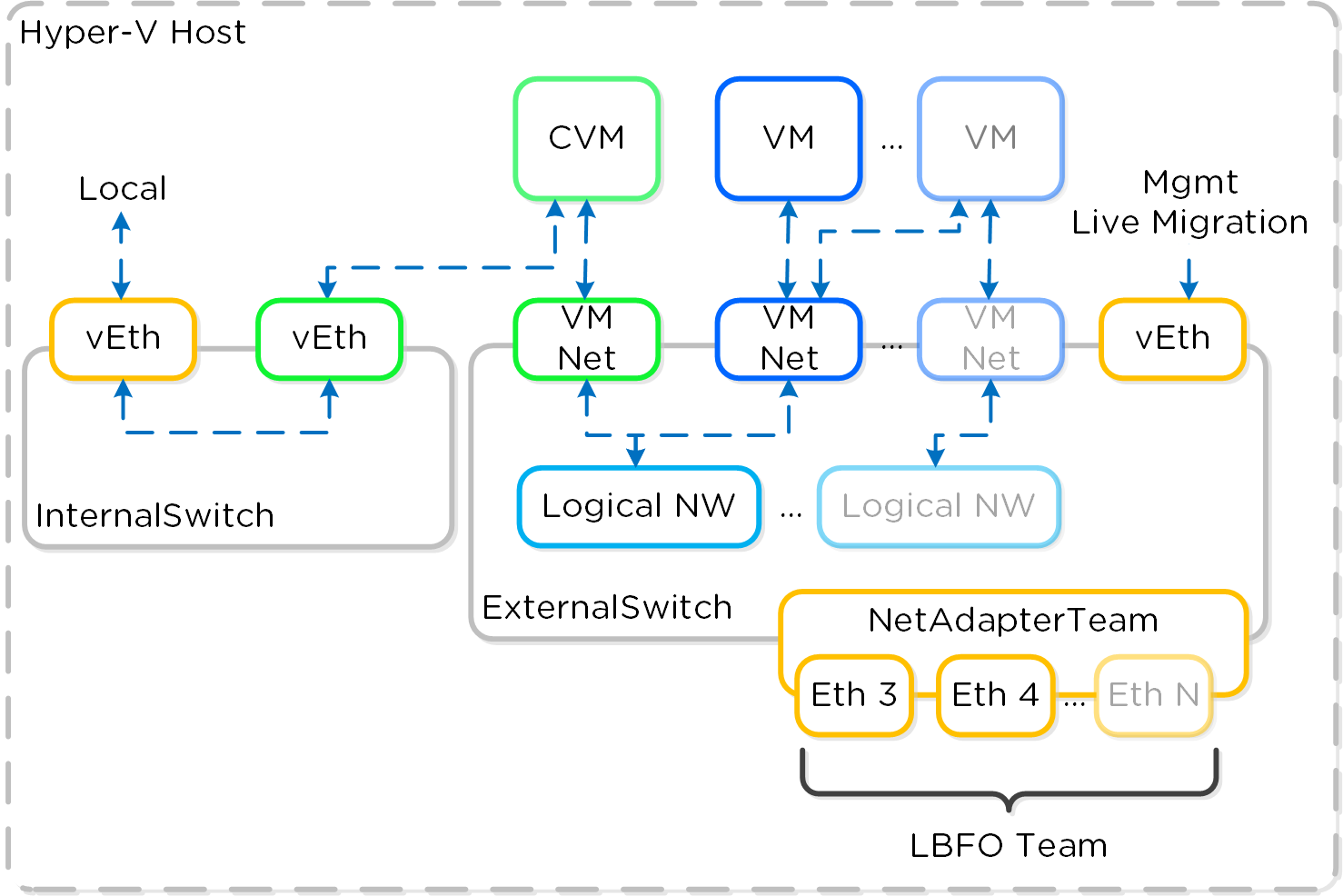

Each ESXi host has a local vSwitch which is used for intra-host communication between the Nutanix CVM and host. For external communication and VMs a standard vSwitch (default) or dvSwitch is leveraged.

The local vSwitch (vSwitchNutanix) is for local communication between the Nutanix CVM and ESXi host. The host has a vmkernel interface on this vSwitch (vmk1 - 192.168.5.1) and the CVM has an interface bound to a port group on this internal switch (svm-iscsi-pg - 192.168.5.2). This is the primary storage communication path.